Imagine a world where decisions affecting our lives—like a loan approval, a medical diagnosis, or even a prison sentence—are made by machines. Now picture asking, “Why did the AI say no?” and getting nothing but a blank stare in return. That’s not science fiction. It’s the unsettling reality of the black box problem in artificial intelligence. We trust AI with more and more power, but its decisions are often shrouded in mystery. For all its dazzling brilliance, AI sometimes leaves us in the dark, hungry for answers that never come.

The Rise of Artificial Intelligence

Artificial intelligence has surged into our daily lives with breathtaking speed. From recommending what movie you should watch next to helping doctors spot early signs of cancer, AI is making choices for us everywhere. These systems learn from mountains of data, spotting patterns and making decisions that often seem almost magical. But as their influence grows, so does our unease about how they actually work. The very complexity that makes them powerful also makes them hard to understand, even for the experts who build them.

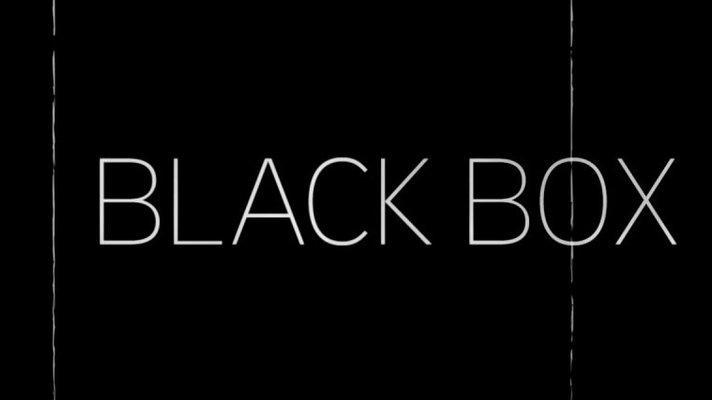

What Is the Black Box Problem?

The black box problem refers to the puzzling lack of transparency in how many AI systems make decisions. We can see what goes in—data, images, or questions—and what comes out—predictions, labels, or answers. But what happens in between is often invisible, hidden inside layers of mathematical calculations. It’s like tossing ingredients into a machine, pressing a button, and getting a cake, but never seeing the recipe. This opacity can be unsettling, especially when the stakes are high.

Why Are AI Models So Opaque?

Many modern AI systems, especially those using deep learning, are built on neural networks with millions or even billions of adjustable numerical parameters. During training, the network nudges the weights connecting its artificial “neurons” in response to data. These countless small adjustments add up in ways that are difficult to track or explain. Unlike a traditional program, where each step can be traced and justified, an AI model often follows a logic that feels almost alien to human reasoning. Even the people who design these systems can struggle to explain their behavior.

Real-World Consequences of the Black Box

The black box problem isn’t just a technical curiosity—it has real impacts on people’s lives. Imagine being denied a job because an AI flagged you as a “bad fit,” but no one can tell you why. Or a doctor relying on AI to diagnose a patient, but unable to say what factors led to the recommendation. These mysteries can erode trust, fuel anxiety, and even lead to injustice. When decisions can’t be explained, people feel powerless, sparking frustration and fear.

The Importance of Explainability

Explainability means being able to say why an AI system made a particular choice. This isn’t just about curiosity; it’s about fairness and accountability. If we can’t explain decisions, we can’t fix mistakes or spot biases. For example, if an AI system for hiring consistently favors one group over another, we need to know why. Explainability also matters for safety. In high-stakes fields like medicine or self-driving cars, knowing how an AI makes decisions can be a matter of life or death.

Deep Learning: The Heart of the Black Box

Deep learning, the powerhouse behind many recent AI breakthroughs, is especially notorious for being a black box. These systems are loosely inspired by the human brain and use layers of artificial neurons to process information. As data passes through these layers, it’s transformed in subtle, intricate ways. The result is astonishing accuracy in tasks like image recognition or language translation. The downside is that it’s almost impossible to pinpoint exactly what the system “learned” or why it made a certain decision.

Examples of Black Box Failures

There are already troubling examples of AI’s lack of transparency causing real harm. Skin-cancer detection systems trained mostly on images of lighter-skinned patients can perform worse on darker skin, and that kind of bias can go unnoticed until patients are misdiagnosed. Risk-assessment algorithms used in criminal justice have been criticized for recommending harsher treatment for people from certain neighborhoods, reflecting and amplifying existing inequalities. These failures highlight the danger of trusting decisions we can’t explain.

The Limits of Human Intuition

One reason the black box problem is so challenging is that human intuition often fails to grasp the vast complexity of AI models. While we like to think we’re good at spotting patterns, AI can find correlations in data that are invisible to the naked eye. Sometimes, the patterns AI uses don’t make sense to us at all. This mismatch between machine logic and human reasoning makes it even harder to unpack how decisions are made.

Efforts to Open the Black Box

Scientists and engineers are racing to make AI more transparent. Techniques such as feature importance analysis and saliency maps, part of a growing field known as “explainable AI” (XAI), try to shine a light inside the black box. These methods aim to highlight which parts of the input most influenced a decision, or to approximate a complex model with a simpler one that people can follow. While these tools are promising, they often provide only partial answers, and the gap between human understanding and machine reasoning persists.
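To make feature importance analysis more concrete, here is a minimal sketch of one model-agnostic variant, permutation feature importance. The approach shuffles one input column at a time and measures how much the model’s accuracy drops. A large drop suggests the model leans heavily on that feature. The `black_box` function, the loan-style data, and the helper names are hypothetical stand-ins. A real analysis would use a trained model and held-out data.

```python
import random
from typing import Callable, List, Sequence

Row = List[float]


def accuracy(model: Callable[[Row], int], rows: Sequence[Row], labels: Sequence[int]) -> float:
    """Fraction of rows where the model's prediction matches the label."""
    correct = sum(1 for row, y in zip(rows, labels) if model(row) == y)
    return correct / len(labels)


def permutation_importance(model: Callable[[Row], int], rows: Sequence[Row],
                           labels: Sequence[int], n_repeats: int = 10,
                           seed: int = 0) -> List[float]:
    """Average drop in accuracy when each feature column is shuffled."""
    rng = random.Random(seed)  # seeded so the scores are reproducible
    baseline = accuracy(model, rows, labels)
    importances = []
    for j in range(len(rows[0])):
        drops = []
        for _ in range(n_repeats):
            column = [row[j] for row in rows]
            rng.shuffle(column)  # break the link between feature j and the outcome
            shuffled = [row[:j] + [value] + row[j + 1:] for row, value in zip(rows, column)]
            drops.append(baseline - accuracy(model, shuffled, labels))
        importances.append(sum(drops) / n_repeats)
    return importances


# Hypothetical "black box": approves when income (feature 0) exceeds 50.
# It ignores feature 1 entirely, which the importance scores should reveal.
def black_box(row: Row) -> int:
    return 1 if row[0] > 50 else 0


rows = [[20, 3], [30, 7], [40, 1], [60, 9], [70, 2], [80, 5], [90, 4], [10, 8]]
labels = [black_box(r) for r in rows]

importances = permutation_importance(black_box, rows, labels)
print(importances)
```

The toy model ignores its second feature, so shuffling that column never changes a prediction, and its importance comes out as zero. Libraries such as scikit-learn offer a fuller implementation (`sklearn.inspection.permutation_importance`). Scores like these show which inputs matter, not why they matter, which is one reason such tools give only partial answers.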

Are Simpler Models the Solution?

Some experts argue that we should use simpler, more interpretable models whenever possible. Linear regression or small decision trees, for instance, make it easy to trace how each input contributed to a result. But there’s a trade-off: on complex tasks, these models can be less accurate than their more complex cousins. In fields where accuracy is critical, like cancer diagnosis or autonomous driving, relying solely on simple models might mean missing important insights. The challenge is finding the right balance between understanding and performance.
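Linear models are easy to explain partly because their output is a plain sum: each feature’s contribution is simply its weight times its value. The sketch below assumes a toy loan-scoring model with made-up weights. `WEIGHTS`, `BIAS`, and the feature names are hypothetical. The model has the same additive structure as linear regression, plus a threshold that turns the score into a decision.

```python
# Hypothetical weights for a toy loan-scoring model (not from any real lender).
WEIGHTS = {"income_10k": 0.5, "debt_ratio": -2.0, "years_employed": 0.25}
BIAS = -1.0


def score(applicant: dict) -> float:
    """Linear score: the bias plus the weighted sum of the features."""
    return BIAS + sum(WEIGHTS[name] * applicant[name] for name in WEIGHTS)


def explain(applicant: dict) -> list:
    """Each feature's exact contribution to the score, largest magnitude first."""
    contributions = {name: WEIGHTS[name] * applicant[name] for name in WEIGHTS}
    return sorted(contributions.items(), key=lambda item: abs(item[1]), reverse=True)


applicant = {"income_10k": 6, "debt_ratio": 0.5, "years_employed": 4}
decision = "approve" if score(applicant) > 0 else "decline"
print(decision, score(applicant))
for name, contribution in explain(applicant):
    print(f"{name}: {contribution:+.2f}")
```

This explanation is exact rather than approximate, because the bias plus the listed contributions add up to the score. A deep network gives up that kind of transparency in exchange for extra flexibility.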

AI in Healthcare: Trust and Transparency

Healthcare is a domain where the black box problem is especially urgent. Doctors need to trust the tools they use, and patients deserve to know why a diagnosis was made. If an AI recommends a treatment but can’t explain its reasoning, doctors may hesitate to follow its advice, and patients may lose confidence. Researchers are working on ways to build more transparent medical AI, but progress is slow. The stakes in healthcare are simply too high for blind trust.

Algorithmic Bias and Social Impacts

When AI systems learn from biased data, they can perpetuate and even worsen social inequalities. If we can’t explain decisions, it becomes much harder to spot and correct these biases. A hiring algorithm trained on resumes from a mostly male workforce might learn to favor male candidates, locking women out of opportunities. Without transparency, these injustices can remain hidden, quietly shaping the future.
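Even when a model’s internals stay opaque, its outcomes can still be audited. The minimal sketch below assumes we have a log of (group, selected) pairs from a screening model. It computes each group’s selection rate and compares the lowest rate with the highest. The groups, counts, and function name are hypothetical.

```python
from collections import defaultdict
from typing import Dict, Iterable, Tuple


def selection_rates(decisions: Iterable[Tuple[str, bool]]) -> Dict[str, float]:
    """Share of candidates in each group that the model selected."""
    totals: Dict[str, int] = defaultdict(int)
    selected: Dict[str, int] = defaultdict(int)
    for group, hired in decisions:
        totals[group] += 1
        if hired:
            selected[group] += 1
    return {group: selected[group] / totals[group] for group in totals}


# Hypothetical screening outcomes for two applicant groups.
decisions = [("A", True)] * 6 + [("A", False)] * 4 + [("B", True)] * 3 + [("B", False)] * 7
rates = selection_rates(decisions)
ratio = min(rates.values()) / max(rates.values())  # 1.0 means equal selection rates
print(rates, ratio)
```

A ratio well below 1.0 (here 0.5) flags a disparity worth investigating. By itself, however, it does not prove bias, and it cannot say why the model behaves this way. Answering that still requires looking inside the box.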

Regulatory Pressures and Legal Challenges

Governments around the world are waking up to the risks of black box AI. Laws like the European Union’s General Data Protection Regulation (GDPR) give people rights around certain automated decisions, including access to “meaningful information about the logic involved.” Regulators are demanding more transparency, especially when AI is used in sensitive areas like finance or criminal justice. Meeting these legal obligations is a major challenge for companies that depend on complex, opaque models.

The Role of Trust in AI Adoption

Trust is the invisible glue that holds society together, and it’s just as vital in our relationship with AI. If people don’t trust the systems making decisions about their lives, they won’t use them. Lack of transparency breeds suspicion and resistance. This is why building explainable, trustworthy AI isn’t just a technical problem—it’s a social and ethical imperative. Without public confidence, even the most advanced AI will struggle to find acceptance.

When Black Boxes Are Unavoidable

Sometimes, the complexity of the problem demands a black box solution. Certain tasks—like analyzing millions of medical images or predicting weather patterns—are simply too intricate for simple models. In these cases, the benefits of powerful AI may outweigh the drawbacks of opacity. But this doesn’t mean we should give up on seeking explanations; it just means we need smarter ways to peek inside the box.

Transparency Versus Trade Secrets

Companies often keep their AI algorithms secret to protect their competitive edge. This creates a tension between the need for transparency and the desire to safeguard intellectual property. Too much openness could expose valuable business secrets, but too little transparency risks public backlash and regulatory trouble. Finding the right balance is a delicate dance, and one that’s still being choreographed.

The Human Cost of Unexplained Decisions

At the heart of the black box problem are real people—job seekers, patients, students—whose lives are shaped by decisions they can’t understand. The frustration, confusion, and helplessness this creates can’t be measured in equations. When we hand over power to machines without demanding explanations, we risk eroding our sense of agency and fairness. The human cost is impossible to ignore.

Emerging Solutions and Future Directions

Researchers are exploring new ways to make AI more transparent, from designing inherently interpretable models to developing sophisticated visualization tools. Some are experimenting with “model distillation,” which trains a smaller, simpler model to mimic the behavior of a complex one, ideally producing something easier to inspect. Others are working on interactive AI systems that can answer questions about their choices. While progress is steady, the quest for true explainability is far from over.
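Here is a rough sketch of the distillation idea under simplifying assumptions. We treat a model as a teacher that we can only query, fit a much simpler student to imitate its answers, and then measure fidelity: the share of inputs on which the two agree. In practice, knowledge distillation usually trains a smaller neural network on the teacher’s outputs, often its predicted probabilities. Here the student is a one-rule decision stump instead, which is closer to what is sometimes called a global surrogate model. The `teacher` function and the input grid are hypothetical.

```python
from typing import List, Sequence, Tuple

Row = List[float]


def teacher(row: Row) -> int:
    """Stand-in for an opaque model (hypothetical): we only observe its outputs."""
    return 1 if row[0] + 0.3 * row[1] > 5 else 0


def fit_stump(rows: Sequence[Row], targets: Sequence[int]) -> Tuple[int, float, int, float]:
    """Find the single-feature threshold rule that best agrees with the targets.

    Returns (feature index, threshold, label predicted above the threshold, fidelity).
    """
    best = None
    for j in range(len(rows[0])):
        for threshold in sorted({row[j] for row in rows}):
            for above in (1, 0):
                preds = [above if row[j] > threshold else 1 - above for row in rows]
                agreement = sum(p == t for p, t in zip(preds, targets))
                if best is None or agreement > best[0]:
                    best = (agreement, j, threshold, above)
    agreement, j, threshold, above = best
    return j, threshold, above, agreement / len(rows)


# Query the teacher on a grid of inputs, then train the student on its answers.
rows = [[x, y] for x in range(10) for y in range(10)]
teacher_labels = [teacher(r) for r in rows]
feature, threshold, above, fidelity = fit_stump(rows, teacher_labels)
print(f"student rule: predict {above} if feature {feature} > {threshold} (fidelity {fidelity:.0%})")
```

On this toy grid, the student settles on a single threshold on feature 0 and agrees with the teacher on 92 of the 100 inputs. The 8 disagreements are the price of simplicity, because the readable rule only approximates what the teacher does.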

The Path Forward: Balancing Power and Clarity

The black box problem challenges us to rethink how we design and use artificial intelligence. As AI systems grow more powerful, we must demand clarity, accountability, and fairness. This journey will require collaboration between engineers, ethicists, regulators, and the public. The stakes are too high to settle for mystery. Will we insist on answers, or accept the darkness inside the box?