In a dim, robotic lab, a new kind of scientist is quietly changing the rules. This one doesn’t wear goggles or pull night shifts; it runs on models, code, and manipulators that never get bored or blink. Its latest feat isn’t a single eureka moment but a cascade: dozens of brand‑new crystalline materials made in about two weeks rather than months, each one proposed, planned, and produced with minimal human nudging. The claim sounds grand, almost provocative – an AI scientist doing what people could not – yet the proof is in the throughput and the follow‑through. The pace is the story, and the story is only just beginning.

The Hidden Clues

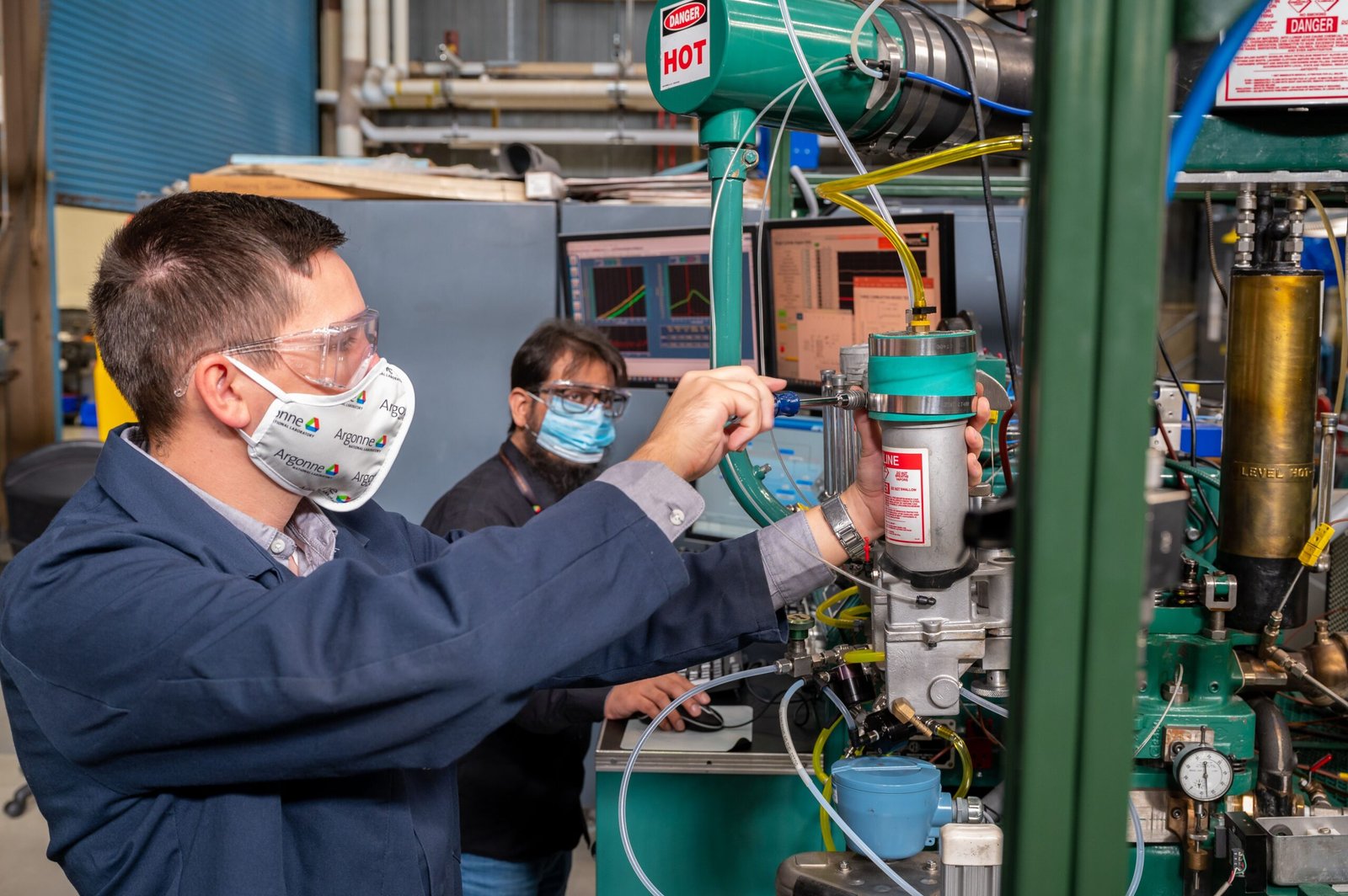

What do you call a scientist that never sleeps, reads everything, and tries a hundred ideas before your coffee cools? In materials labs, that scientist now looks like a closed loop: an AI that drafts a hypothesis, a planning system that writes a recipe, and robots that synthesize and test the result. When this “team of one” works, the drama isn’t in flickering lights but in the datasets, which churn out unexpected hits at a rate no grad student can match. The startling part isn’t that the system is smart; it’s that it’s relentless. The lab log fills with first‑ever compounds, the kind we used to chase for a season and sometimes miss altogether. That loop has already produced more than forty previously unmade materials in just over two weeks, succeeding on roughly seven out of ten attempts – an unheard‑of clip for traditional workflows.

Behind those hits stands an upstream engine that imagined millions of candidate crystals and flagged hundreds of thousands as stable enough to be worth a shot, multiplying the catalogue of known stable materials in one sweep. The AI’s predictions didn’t stay pixels on a screen; they became powders, pellets, and diffractograms – evidence that the loop does more than guess. It builds.

From Ancient Tools to Modern Science

Science has always been a story of better instruments, from abaci to accelerators. In the last few years, learning systems joined the list, jumping from protein structure prediction to protein design and helping redefine what counts as a feasible target in biochemistry. That shift was recognized with the 2024 Nobel Prize in Chemistry, underscoring how machine learning had moved from helper to co‑driver.

Meanwhile, in genomics and molecular biology, new models began to decipher regulatory DNA and invent functional proteins that never appeared in nature, compressing years of trial‑and‑error into days of computation. If biology is a library, these systems learned to write convincing new chapters instead of just summarizing old ones. That mindset – generate, test, iterate – is exactly what now powers the AI scientist in the lab.

Inside the Machine: How an AI Scientist Works

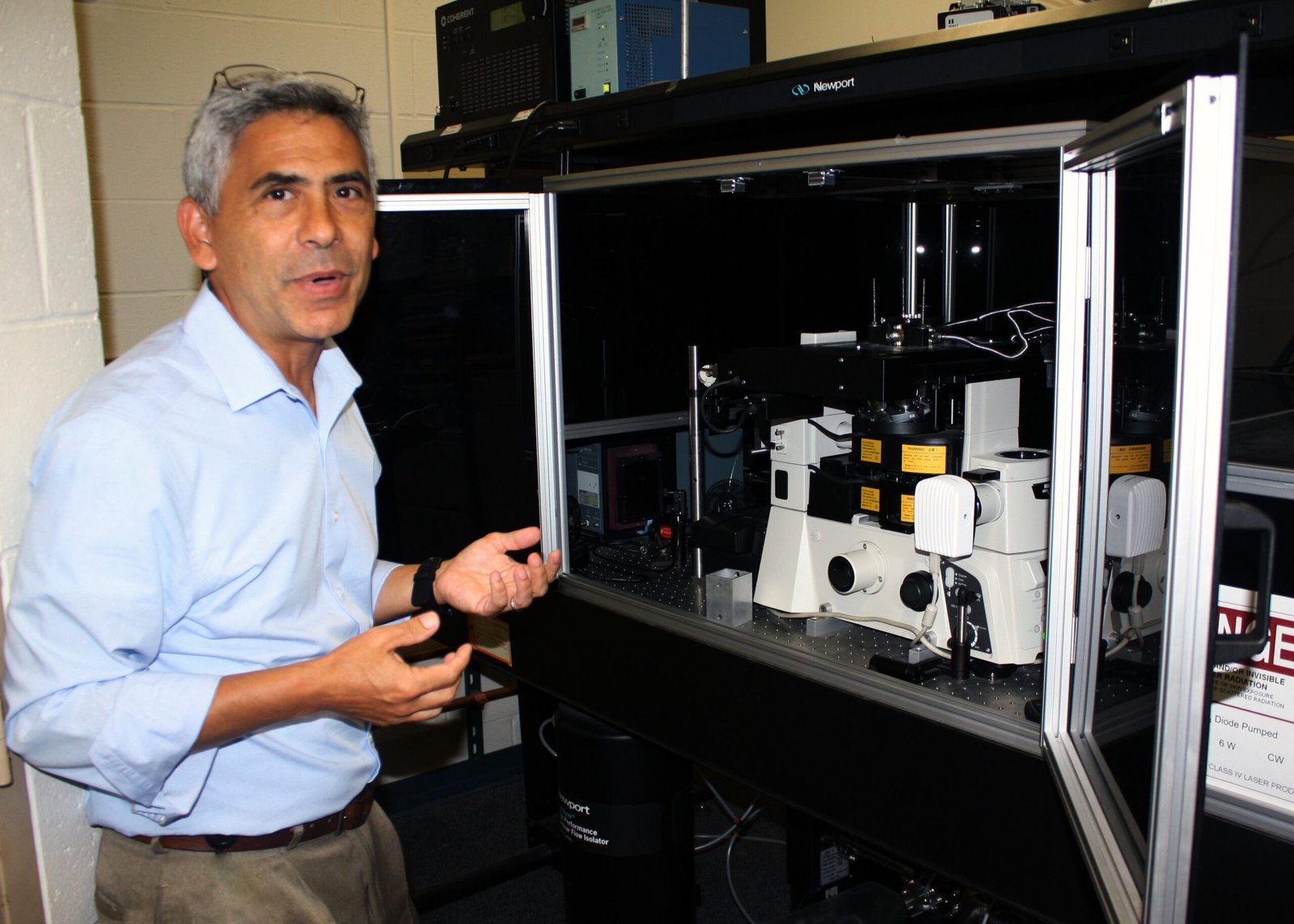

The pipeline looks simple on paper: propose, plan, produce, and probe. First, a model scores massive search spaces for promising structures – think millions of crystal blueprints where only a fraction should be stable. Next, a planner translates those blueprints into concrete recipes: temperatures, precursors, atmospheres, timings. Then robots mix, heat, and characterize, while an optimizer weighs results and steers the next trial toward higher odds.
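To make the four steps concrete, here is a minimal Python sketch of such a loop. It rests on loudly stated assumptions: the candidates, stability scores, candidate temperatures, and the simulated success rule are all invented for illustration, every function and class name is hypothetical, and the "optimizer" is a simple success-count weighting rather than the Bayesian or active-learning methods a real self-driving lab might use.

```python
# Hypothetical sketch of a propose -> plan -> produce -> probe loop.
# All names, scores, temperatures, and the success rule are illustrative assumptions.
import random
from dataclasses import dataclass


@dataclass
class Candidate:
    formula: str
    predicted_stability: float  # made-up model score; higher means "more likely stable"


@dataclass
class Recipe:
    formula: str
    temperature_c: int
    atmosphere: str


def propose(pool: list[Candidate], k: int) -> list[Candidate]:
    """Propose: keep the k candidates the model scores as most stable."""
    return sorted(pool, key=lambda c: c.predicted_stability, reverse=True)[:k]


def plan(candidate: Candidate, temp_weights: dict[int, float], rng: random.Random) -> Recipe:
    """Plan: choose a firing temperature, favouring ones that have worked before."""
    temps = list(temp_weights)
    temp = rng.choices(temps, weights=[temp_weights[t] for t in temps])[0]
    return Recipe(candidate.formula, temp, "air")


def produce_and_probe(recipe: Recipe, rng: random.Random) -> bool:
    """Produce + probe: a simulated stand-in for robotic synthesis and an X-ray check.
    Assumes, arbitrarily, that 900 °C is the sweet spot for every target."""
    p_success = max(0.1, 1.0 - abs(recipe.temperature_c - 900) / 400)
    return rng.random() < p_success


def run_loop(pool: list[Candidate], rounds: int, batch: int, seed: int = 0):
    """Run the closed loop; return every attempt and the learned temperature weights."""
    rng = random.Random(seed)
    temp_weights = {700: 1.0, 800: 1.0, 900: 1.0, 1000: 1.0}  # start with no preference
    remaining = list(pool)
    attempts: list[tuple[Recipe, bool]] = []
    for _ in range(rounds):
        for cand in propose(remaining, batch):
            recipe = plan(cand, temp_weights, rng)
            ok = produce_and_probe(recipe, rng)
            attempts.append((recipe, ok))
            if ok:
                temp_weights[recipe.temperature_c] += 1.0  # "optimizer": reinforce what worked
                remaining.remove(cand)  # synthesized; stop retrying this target
    return attempts, temp_weights


if __name__ == "__main__":
    demo_rng = random.Random(42)
    pool = [Candidate(f"Compound-{i}", demo_rng.random()) for i in range(20)]
    attempts, weights = run_loop(pool, rounds=4, batch=5)
    hits = sum(ok for _, ok in attempts)
    print(f"{hits} successes in {len(attempts)} attempts")
    print("learned temperature weights:", weights)
```

Over a few rounds the weights drift toward temperatures that produced hits, which is the "steer the next trial toward higher odds" idea in miniature. In a real lab, swapping the simulated `produce_and_probe` for instrument calls and the weighting for a proper optimizer is where much of the engineering effort would lie.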

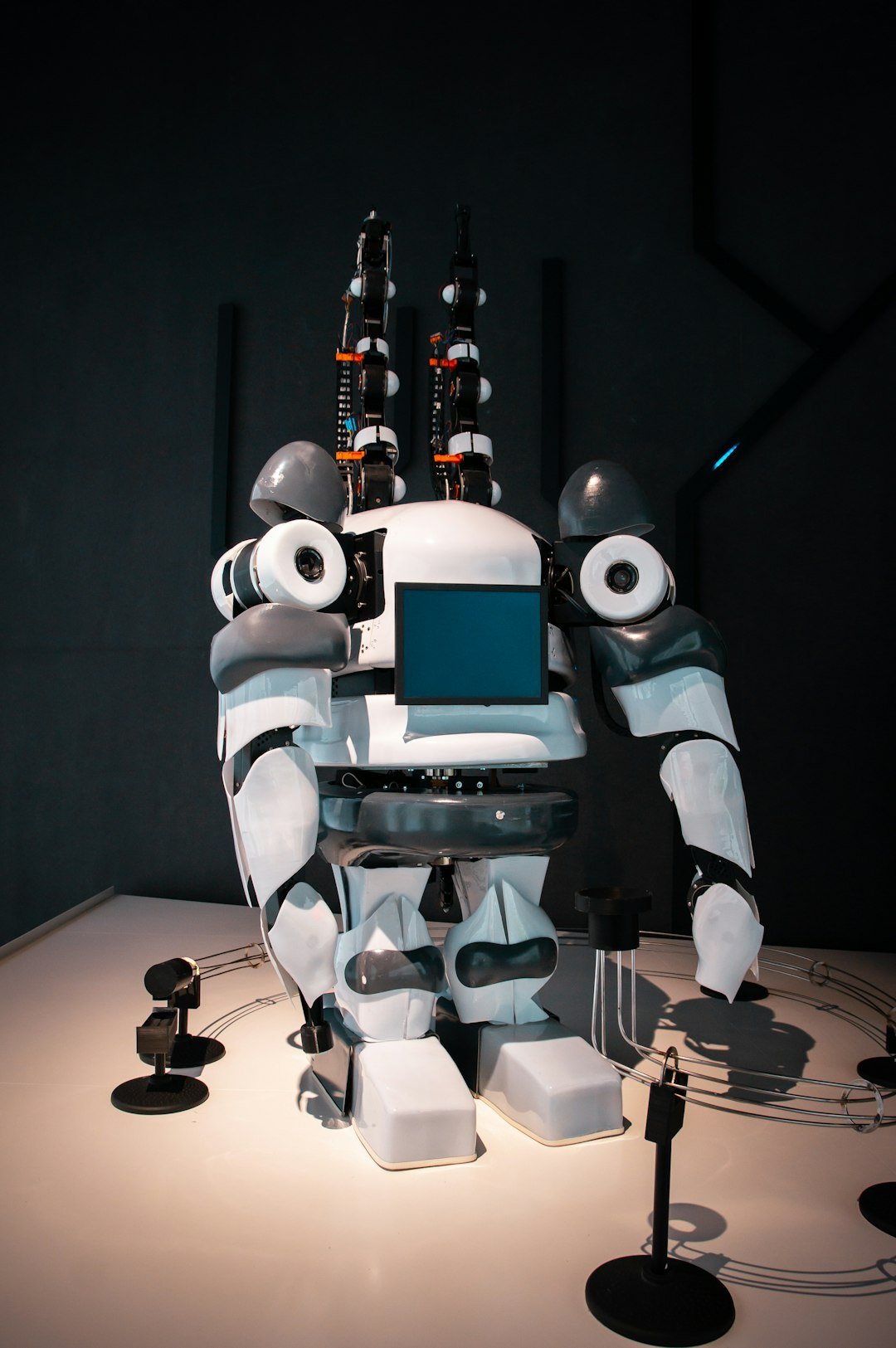

Increasingly, the “scientist” is embodied: humanoid or gantry robots that watch, measure, and adapt, adding dexterity to intelligence. One team even deployed a bipedal lab “supervisor” to learn human lab skills and keep the loop running smoothly – a sign that embodiment is coming for wet benches as surely as it came for factory floors. The point isn’t theatrics; it’s control and continuity, so the loop keeps learning when people go home.

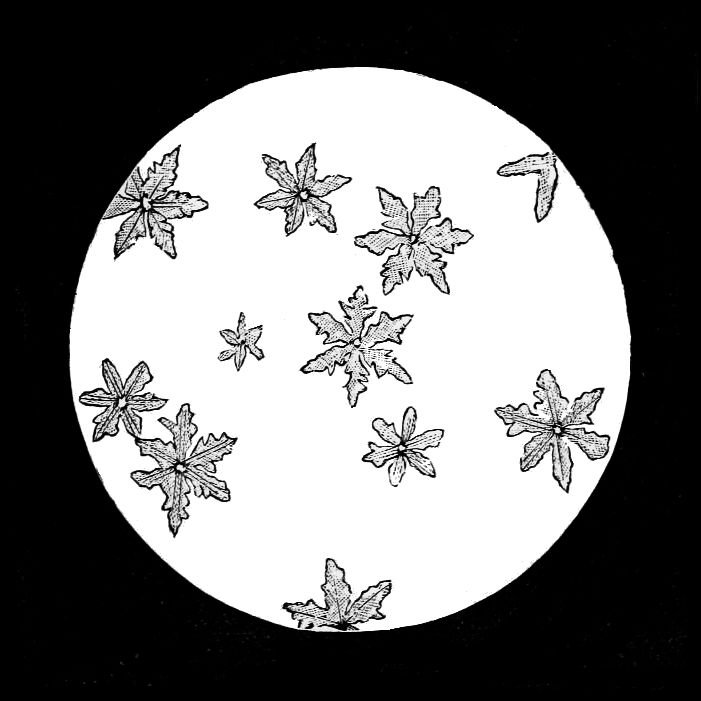

The Discovery at Hand

So what was discovered that no human could? Not a single headline material, but a burst of them – dozens of novel, laboratory‑made crystals validated in a matter of weeks, each selected by an AI that had already combed through a space too vast for any team to cover by hand. The achievement is scale coupled to synthesis: recipes that work, verified by X‑ray signatures, accumulating at a pace that resets expectations for what a quarter’s worth of research can deliver. On the margins, a similar AI‑driven loop in a separate electronic‑materials effort even surfaced a previously unseen polymer polymorph – exactly the kind of subtle structural surprise a human might skip past when the clock is ticking.

In the old rhythm, you might perfect one compound before a grant ran out. In the new rhythm, you explore a family tree and still have time to pick the most promising branch. That shift, not a single miracle material, is the discovery.

Why It Matters

Traditional discovery is a slog: limited reading, limited hands, and a hard ceiling on how many experiments can be run before fatigue blurs the data. The AI scientist breaks that ceiling by pairing breadth of search with disciplined iteration, raising the odds that rare, high‑value candidates won’t be missed. It’s not just faster; it’s different – less about intuition over a few guesses, more about systematic exploration over an entire landscape. That change can ripple into batteries, catalysis, photonics, and computing, where the right crystal or polymer can bend an industry’s cost curve.

- An AI model enumerated 2.2 million plausible crystals and flagged 381,000 as likely stable – orders of magnitude beyond any human scan.
- An autonomous lab then turned a meaningful slice of those ideas into real, measurable materials in under three weeks, succeeding on about seven out of ten attempts.
- In polymer electronics, an adaptive AI loop boosted a key device figure‑of‑merit by roughly one‑and‑a‑half times within a few dozen runs, while surfacing structural rules for future designs.

Global Perspectives

AI‑guided discovery isn’t confined to a single country or campus; from California to Europe to Asia, labs are wiring up self‑driving platforms and open‑sourcing the software guts that keep them coordinated. Efforts like AlabOS show the boring but vital backbone – workflow managers, resource schedulers, and experiment queues – that let autonomous labs scale beyond clever demos. At the policy level, global coalitions are pushing for shared guardrails, arguing that certain AI behaviors should be off‑limits even as scientific uses expand. The goal is to encourage safe acceleration, not a chaotic sprint.
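The "boring backbone" is easier to picture with a toy example. The sketch below is hypothetical and does not reflect AlabOS’s actual interfaces: the `Task` and `LabScheduler` names, the instrument names, and the priority rule are all assumptions. It shows only the core idea of an experiment queue paired with a resource scheduler: a step starts when the instrument it needs is idle, and everything else waits its turn.

```python
# Hypothetical toy scheduler illustrating an experiment queue plus resource locking.
# It is NOT the AlabOS API; names and the priority rule are assumptions.
import heapq
from dataclasses import dataclass, field


@dataclass(order=True)
class Task:
    priority: int                       # lower number runs sooner
    name: str = field(compare=False)
    device: str = field(compare=False)  # instrument the step needs, e.g. "furnace"


class LabScheduler:
    """Hands each queued task to its instrument only when that instrument is idle."""

    def __init__(self, devices: list[str]):
        self.free = set(devices)
        self.queue: list[Task] = []           # min-heap ordered by priority
        self.running: dict[str, Task] = {}    # device -> task currently using it

    def submit(self, task: Task) -> None:
        heapq.heappush(self.queue, task)

    def dispatch(self) -> list[Task]:
        """Start every queued task whose device is free; the rest keep waiting."""
        started, waiting = [], []
        while self.queue:
            task = heapq.heappop(self.queue)
            if task.device in self.free:
                self.free.remove(task.device)
                self.running[task.device] = task
                started.append(task)
            else:
                waiting.append(task)
        for task in waiting:
            heapq.heappush(self.queue, task)
        return started

    def finish(self, device: str) -> Task:
        """Mark the task on `device` as done and release the instrument."""
        task = self.running.pop(device)
        self.free.add(device)
        return task


if __name__ == "__main__":
    lab = LabScheduler(["furnace", "xrd"])
    lab.submit(Task(2, "anneal sample B", "furnace"))
    lab.submit(Task(1, "anneal sample A", "furnace"))
    lab.submit(Task(1, "scan sample C", "xrd"))
    print([t.name for t in lab.dispatch()])  # A and C start; B waits for the furnace
    lab.finish("furnace")
    print([t.name for t in lab.dispatch()])  # now B gets the furnace
```

A production system would also have to handle failures, retries, and samples moving between instruments across multi-step workflows; this sketch deliberately leaves those out.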

There are also biosecurity wake‑up calls: researchers recently showed AI can help design working bacteriophages, highlighting how the same creativity that speeds up cures could complicate defenses. That’s a reminder that the “AI scientist” needs a matching “AI ethicist” and better screening, oversight, and rapid response. Progress and prudence have to advance together.

Limits and Skepticism

It’s tempting to declare the human scientist obsolete, but the evidence says otherwise. Independent assessments this year found that general‑purpose AIs still stumble at crucial research steps – especially experimental design, error analysis, and judging when a surprising result is too good to be true. Models hallucinate, miss confounders, and can lock onto spurious patterns if left unmonitored. The loop works best when people set goals, review data quality, and ask the uncomfortable questions only experience brings.

Even the headline achievements carry caveats: predictions outpace synthesis; many candidates will never matter outside a paper; and data hunger can bias models toward what’s already well‑measured. In other words, the AI scientist is a force multiplier, not a magic wand. The better we understand its blind spots, the more effectively we can put its strengths to work.

The Future Landscape

Next‑generation systems are turning the loop into a team sport for machines: multiple agents brainstorming ideas, critiquing plans, and proposing validation steps before a robot touches a sample. Expect that to mesh with cheaper instrumentation and more precise automation, spreading self‑driving labs into energy storage, separations, and environmental remediation. If the last cycle was about proving that AI can discover, the next one is about deploying that ability where it changes supply chains, not just conference slides.

The challenges are clear: trustworthy data sharing, fair credit when code sparks a breakthrough, and global norms that encourage open science without opening doors to misuse. Solve those, and the phrase “impossible to find” will start sounding quaint. The race will be to ask better questions, faster.

Conclusion

If this future excites you, there are simple ways to help steer it well. Support open materials and biology datasets, because good data is the fuel that keeps discovery honest and reproducible. Back university makerspaces and community labs that teach the next generation to work safely with automation, and encourage journals to require full protocol and code releases. Ask your representatives to fund transparent, auditable autonomous labs – and to pair that funding with clear biosecurity and lab‑safety standards. Finally, if you work in industry, pilot an AI‑guided experiment alongside a conventional one and compare results; let evidence, not hype, set your roadmap.

Suhail Ahmed is a passionate digital professional and nature enthusiast with over 8 years of experience in content strategy, SEO, web development, and digital operations. Alongside his freelance journey, Suhail actively contributes to nature and wildlife platforms like Discover Wildlife, where he channels his curiosity for the planet into engaging, educational storytelling.

With a strong background in managing digital ecosystems — from ecommerce stores and WordPress websites to social media and automation — Suhail merges technical precision with creative insight. His content reflects a rare balance: SEO-friendly yet deeply human, data-informed yet emotionally resonant.

Driven by a love for discovery and storytelling, Suhail believes in using digital platforms to amplify causes that matter — especially those protecting Earth’s biodiversity and inspiring sustainable living. Whether he’s managing online projects or crafting wildlife content, his goal remains the same: to inform, inspire, and leave a positive digital footprint.