Imagine a world where a self-driving car swerves to avoid an accident but instead causes harm to an innocent pedestrian. Or a medical AI wrongly diagnoses a patient, leading to dangerous consequences. These are not scenes from a distant tomorrow—they’re real dilemmas we face today, as artificial intelligence becomes deeply woven into our daily lives. As the line between human and machine decision-making blurs, a burning question emerges: When AI goes wrong, who should be held accountable? This question isn’t just technical or legal—it’s profoundly human, stirring up emotions of fear, hope, and responsibility that touch us all.

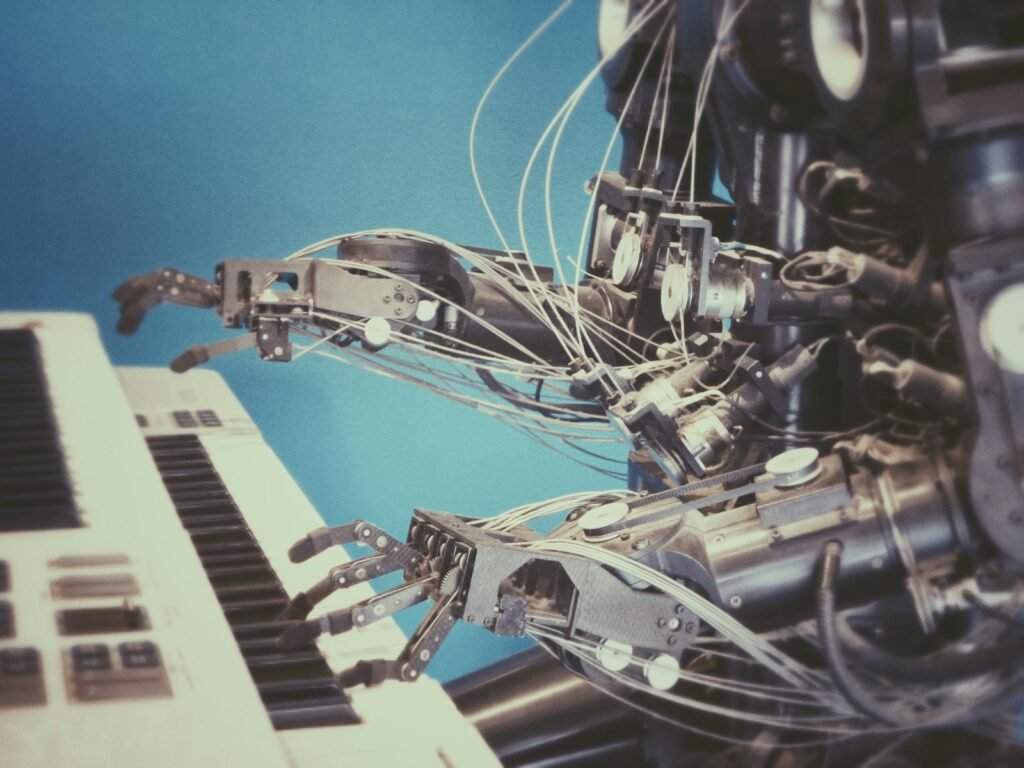

The Rise of Machine Decision-Making

Artificial intelligence is no longer confined to science fiction; it’s steering cars, predicting diseases, deciding who gets a loan, and even helping judges in courtrooms. These systems are built to analyze huge amounts of data and make decisions faster than any person could. The promise is dazzling: fewer human errors, more efficient processes, and even lives saved. However, the more we rely on AI, the more we have to face its fallibility. Machine decisions, while often impressive, can fail in surprising ways, sometimes with devastating effects. As machines take on more responsibility, the stakes rise with them.

Understanding AI Mistakes: More Than Glitches

When AI makes a mistake, it’s rarely as simple as a bug in the code. Sometimes, these errors happen because the data used to train the AI was flawed or biased. Other times, the machine encounters a situation it was never prepared for. For example, an image recognition AI might misidentify a stop sign if it’s partially covered by graffiti—something it never saw in its training data. These mistakes can be subtle or dramatic, but they always raise the question: is the machine truly at fault, or is it the humans who designed and trained it?

Who Designs and Trains the Machines?

AI systems do not emerge from thin air. Behind every algorithm is a team of engineers, data scientists, and company executives making thousands of decisions. They choose what data to use, what goals to optimize for, and how much risk is acceptable. When a machine makes a mistake, it’s often because of these early choices. For instance, if a bank’s AI unfairly denies loans to certain applicants, it may reflect hidden biases in the training data—a human oversight, not a machine’s intention. The people who create and deploy AI systems play a pivotal role in shaping their behavior, for better or worse.

The Role of Companies: Corporate Accountability

Corporations are the main drivers behind most modern AI systems. They have enormous power—and thus, enormous responsibility. When a self-driving car causes an accident, or a health app misdiagnoses a condition, the companies behind them can face lawsuits, reputational damage, and regulatory scrutiny. Some firms try to limit their liability by warning users about the risks or forcing them to accept terms of service, but public pressure is mounting for stronger accountability. After all, if a company profits from an AI’s decisions, shouldn’t it also bear the costs when things go wrong?

The Legal Labyrinth: Laws Struggling to Keep Up

Our legal systems are struggling to keep pace with AI development. Traditional laws often assume that a human makes every decision, but with AI, that assumption crumbles. Courts now face tough questions: Can a machine be liable for harm? Should the creators, the users, or the companies be held responsible? Different jurisdictions are experimenting with new regulations, such as the European Union’s AI Act, which sorts AI systems by risk level and imposes stricter obligations on higher-risk uses in an attempt to balance innovation with safety. Still, there’s no global consensus, and the law often lags behind the technology it seeks to govern.

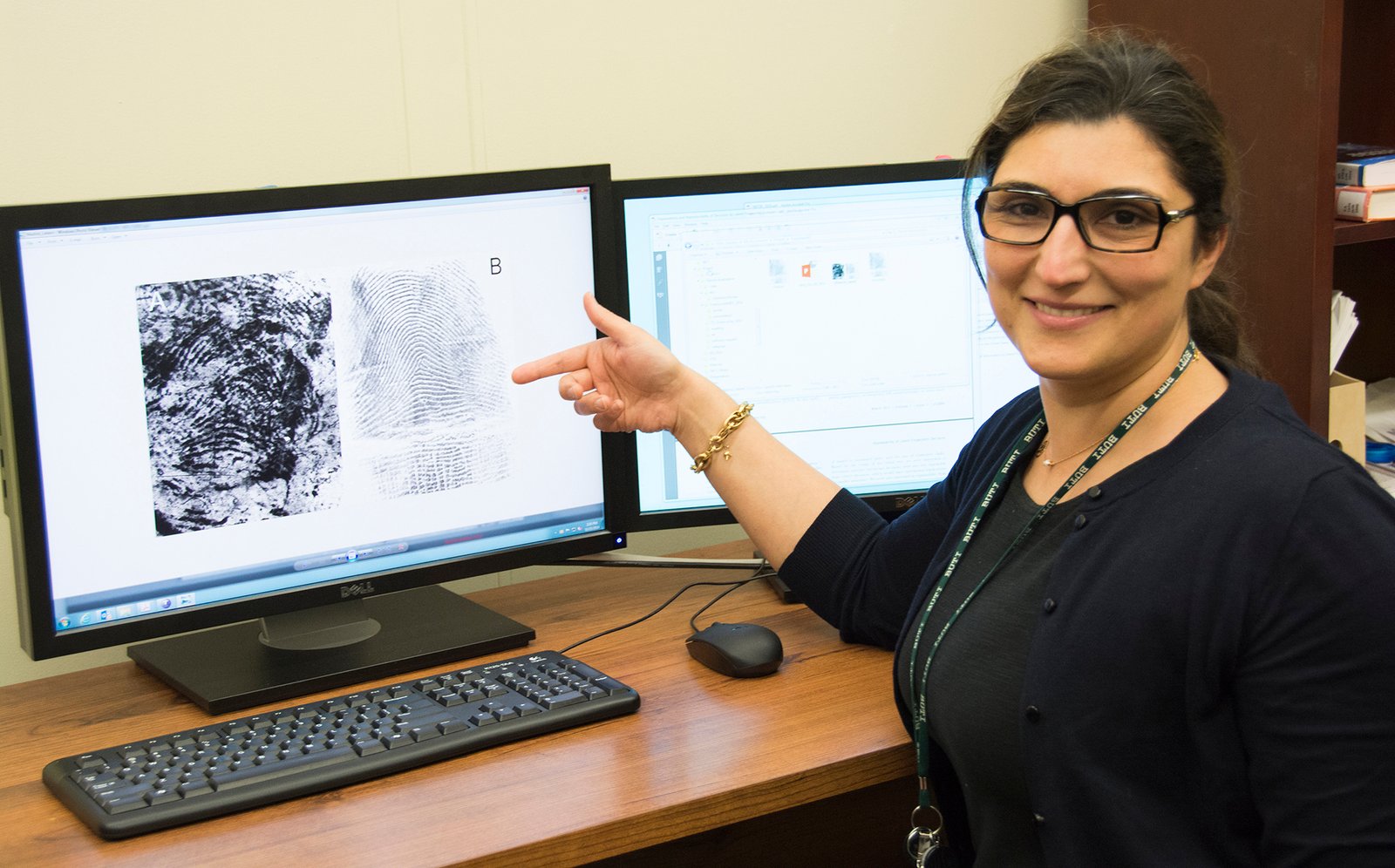

The “Black Box” Problem: When No One Knows Why

One of the most challenging issues with modern AI—especially deep learning systems—is that even their creators don’t always understand how they reach their decisions. These so-called “black box” models process information in complex ways that defy simple explanation. Imagine a judge asking an AI to explain why it denied bail to a defendant, only to receive an answer no one can decipher. This lack of transparency makes assigning responsibility incredibly difficult. Without clear explanations, how can anyone be held accountable?

Human Oversight: The Last Line of Defense

Some experts argue that humans must always have the final say in critical decisions made by AI. This is known as “human-in-the-loop” oversight. For example, airlines require pilots to monitor autopilot systems and intervene if something goes wrong. In medicine, doctors review AI-generated diagnoses before acting on them. This approach helps catch mistakes before they cause harm—but it’s not foolproof. Human overseers can become over-reliant on AI, leading to complacency or missed errors. The balance between trusting machines and keeping humans in control is a delicate one.
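To make the idea concrete, here is a minimal sketch of a human-in-the-loop gate. It is an illustration under stated assumptions, not how any real airline or hospital system works: the names `Recommendation`, `needs_human_review`, and `decide`, and the 0.90 cutoff, are all hypothetical.

```python
from dataclasses import dataclass

# Hypothetical cutoff: below this confidence, a person must review the case.
REVIEW_THRESHOLD = 0.90


@dataclass
class Recommendation:
    case_id: str
    label: str         # what the model proposes
    confidence: float  # model's self-reported confidence, 0.0 to 1.0
    critical: bool     # True for high-stakes decisions (e.g. a diagnosis)


def needs_human_review(rec: Recommendation, threshold: float = REVIEW_THRESHOLD) -> bool:
    """Critical or low-confidence recommendations always go to a person."""
    return rec.critical or rec.confidence < threshold


def decide(rec: Recommendation, reviewer=None) -> dict:
    """Return the final decision plus a record of who made it."""
    if needs_human_review(rec):
        if reviewer is None:
            raise ValueError(f"case {rec.case_id} requires a human reviewer")
        final_label = reviewer(rec)  # the human may accept or override
        decided_by = "human"
    else:
        final_label = rec.label
        decided_by = "model"
    return {"case_id": rec.case_id, "label": final_label, "decided_by": decided_by}


if __name__ == "__main__":
    routine = Recommendation("c1", "approve", 0.97, critical=False)
    scan = Recommendation("c2", "benign", 0.99, critical=True)
    print(decide(routine))
    print(decide(scan, reviewer=lambda rec: "needs biopsy"))
```

A gate like this cannot prevent the complacency described above, since a reviewer can still rubber-stamp the machine’s suggestion. What it does provide is a record of who made each final call, which is the starting point for any conversation about accountability.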

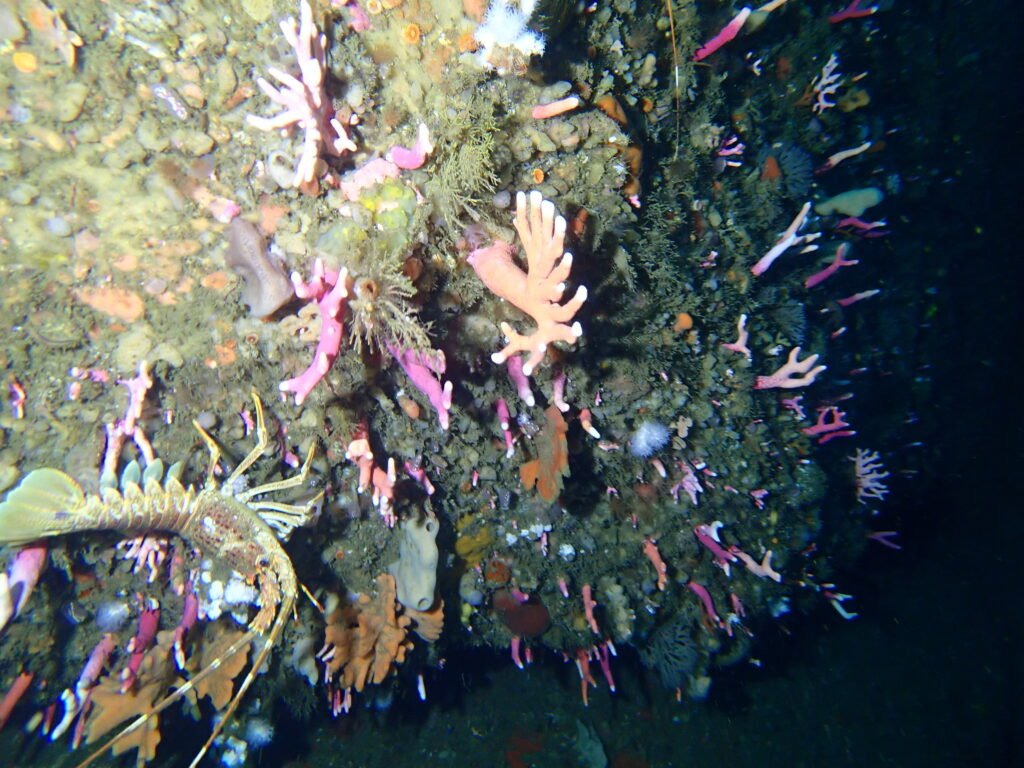

Bias and Discrimination: When AI Amplifies Inequality

A shocking truth about AI is that it can amplify existing biases and discrimination. If an AI is trained on data that reflects societal prejudices, it can make unfair decisions—such as denying jobs to certain groups or misidentifying people of color in facial recognition systems. These mistakes don’t just harm individuals; they erode public trust in technology as a whole. Addressing bias in AI is not only a technical challenge but an ethical imperative. It forces us to confront deep questions about justice, fairness, and equality in a world increasingly run by machines.
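One simple way to spot this kind of unfairness is to compare how often each group receives a favorable outcome. The sketch below computes a disparate impact ratio: the lowest group approval rate divided by the highest. The data and function names are made up for illustration. The 0.8 cutoff echoes the “four-fifths” rule of thumb from U.S. employment guidelines, and passing it does not prove a system is fair.

```python
from collections import defaultdict


def approval_rates(records):
    """records: iterable of (group, approved) pairs -> {group: approval rate}."""
    totals = defaultdict(int)
    approved = defaultdict(int)
    for group, ok in records:
        totals[group] += 1
        approved[group] += int(ok)
    return {group: approved[group] / totals[group] for group in totals}


def disparate_impact_ratio(records):
    """Lowest group approval rate divided by the highest (1.0 means parity)."""
    rates = approval_rates(records)
    highest = max(rates.values())
    if highest == 0:
        return 1.0  # nobody was approved, so there is no gap between groups
    return min(rates.values()) / highest


if __name__ == "__main__":
    # Invented example: group A approved 8 of 10 times, group B 4 of 10.
    decisions = (
        [("A", True)] * 8 + [("A", False)] * 2
        + [("B", True)] * 4 + [("B", False)] * 6
    )
    ratio = disparate_impact_ratio(decisions)
    print(f"ratio = {ratio:.2f}")
    if ratio < 0.8:
        print("Approval rates differ enough to warrant a closer look.")
```

A low ratio is a signal to investigate, not a verdict: the gap may trace back to the training data, the choice of features, or the goal the system was asked to optimize.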

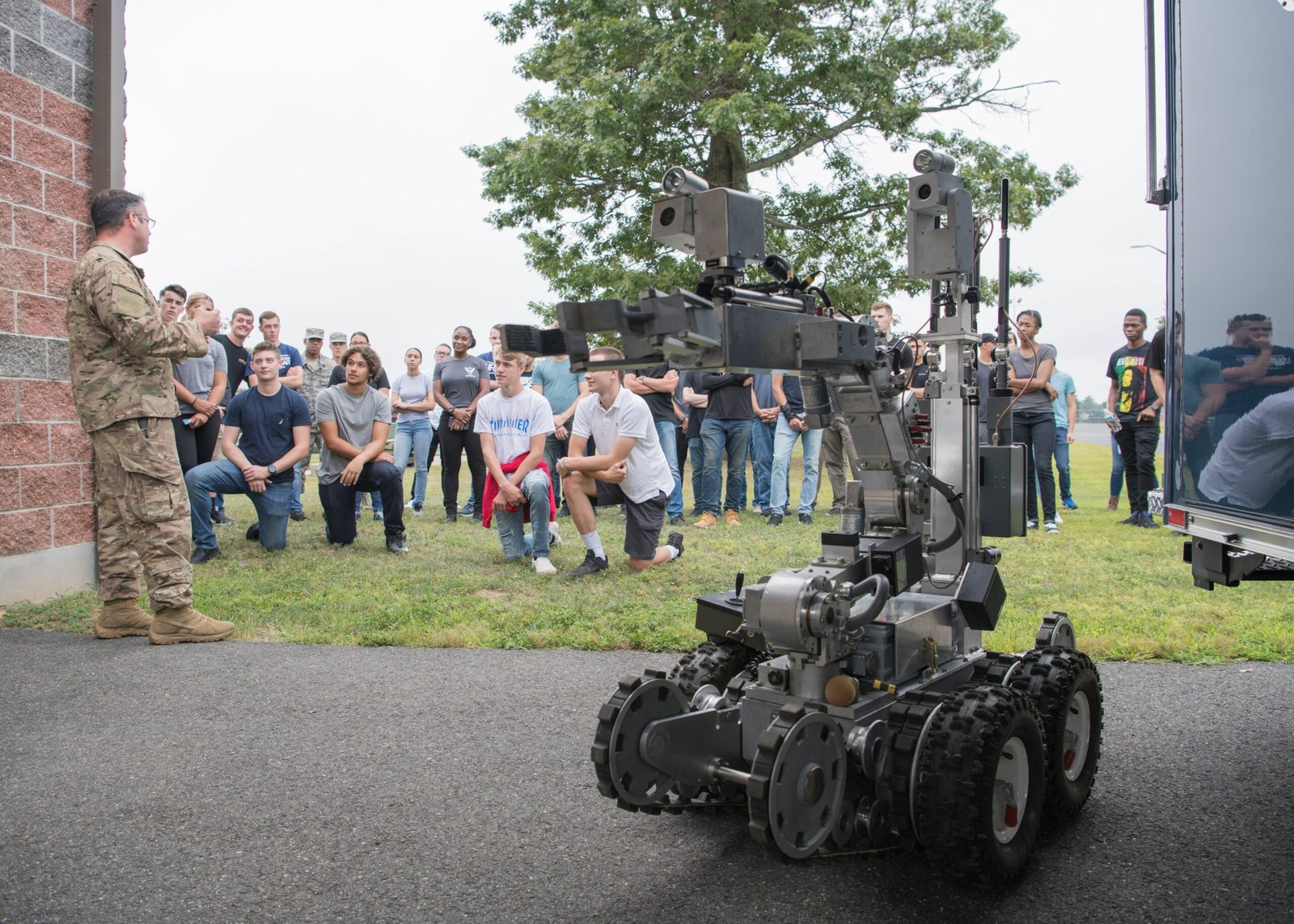

The Ethics of Autonomous Weapons

Perhaps the most chilling test of AI accountability lies in the realm of autonomous weapons: machines that can decide, without human input, whom to target and kill. International debates rage about whether such systems should even exist. If a drone mistakenly attacks civilians, who should be held accountable: the programmer, the commander, or the machine itself? The possibility of machines making life-and-death decisions raises moral questions unlike any we have faced before, pushing the boundaries of what it means to be responsible.

Regulating the Future: The Call for Global Standards

As AI becomes more powerful and widespread, the call for regulation grows louder. Industry leaders, governments, and advocacy groups are grappling with how to create rules that protect people without stifling innovation. Some push for transparency requirements, ethical audits, and strict testing before deployment. Others argue for international agreements—like those for nuclear weapons—to prevent the misuse of AI on a global scale. The challenge is finding solutions that are both effective and adaptable to rapidly changing technology.

Personal Responsibility: Users in the Spotlight

While much attention is focused on companies and developers, users of AI also bear some responsibility. Whether it’s a doctor relying on an AI tool, a driver using an autopilot feature, or a judge consulting sentencing software, individuals must use these systems wisely and ethically. Blind trust in machines can lead to dangerous outcomes. Education and awareness are critical so that users understand both the power and the limitations of the tools at their fingertips.

The Human Cost: Stories Behind the Mistakes

Behind every AI mistake, there are real people—families affected by accidents, patients harmed by wrong diagnoses, individuals denied opportunities. Their stories remind us that accountability isn’t just about laws or technology but about protecting human dignity and well-being. As we marvel at the wonders of artificial intelligence, we must not lose sight of those who pay the price when things go wrong.

Building Trust: The Path Forward

For AI to truly benefit society, people need to trust that it will be used responsibly. This means more than just clever algorithms; it requires transparency, robust oversight, and a willingness to admit mistakes. As a line widely attributed to WordPress co-founder Matt Mullenweg puts it, “Technology is best when it brings people together.” Building a future where humans and machines can safely coexist is not just a technical challenge but a moral one. Who do you believe should be accountable when a machine makes a mistake?