Imagine a world where machines not only obey commands but also think, learn, and adapt—where the line between imagination and intelligence blurs with each click and keystroke. The story of artificial intelligence (AI) is not just a tale of technology; it’s a chronicle of human curiosity, ambition, and the relentless drive to understand our own minds. From the earliest dreams of mechanical brains to the astonishing breakthroughs of modern learning algorithms, the journey of AI is filled with moments of wonder, skepticism, and awe. Let’s step into this extraordinary saga, where logic meets creativity and machines awaken to possibility.

The Seeds of Imagination: Early Dreams of Thinking Machines

Long before computers existed, philosophers and inventors dreamed of artificial minds. Ancient myths told of automata—mechanical beings that could move, act, and even converse. These stories, from the talking statues of Egypt to the legendary golems of Jewish folklore, reveal humanity’s age-old fascination with creating life from lifelessness. By the 17th century, visionaries like René Descartes began to ponder whether thought could be reduced to mechanical rules, sparking debates that would echo through centuries. It was this blend of fantasy and reason that first planted the seeds for what we now call artificial intelligence.

The Age of Logic: Alan Turing and the Universal Machine

The 1930s brought a seismic shift. In 1936 Alan Turing, a British mathematician, described an abstract device now known as the Turing machine: a simple yet powerful model that can carry out any computation expressible as a step-by-step procedure. The implication was radical: if reasoning could be described by rules, then a machine could, in principle, follow them. Turing’s 1950 paper, “Computing Machinery and Intelligence,” boldly asked, “Can machines think?” In it he proposed the imitation game, now called the Turing Test, a challenge to see whether a machine could imitate human responses well enough to fool an interrogator. This concept electrified scientists and set the stage for AI’s first real steps.
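
To make the idea of an abstract rule-following device concrete, here is a minimal sketch of a one-tape machine in Python. It is an illustration rather than Turing's own formulation: the function name `run_turing_machine`, the rule table `FLIP_RULES`, and the `max_steps` safety limit are all assumptions made for this example.

```python
from collections import defaultdict


def run_turing_machine(rules, tape, state="start", blank="_", max_steps=1000):
    """Run a one-tape machine and return the tape contents without blanks.

    `rules` maps (state, symbol) -> (symbol_to_write, move, next_state),
    where move is "L" or "R". The machine stops in the state "halt",
    or after `max_steps` steps (a safety limit added for this sketch).
    """
    # The tape is unbounded: any cell never written to reads as blank.
    cells = defaultdict(lambda: blank, enumerate(tape))
    head = 0
    for _ in range(max_steps):
        if state == "halt":
            break
        symbol = cells[head]
        write, move, state = rules[(state, symbol)]
        cells[head] = write
        head += 1 if move == "R" else -1
    return "".join(cells[i] for i in sorted(cells)).strip(blank)


# Hypothetical rule table: flip every bit, then halt at the first blank cell.
FLIP_RULES = {
    ("start", "0"): ("1", "R", "start"),
    ("start", "1"): ("0", "R", "start"),
    ("start", "_"): ("_", "R", "halt"),
}

result = run_turing_machine(FLIP_RULES, "10110")
print(result)  # prints 01001
```

The machine itself knows nothing about bits; all of its behavior lives in the rule table, so swapping in a different table yields a different computation.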

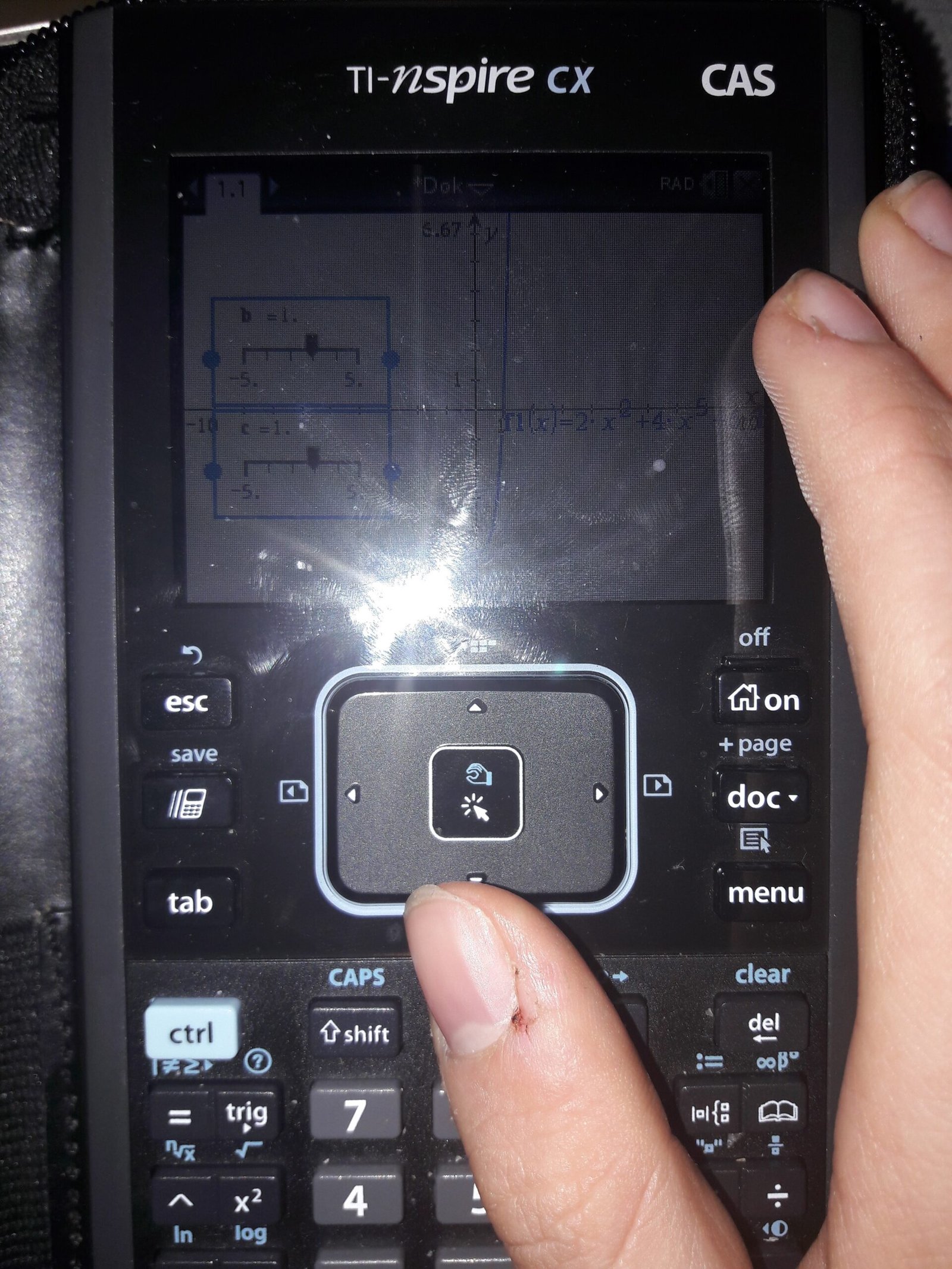

The First Logic Machines: From Chess to Language

By the 1950s and 60s, researchers began building the first programs that could perform tasks once thought uniquely human. Early programs played checkers and chess, solved mathematical puzzles, and even attempted to translate languages. The Logic Theorist, created in 1956 by Allen Newell, Herbert Simon, and Cliff Shaw, could prove mathematical theorems, a striking demonstration that machines could carry out formal reasoning. These programs relied on symbolic logic, manipulating abstract symbols according to strict rules, much like following a recipe. It was a thrilling time, as scientists believed that understanding and intelligence could be boiled down to logic and rules.
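
The flavor of rule-based symbol manipulation can be sketched in a few lines of Python. This is a generic forward-chaining loop and not a reconstruction of the Logic Theorist; the function `forward_chain`, the `RULES` list, and the fact names are hypothetical and chosen only for illustration.

```python
def forward_chain(facts, rules):
    """Apply if-then rules repeatedly until no new facts can be derived."""
    known = set(facts)
    changed = True
    while changed:
        changed = False
        for premises, conclusion in rules:
            # A rule fires when all of its premises are already known.
            if conclusion not in known and set(premises) <= known:
                known.add(conclusion)
                changed = True
    return known


# Hypothetical rules: each entry is (premises, conclusion).
RULES = [
    (("socrates_is_human",), "socrates_is_mortal"),
    (("socrates_is_mortal", "mortals_die"), "socrates_dies"),
]

derived = forward_chain({"socrates_is_human", "mortals_die"}, RULES)
print(sorted(derived))
```

The program never "understands" what the symbols mean. It only checks whether the premises of a rule are present and, if so, adds the conclusion, which is exactly the strength and the limitation of the symbolic approach.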

The Rise and Fall of Early AI Hopes

Excitement soared as AI systems conquered games and mathematical problems. Some researchers predicted that human-level AI was just around the corner. Yet reality proved stubborn. Early AI struggled with the unpredictability of the real world: a program could beat a novice at chess, but it couldn’t recognize a cat or understand everyday language. In the 1970s these disappointments led to the first “AI winter,” a period of waning enthusiasm and funding. Still, the setbacks forced researchers to question their assumptions and search for better approaches.

The Power of Learning: Birth of Machine Learning

A stunning insight emerged: intelligence isn’t just about following fixed rules—it’s about learning from experience. In the late 1950s, Frank Rosenblatt introduced the perceptron, a primitive neural network designed to mimic the way the human brain processes information. The perceptron learned to recognize patterns by adjusting its internal weights, much like neurons in the brain strengthen connections through repeated use. Though limited by the era’s technology, this idea planted the seeds for machine learning—a field focused on building systems that improve as they encounter more data.
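
The weight-adjustment idea can be shown with a small Python sketch of the classic perceptron learning rule, here trained on the logical AND function. The names `train_perceptron`, `predict`, and `AND_SAMPLES`, along with the learning rate and epoch count, are assumptions made for this example and do not describe Rosenblatt's original hardware.

```python
def predict(weights, bias, inputs):
    """Output 1 if the weighted sum of the inputs exceeds zero, else 0."""
    activation = sum(w * x for w, x in zip(weights, inputs)) + bias
    return 1 if activation > 0 else 0


def train_perceptron(samples, epochs=20, learning_rate=1.0):
    """Learn weights and a bias from (inputs, target) pairs."""
    weights = [0.0] * len(samples[0][0])
    bias = 0.0
    for _ in range(epochs):
        for inputs, target in samples:
            # The error is +1, 0, or -1; weights change only on a mistake.
            error = target - predict(weights, bias, inputs)
            weights = [w + learning_rate * error * x
                       for w, x in zip(weights, inputs)]
            bias += learning_rate * error
    return weights, bias


# Training data for logical AND: the output is 1 only when both inputs are 1.
AND_SAMPLES = [((0, 0), 0), ((0, 1), 0), ((1, 0), 0), ((1, 1), 1)]

weights, bias = train_perceptron(AND_SAMPLES)
for inputs, target in AND_SAMPLES:
    print(inputs, "->", predict(weights, bias, inputs))
```

No one tells the program the rule for AND. It starts with all weights at zero and nudges them each time it makes a mistake, until every example is classified correctly.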

From Symbolic Logic to Neural Networks

The contrast between rule-based AI and learning machines soon became a defining battle. Symbolic AI, with its clear rules and logic, excelled at tasks requiring precise reasoning. But neural networks, inspired by biology, promised to tackle fuzzier, more intuitive problems, like recognizing voices or understanding images. Early neural networks struggled due to limited computing power and theoretical roadblocks: in 1969, Marvin Minsky and Seymour Papert showed that a single-layer perceptron cannot learn even a simple function such as XOR. Critics dismissed neural networks, but a small group of enthusiasts pushed forward, convinced that learning systems held untapped potential. Their perseverance would one day pay off in spectacular ways.

The Data Explosion: Fuel for Modern AI

In the 21st century, the world was suddenly awash in digital data: photos, texts, videos, and sensor readings. This explosion of information became the lifeblood of AI. Algorithms could now learn from billions of examples, finding patterns far too subtle for any human to program by hand. Advances in hardware, especially powerful graphics processing units (GPUs), made it practical to train deep neural networks with many layers and millions of parameters. Computers could now recognize faces and translate languages with useful accuracy, and even generate art. The age of “big data” had arrived, catapulting AI to new heights.

Deep Learning: Machines That See, Hear, and Create

Deep learning, a breakthrough form of machine learning, redefined what machines could do. Inspired by the human brain’s layered structure, deep networks process raw input—like pixels or sounds—through many interconnected layers, each learning increasingly complex features. This approach led to astonishing achievements: AI systems beat world champions at Go, composed convincing music, and diagnosed diseases from medical images. Deep learning’s success wasn’t just technical—it was emotional. People marveled at machines that could paint, write poetry, or hold a conversation, blurring the boundary between tool and creator.
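
The idea of passing input through stacked layers can be sketched in Python with a tiny two-layer network that computes XOR, a function a single perceptron cannot represent. The weights below are hand-picked for illustration, whereas a real deep network learns its weights from data; the names `dense_layer`, `forward`, and `XOR_LAYERS` are assumptions made for this example.

```python
def step(value):
    """Threshold activation: 1 for a positive input, otherwise 0."""
    return 1 if value > 0 else 0


def dense_layer(inputs, weights, biases):
    """Compute one layer: each row of weights produces one output unit."""
    return [step(sum(w * x for w, x in zip(row, inputs)) + b)
            for row, b in zip(weights, biases)]


def forward(inputs, layers):
    """Feed the inputs through every layer in order."""
    activations = list(inputs)
    for weights, biases in layers:
        activations = dense_layer(activations, weights, biases)
    return activations


# Hand-picked (not learned) weights. The hidden layer computes OR and AND;
# the output layer fires when OR is true and AND is false, which is XOR.
XOR_LAYERS = [
    ([[1, 1], [1, 1]], [-0.5, -1.5]),  # hidden units: OR, AND
    ([[1, -1]], [-0.5]),               # output unit: OR and not AND
]

xor_outputs = {pair: forward(pair, XOR_LAYERS)[0]
               for pair in [(0, 0), (0, 1), (1, 0), (1, 1)]}
print(xor_outputs)
```

The hidden layer turns raw inputs into simple intermediate features, and the output layer combines those features into something neither could express alone. Deep networks repeat this pattern across many layers, with the features learned rather than written by hand.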

AI in the Real World: From Labs to Everyday Life

Today, AI is woven into the fabric of daily life. Smart assistants answer questions, recommend music, and control home devices. In medicine, AI helps doctors detect cancer earlier and match treatments to patients’ unique genetics. Self-driving cars navigate city streets, and translation apps bridge language barriers. Even in nature, AI-powered drones track endangered species and monitor forests for signs of fire. The technology’s reach is astonishing, touching everything from entertainment to environmental protection. Each advance brings new wonders—and new ethical challenges.

The Ethical Frontier: Risks, Hopes, and Human Values

With great power comes great responsibility. As AI’s abilities grow, so do concerns about privacy, fairness, and bias. Machines trained on flawed data can reinforce stereotypes or make unfair decisions. Autonomous weapons and surveillance tools raise chilling questions about control and accountability. At the same time, AI offers hope—tools to fight disease, reduce poverty, and confront climate change. The future of AI depends not just on algorithms, but on the values we embed within them. The conversation is no longer about what machines can do, but what they should do.

Looking Ahead: The Endless Quest for Intelligence

The journey of artificial intelligence is far from over. From the first dreams of mechanical minds to today’s learning algorithms, AI has mirrored our own search for understanding. Each breakthrough sparks new questions and deeper mysteries. Will machines ever truly think, feel, or understand as we do? How can we build AI that reflects the best of humanity, not just its flaws? The path ahead is as thrilling as it is uncertain, inviting us all to participate in the next chapter of this remarkable story.