What if the smartest machines we’ve ever created can’t tell the difference between half the world’s population? Imagine a future where artificial intelligence systems, entrusted with making crucial decisions about jobs, healthcare, and countless aspects of daily life, systematically misunderstand or completely ignore entire communities simply because they never learned to see them properly. This isn’t science fiction—it’s happening right now, and we’re standing at a crossroads that could determine whether AI becomes humanity’s greatest equalizer or its most sophisticated form of discrimination.

The Hidden Crisis in AI Development

Right now, as you’re reading this, artificial intelligence systems are making thousands of decisions that affect real people’s lives. They’re deciding who gets hired, who receives medical diagnoses, and whose voices get heard online. But here’s the shocking reality: in one study of AI systems, 44 per cent showed gender bias, and 25 per cent exhibited both gender and racial bias. These aren’t just numbers in a research paper: they represent millions of people whose opportunities and well-being hang in the balance. What’s even more alarming is that “AI is mostly developed by men and trained on datasets that are primarily based on men,” creating a feedback loop that amplifies existing inequalities. When women use some AI-powered medical diagnostic systems, they can receive inaccurate answers because the AI has not learned how symptoms may present differently in women. The problem runs so deep that it’s built into the foundation of how these systems learn.

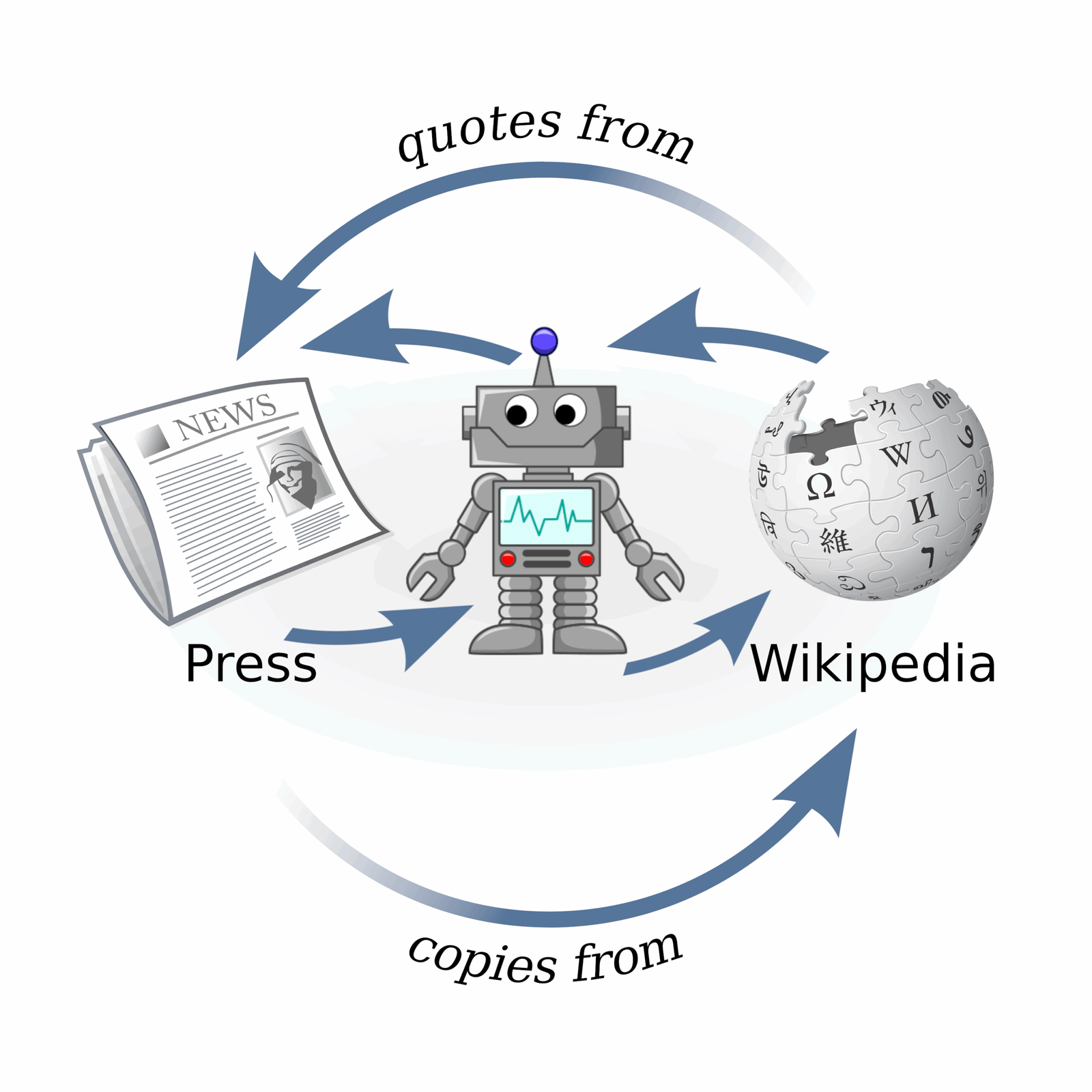

When Machines Learn Our Worst Habits

Think of AI as an incredibly powerful but naive student that absorbs everything it’s taught without questioning whether those lessons are fair or accurate. “AI systems, learning from data filled with stereotypes, often reflect and reinforce gender biases,” explains leading expert Zinnya del Villar. Picture this scenario: an AI system learns to make hiring decisions by studying thousands of resumes from the past decade. If most of those examples show men in leadership roles and women in support positions, the AI assumes that’s the natural order of things. If most of those examples carry conscious or unconscious bias – for example, showing men as scientists and women as nurses – the AI may interpret that men and women are better suited for certain roles and make biased decisions when filtering applications. It’s like teaching a child about the world using only magazines from the 1950s and then expecting them to understand modern equality.

The Real-World Consequences We Can’t Ignore

The impact of biased AI goes far beyond theoretical concerns: it’s actively reshaping people’s lives in troubling ways. “In critical areas like healthcare, AI may focus more on male symptoms, leading to misdiagnoses or inadequate treatment for women,” warns del Villar. Meanwhile, “Voice assistants defaulting to female voices reinforce stereotypes that women are suited for service roles, and language models like GPT and BERT often associate jobs like ‘nurse’ with women and ‘scientist’ with men.” Amazon learned this lesson the hard way when it had to scrap its AI recruiting tool because it was trained on resumes from the past decade, predominantly written by male applicants. Consequently, the AI model downgraded resumes that included the word “women” or mentioned all-women’s colleges. These aren’t glitches in the system; they’re features that emerge when we don’t actively design for inclusivity.

The Data Desert: Where Women Disappear

One of the most fundamental problems facing AI development is what researchers call the “data gap”: the gender digital divide leaves women underrepresented in the data AI learns from, and that gap is reflected in gender bias in AI. Consider this startling fact: in low-income countries, only 20 per cent of women are connected to the internet. When entire populations are missing from the digital landscape, AI systems can’t learn about their experiences, needs, or perspectives. It’s like trying to understand global cuisine by only studying recipes from one neighborhood. In a world where nearly 2.6 billion people remain offline, the datasets underpinning AI systems don’t yet reflect the full diversity of human experience. There are over 7,000 languages spoken in the world, yet most AI chatbots are trained on around 100 of them. This isn’t just about representation; it’s about creating technology that actually works for everyone.

Beyond Binary: Understanding the Full Spectrum

Gender diversity in AI isn’t just about ensuring equal representation of men and women—it’s about recognizing and accommodating the full spectrum of human gender identity and expression. Traditional AI systems often operate on binary assumptions that don’t reflect the complexity of real human experience. The pervasive presence and wide-ranging variety of artificial intelligence (AI) systems underscore the necessity for inclusivity and diversity in their design and implementation, to effectively address critical issues of fairness, trust, bias, and transparency. When AI systems are designed with narrow definitions of gender, they exclude non-binary, transgender, and gender-fluid individuals from their understanding of the world. This exclusion isn’t just unfair—it makes the AI less intelligent and less capable of serving diverse human needs. The goal isn’t to overcomplicate systems, but to build them with the flexibility and nuance that human identity actually requires.

The Training Revolution: Teaching Machines to See Everyone

The solution to biased AI isn’t just throwing more data at the problem; it’s about fundamentally changing how we approach training these systems. “To reduce gender bias in AI, it’s crucial that the data used to train AI systems is diverse and represents all genders, races, and communities,” emphasizes del Villar. In practice, that means implementing bias-checking algorithms and ensuring the training dataset is diverse: the more accurate and diverse the training dataset is, the better the model can perform. But diversity isn’t just about numbers. It’s about actively seeking out and including perspectives that have been historically marginalized. “We need to stop thinking that if you just collect a ton of raw data, that is going to get you somewhere. We need to be very careful about how we design datasets in the first place,” explains MIT researcher Xavier Boix. It’s like the difference between randomly grabbing ingredients for a meal and carefully selecting each component to create something balanced and nutritious.
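One simple form of the dataset check described above is a representation audit: count how often each group appears in the training data and flag groups that fall below a chosen share. The sketch below is illustrative only. The function name `representation_report`, the `gender` field, the toy records, and the 20 per cent threshold are all assumptions made for this example, not part of any cited method.

```python
from collections import Counter


def representation_report(records, attribute, min_share=0.2):
    """Return each group's share of the dataset and whether it is below min_share."""
    counts = Counter(record[attribute] for record in records)
    total = sum(counts.values())
    return {
        group: {
            "share": count / total,
            "underrepresented": count / total < min_share,
        }
        for group, count in counts.items()
    }


# Hypothetical toy dataset: 7 men, 2 women, 1 non-binary person.
records = (
    [{"gender": "man"}] * 7
    + [{"gender": "woman"}] * 2
    + [{"gender": "non-binary"}] * 1
)

report = representation_report(records, "gender")
for group, stats in report.items():
    print(f"{group}: share={stats['share']:.0%}, "
          f"underrepresented={stats['underrepresented']}")
```

A check like this only covers the "numbers" side of diversity. It cannot tell whether the examples for a group are stereotyped or low quality, which is why careful dataset design still matters.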

The Human Touch: Why Diverse Teams Matter

You can’t build inclusive AI without inclusive teams. “Additionally, AI systems should be created by diverse development teams made up of people from different genders, races, and cultural backgrounds. This helps bring different perspectives into the process and reduces blind spots that can lead to biased AI systems.” The statistics are sobering: women account for just 8% of Chief Technology Officers (CTOs) in the US, and a similar figure in financial services organisations globally. CTOs’ decisions on the use of AI are shaping tomorrow’s workplace – and input from women is lacking, meaning less account is taken of their views, needs and experiences. It’s like trying to design a house without asking half the people who will live there what they need. When there is a lack of diversity in the workforce, it is bound to have an impact. There’s a risk that systems can mirror existing societal biases related to gender. Building diverse teams isn’t just about fairness—it’s about building better, smarter AI.

Breaking the Bias Loop: Detection and Prevention

Identifying bias in AI systems requires sophisticated tools and ongoing vigilance. Addressing bias in AI requires a multifaceted approach that includes three practices. First, diverse and representative data: ensuring training datasets include a wide range of perspectives and demographics. Second, bias detection tools: using fairness metrics, adversarial testing, and explainable AI techniques to identify and rectify bias. Third, continuous monitoring: regularly auditing AI systems after deployment to detect emerging biases and improve fairness. Think of it like regular health checkups for AI systems: you can’t just build them and forget about them. Models need to be monitored for bias over time, because the outcome of ML algorithms can change as they learn or as training data changes. The most insidious part about AI bias is that it often emerges gradually, as systems learn from new data or as societal contexts shift. Even if a model appears unbiased during training, biases can still emerge when it is deployed in real-world applications. If the system is not tested with diverse inputs or monitored for bias after deployment, it can lead to unintended discrimination or exclusion. Addressing bias requires a continuous feedback loop, where AI models are regularly evaluated and updated based on real-world interactions and new data.
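To make "fairness metrics" and "continuous monitoring" concrete, here is a minimal sketch of one common metric, the demographic parity gap (the difference in positive-decision rates between groups), recomputed for each batch of decisions after deployment. It is one of several possible fairness metrics, not a complete audit. The batch data, the group labels, and the 0.1 alert threshold are hypothetical values chosen for illustration.

```python
from collections import defaultdict


def selection_rates(predictions, groups):
    """Fraction of positive (1) predictions for each group."""
    positives = defaultdict(int)
    totals = defaultdict(int)
    for pred, group in zip(predictions, groups):
        totals[group] += 1
        positives[group] += int(pred == 1)
    return {group: positives[group] / totals[group] for group in totals}


def demographic_parity_gap(predictions, groups):
    """Largest difference in selection rate between any two groups."""
    rates = selection_rates(predictions, groups)
    return max(rates.values()) - min(rates.values())


def audit(predictions, groups, max_gap=0.1):
    """Flag a batch whose parity gap exceeds the (assumed) threshold."""
    gap = demographic_parity_gap(predictions, groups)
    return {"gap": gap, "flagged": gap > max_gap}


# Hypothetical monthly batches of shortlisting decisions (1 = shortlisted).
group_labels = ["men"] * 4 + ["women"] * 4
batches = {
    "month_1": [1, 0, 1, 0, 1, 0, 1, 0],  # both groups shortlisted at 50%
    "month_2": [1, 1, 1, 0, 1, 0, 0, 0],  # men 75%, women 25%
}

results = {month: audit(preds, group_labels) for month, preds in batches.items()}
for month, result in results.items():
    print(month, result)
```

Running the same audit on every new batch is what turns a one-time fairness check into the ongoing health checkup described above: the second batch is flagged even though the first one passed.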

Success Stories: When AI Gets It Right

Despite the challenges, there are inspiring examples of AI being used to advance gender equality rather than hinder it. In finance, AI is helping overcome long-standing gender biases in credit scoring, as seen with companies like Zest AI, which use machine learning to make fairer credit assessments. AI is also improving women entrepreneurs’ access to loans and other microfinance services, particularly in underserved areas. In education, AI has been used to reveal the disparity in enrollment rates between men and women on platforms such as Coursera and edX and to uncover biases in textbooks, helping educators revise learning materials to be more inclusive. AI, with the right guardrails on development and accountability, can help minimise human biases that can arise in recruitment, promotion, and other talent management decisions. Organisations are using AI to design their people systems in ways that help minimise the impact of the biases that have long held women back. These successes show that when we intentionally design AI for equality, it can become a powerful force for positive change.

The Transparency Challenge: Opening the Black Box

One of the biggest obstacles to creating fair AI is the “black box” problem: AI systems make decisions in ways that even their creators don’t fully understand. Like any data-driven tool, AI algorithms depend on the quality of the data used to train the model. All too often, AI models effectively operate as a “black box” whose decisions are not widely understood. This makes bias much harder to spot and address, and reduces trust more broadly. Imagine if a doctor prescribed medication but couldn’t explain why, or if a judge made a ruling without providing reasoning. Encouraging transparency in AI decision-making, so that users can understand potential biases, is crucial for building systems that people can trust and verify. Helping people understand how AI works and where bias can enter empowers them to recognize and prevent biased systems, and keeps human oversight in decision-making processes. Transparency isn’t just about technical documentation; it’s about making AI understandable and accountable to the people whose lives it affects.

Global Initiatives: A Worldwide Movement

The push for gender-inclusive AI is gaining momentum across the globe, with organizations and governments recognizing the urgency of this challenge. The UN Women AI School, an innovative learning program designed to equip gender equality advocates with the knowledge and skills to harness AI for social change, advocacy, and organizational transformation, represents a major step forward in building capacity for inclusive AI development. The Harvard Radcliffe Institute conference will explore present and future challenges and opportunities posed by the intersections of gender and artificial intelligence (AI). Leading computer scientists, engineers, policy makers, ethicists, artists, and representatives from the private sector will investigate how gender may affect, positively and negatively, the creation, operation, and impact of cutting-edge technologies using AI. These initiatives create communities of practice where researchers, activists, and technologists can collaborate on solutions. AI has the potential to both increase and reduce social inequality. To ensure AI generates more social good than harm, the people affected by this technology must be engaged in its development and deployment. Partnership on AI’s Inclusive Research and Design Program provides practical resources for how AI can be created with communities, not just for them.

The Economic Imperative: Why Inclusion Pays

Building gender-inclusive AI isn’t just morally right—it’s economically smart. The rapid progress of generative AI could be a turning point as the scarcity of AI talent may entice employers to expand their talent pool by including groups that have previously been overlooked, like women. Rather than missing out on half the available talent, this shift will enable employers to build early mover advantages around AI. When AI systems work effectively for everyone, they create larger markets and more opportunities for innovation. Artificial intelligence may be driving the Fourth Industrial Revolution, but it needs to be democratized so everyone can access and use it. The question facing developers, business leaders and politicians is: How do we ensure that the growth of AI doesn’t leave people behind and is truly inclusive? Companies that invest in inclusive AI development today are positioning themselves to serve broader markets and build more sustainable businesses tomorrow. With the potential to contribute $500 billion to the economy by 2025, AI stands to revolutionize key sectors such as agriculture, healthcare, urban planning and manufacturing. However, realizing this promise requires not only technological advancements but also robust frameworks and equitable access to resources.

Building the Infrastructure for Inclusive AI

Creating truly inclusive AI requires more than good intentions—it demands fundamental changes to how we build and deploy these systems. AI must be built on inclusive, representative data — and that starts with access. Modernizing data infrastructure is essential for scaling AI responsibly and securely. Inclusive AI requires collaboration, governance and a long-term commitment to ethical design. This means investing in digital infrastructure that connects underrepresented communities, developing standards for data collection that prioritize diversity, and creating governance frameworks that hold AI developers accountable for inclusive outcomes. Additionally, it is helpful to track data lineage, meaning monitoring changes in datasets due to new data collection or removal of harmful samples. Knowing the exact data a model was trained on is vital for tracing outputs. Both help identify problematic samples that can be removed or amended. Adhering to these practices can enhance AI system transparency, helping to combat bias, track information leaks, and detect security breaches. It’s like building the plumbing and electrical systems for a house—invisible but essential infrastructure that everything else depends on.
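Data lineage tracking, as described above, can be sketched in a few lines: fingerprint each version of a dataset and log what changed, so a model can later be tied to the exact data it was trained on. This is a minimal illustration under stated assumptions, not a production lineage system. The `fingerprint` helper, the `LineageLog` class, and the sample records are hypothetical names and data introduced for this example.

```python
import hashlib
import json


def fingerprint(records):
    """Order-independent SHA-256 hash of a dataset snapshot."""
    canonical = sorted(json.dumps(record, sort_keys=True) for record in records)
    return hashlib.sha256("\n".join(canonical).encode("utf-8")).hexdigest()


class LineageLog:
    """Append-only log of dataset versions, each linked to its parent."""

    def __init__(self):
        self.entries = []

    def record(self, records, note):
        entry = {
            "version": len(self.entries) + 1,
            "fingerprint": fingerprint(records),
            "num_records": len(records),
            "note": note,
            "parent": self.entries[-1]["fingerprint"] if self.entries else None,
        }
        self.entries.append(entry)
        return entry


# Hypothetical dataset with one sample that is later judged harmful.
data = [
    {"id": 1, "text": "sample a"},
    {"id": 2, "text": "harmful sample"},
    {"id": 3, "text": "sample c"},
]

log = LineageLog()
v1 = log.record(data, "initial collection")

cleaned = [record for record in data if record["id"] != 2]
v2 = log.record(cleaned, "removed harmful sample id=2")

for entry in log.entries:
    print(entry["version"], entry["fingerprint"][:12], entry["note"])
```

Storing the fingerprint alongside a trained model is one way to answer the question "exactly which data produced this output?" when a problematic result needs to be traced back.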

Education: Training the Next Generation

Perhaps the most important investment we can make is in education, both for current AI developers and for the next generation of technologists. As one review of online courses put it: “We found that of 11 courses we reviewed, only five included sections on bias in datasets, and only two contained any significant discussion of bias. I’ve heard lots of stories where people self-study based on these online courses, but at the same time, given how influential they are, how impactful they are, we need to really double down on requiring them to teach the right skillsets, as more and more people are drawn to this AI multiverse.” Teaching inclusive AI development isn’t just about adding a module to existing computer science curricula; it requires rethinking how we approach technology education from the ground up. Despite recent efforts to develop AI curricula and guiding frameworks in AI education, educational opportunities often do not provide equally engaging and inclusive learning experiences for all learners. To promote equality and equity in society and increase competitiveness in the AI workforce, it is essential to broaden participation in AI education. However, a framework that guides teachers and learning designers in designing inclusive learning opportunities tailored for AI education is lacking. We need programs that attract diverse students, curricula that emphasize ethical considerations from day one, and learning environments that model the inclusivity we want to see in AI systems.

The Measurement Challenge: How Do We Know We’re Succeeding?

Creating inclusive AI requires robust methods for measuring progress and identifying areas that need improvement. Examine whether the training dataset is representative and large enough to prevent common biases such as sampling bias. Conduct subpopulation analysis, which involves calculating model metrics for specific groups in the dataset. This can help determine whether model performance is consistent across subpopulations. But measurement goes beyond technical metrics: it requires understanding how AI systems affect different communities in practice. “Context is everything,” said Schwartz, principal investigator for AI bias and one of the report’s authors. “AI systems do not operate in isolation. They help people make decisions that directly affect other people’s lives. If we are to develop trustworthy AI systems, we need to consider all the factors that can chip away at the public’s trust in AI.” This means going beyond laboratory testing to understand how AI performs in real-world contexts, gathering feedback from affected communities, and being willing to make changes when systems don’t live up to their inclusive goals.
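The subpopulation analysis mentioned above can be illustrated with a small sketch that computes accuracy and recall separately for each group instead of one overall score. The labels, predictions, and group names below are made-up toy data, and `metrics_by_group` is a hypothetical helper written for this example.

```python
from collections import defaultdict


def metrics_by_group(y_true, y_pred, groups):
    """Accuracy and recall (true positive rate) computed separately per group."""
    stats = defaultdict(lambda: {"n": 0, "correct": 0, "pos": 0, "true_pos": 0})
    for truth, pred, group in zip(y_true, y_pred, groups):
        s = stats[group]
        s["n"] += 1
        s["correct"] += int(truth == pred)
        if truth == 1:
            s["pos"] += 1
            s["true_pos"] += int(pred == 1)
    return {
        group: {
            "accuracy": s["correct"] / s["n"],
            # Recall is undefined when a group has no positive examples.
            "recall": s["true_pos"] / s["pos"] if s["pos"] else None,
        }
        for group, s in stats.items()
    }


# Hypothetical diagnostic labels: 1 = condition present, 0 = absent.
y_true = [1, 1, 0, 0, 1, 1, 0, 0]
y_pred = [1, 1, 0, 0, 1, 0, 0, 0]
groups = ["men"] * 4 + ["women"] * 4

per_group = metrics_by_group(y_true, y_pred, groups)
for group, metrics in per_group.items():
    print(group, metrics)
```

In this toy data the overall accuracy is 87.5 per cent, which hides the fact that the model misses half of the positive cases among women. Breaking metrics out by group is what surfaces that gap.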