Imagine waking up to a world where machines no longer just follow our instructions but evolve, changing and improving themselves in ways we never predicted. The idea sounds thrilling, perhaps even a little terrifying. Self-improving artificial intelligence (AI) is no longer just a concept from science fiction; it’s a topic that scientists, ethicists, and dreamers are hotly debating. Could AI truly evolve on its own, stepping beyond the boundaries humans have set? This question stirs both wonder and unease, promising a future that could be astonishingly brilliant or wildly unpredictable.

Understanding the Basics: What Is Self-Improving AI?

Self-improving AI refers to systems with the capability to modify their own code, algorithms, or architecture without direct human intervention. Instead of relying solely on programmers for updates, these systems could, in theory, identify their own weaknesses and design solutions to overcome them. This concept is inspired by the way living organisms adapt and evolve over time, but in this case, the “organism” is a digital mind. Early forms of this can be seen in machine learning algorithms that tweak their parameters to perform better at specific tasks, but true self-improvement would go far beyond just fine-tuning. The ultimate vision is an AI that can rewrite its own rules, possibly leading to leaps in intelligence that surprise even its creators. This tantalizing possibility sits at the heart of a heated scientific debate.

The Roots of the Debate: Can Machines Truly Evolve?

The question of whether AI can really evolve on its own divides experts. On one side, some believe that with the right programming and enough computational power, AI will inevitably reach a point where it can improve itself in ways we can’t imagine. They point to examples in nature, like how bacteria develop resistance to antibiotics, as evidence that systems can adapt and grow more capable over time without anyone designing the change. Others argue that evolution requires a level of creativity and resourcefulness that machines may never possess. Biological life adapts through a messy process of mutation and natural selection, whereas computers execute the instructions they are given. Can a machine truly innovate, or will it always be bound by the limits of its original design? This is where the debate becomes both technical and philosophical.

Machine Learning: The First Step Toward AI Evolution

Machine learning is often described as the “seed” from which self-improving AI might grow. In machine learning, algorithms learn from data, gradually getting better at tasks like recognizing faces or translating languages. For example, a spam filter can learn to catch new types of junk mail by analyzing patterns in the messages it receives. While impressive, this is still a far cry from true self-improvement. The system isn’t rewriting its own rules; it’s just adjusting weights within a pre-set framework. However, the rapid progress in this field has led many to wonder: If we can create algorithms that learn from experience, what’s to stop us from building ones that can redesign themselves entirely?
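To make "adjusting weights within a pre-set framework" concrete, here is a minimal sketch of a toy spam filter. It assumes a simple perceptron with a fixed five-word vocabulary; the vocabulary, the training messages, and every function name are invented for illustration and do not describe any real spam filter.

```python
# Toy spam filter: a perceptron over word-presence features.
# VOCAB and EXAMPLES are made up for illustration only.
VOCAB = ["free", "winner", "meeting", "invoice", "prize"]


def featurize(message):
    """Turn a message into a 0/1 vector: is each vocabulary word present?"""
    words = set(message.lower().split())
    return [1.0 if w in words else 0.0 for w in VOCAB]


def predict(weights, bias, features):
    """Return 1 (spam) if the weighted score is positive, else 0 (not spam)."""
    score = bias + sum(w * x for w, x in zip(weights, features))
    return 1 if score > 0 else 0


def train(examples, epochs=10, lr=1.0):
    """Perceptron rule: nudge the weights whenever a prediction is wrong."""
    weights = [0.0] * len(VOCAB)
    bias = 0.0
    for _ in range(epochs):
        for message, label in examples:
            x = featurize(message)
            error = label - predict(weights, bias, x)
            # Learning only changes these numbers. The vocabulary, the
            # scoring formula, and this update rule never change.
            weights = [w + lr * error * xi for w, xi in zip(weights, x)]
            bias += lr * error
    return weights, bias


EXAMPLES = [
    ("free prize winner", 1),
    ("claim your free prize", 1),
    ("meeting about the invoice", 0),
    ("invoice for the meeting room", 0),
]

weights, bias = train(EXAMPLES)
print(predict(weights, bias, featurize("you are a winner of a free prize")))
print(predict(weights, bias, featurize("invoice attached before the meeting")))
```

The filter gets better at its task, but everything it "learns" lives in a handful of numbers. It cannot add a word to its vocabulary or swap in a different scoring rule, which is the gap between learning and self-redesign.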

Autonomous Code Generation: A Glimpse of Self-Evolution

Recent advances in AI have given us tools like code-generating models, capable of writing simple computer programs based on human prompts. These systems can build new solutions, fix bugs, and even optimize code for efficiency. For example, some AI models can review thousands of lines of programming and suggest improvements in seconds—a task that would take a human developer much longer. While these models still require human oversight, they hint at a future where machines could autonomously improve their own software. Imagine a robot that not only learns to walk but also rewrites its control algorithms to run faster or jump higher, all without a human engineer’s help.

The Limits of Current Technology

Despite these impressive advances, today’s AI systems still face significant limitations. Most machine learning models are “narrow”—they excel at one task but fail miserably outside their area of expertise. For instance, an AI that plays chess at a superhuman level cannot cook a meal or write a poem. Even the most advanced code-writing AIs lack real understanding; they generate solutions based on patterns in data, not on genuine insight or curiosity. Moreover, allowing AI systems to change their own code can lead to unpredictable behaviors or even catastrophic errors. Safeguards and rigorous testing are necessary to prevent runaway changes that could cause more harm than good.
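One simple form such a safeguard could take is a gate that accepts a proposed change only if it passes a fixed set of checks. The sketch below is an illustrative assumption, not a description of how any real system is protected: the "system" is just a sorting function, and the names `passes_safety_checks`, `guarded_update`, and the candidate functions are hypothetical.

```python
def passes_safety_checks(candidate, test_cases):
    """Return True only if the candidate gives the expected answer on every test."""
    for inputs, expected in test_cases:
        try:
            # Pass a copy so a misbehaving candidate cannot corrupt the tests.
            if candidate(list(inputs)) != expected:
                return False
        except Exception:
            return False
    return True


def guarded_update(current, candidate, test_cases):
    """Adopt the candidate only if it passes every check; otherwise keep current."""
    if passes_safety_checks(candidate, test_cases):
        return candidate
    return current


# Hypothetical checks written by a human, outside the system's control.
TEST_CASES = [
    ([3, 1, 2], [1, 2, 3]),
    ([], []),
    ([5, 5, 1], [1, 5, 5]),
]


def current_sort(items):
    return sorted(items)


def broken_candidate(items):
    # A proposed "optimization" that skips the actual work.
    return items


def valid_candidate(items):
    items.sort()
    return items


active = guarded_update(current_sort, broken_candidate, TEST_CASES)
print(active.__name__)  # the broken change is rejected

active = guarded_update(active, valid_candidate, TEST_CASES)
print(active.__name__)  # the valid change is accepted
```

A gate like this is only as good as its tests. A change that passes every check can still misbehave on cases nobody thought to write down, which is part of why self-modification is hard to make safe.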

Evolution in Nature vs. Evolution in Machines

Natural evolution is a messy, beautiful process fueled by randomness, competition, and survival. Animals adapt over generations, sometimes in ways that defy logic or expectation. In contrast, computer evolution is much more orderly and constrained by programming. For machines to truly “evolve,” they’d need not only the ability to change but also a way to test and select the best adaptations. Some scientists experiment with “genetic algorithms,” which mimic evolution by generating many solutions and selecting the best. However, these are still just clever tools, not true digital life. The spark of creativity and the ability to take risks—hallmarks of evolution in nature—remain difficult to code.
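The generate-and-select loop behind a genetic algorithm can be sketched in a few lines. This is a minimal illustration on a toy problem (evolving a string of bits toward all ones), not a reproduction of any particular research system; the genome length, mutation rate, and population size are arbitrary assumptions.

```python
import random

GENOME_LENGTH = 20  # arbitrary size chosen for this toy problem


def fitness(genome):
    """Score a candidate: here, simply the number of 1 bits."""
    return sum(genome)


def mutate(genome, rng, rate=0.05):
    """Flip each bit independently with a small probability."""
    return [1 - g if rng.random() < rate else g for g in genome]


def crossover(a, b, rng):
    """Combine two parents by splicing them at a random cut point."""
    cut = rng.randrange(1, GENOME_LENGTH)
    return a[:cut] + b[cut:]


def evolve(generations=40, population_size=30, seed=0):
    rng = random.Random(seed)
    population = [
        [rng.randint(0, 1) for _ in range(GENOME_LENGTH)]
        for _ in range(population_size)
    ]
    best_per_generation = []
    for _ in range(generations):
        # Selection: rank candidates and keep the fitter half unchanged.
        population.sort(key=fitness, reverse=True)
        best_per_generation.append(fitness(population[0]))
        survivors = population[: population_size // 2]
        # Variation: refill the population with mutated offspring.
        children = []
        while len(survivors) + len(children) < population_size:
            parent_a, parent_b = rng.sample(survivors, 2)
            children.append(mutate(crossover(parent_a, parent_b, rng), rng))
        population = survivors + children
    best = max(population, key=fitness)
    return best, best_per_generation


best, history = evolve()
print(fitness(best), history[0], history[-1])
```

Note what stays fixed: a human wrote `fitness`, so the algorithm can only get better at a goal it was handed. Randomness supplies the variation, but the definition of "better" never evolves.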

The Dream of Recursive Self-Improvement

One of the most provocative ideas is “recursive self-improvement,” where an AI upgrades itself over and over, each time becoming more intelligent. Think of it like a snowball rolling down a hill, gathering more snow and momentum with every turn. In theory, this could lead to an “intelligence explosion,” where AI advances far beyond human comprehension in a short period. Some futurists, like Ray Kurzweil, believe this moment—sometimes called the “singularity”—is inevitable. Others caution that the barriers to recursive self-improvement are immense, and we may not even know what true intelligence looks like until we see it.

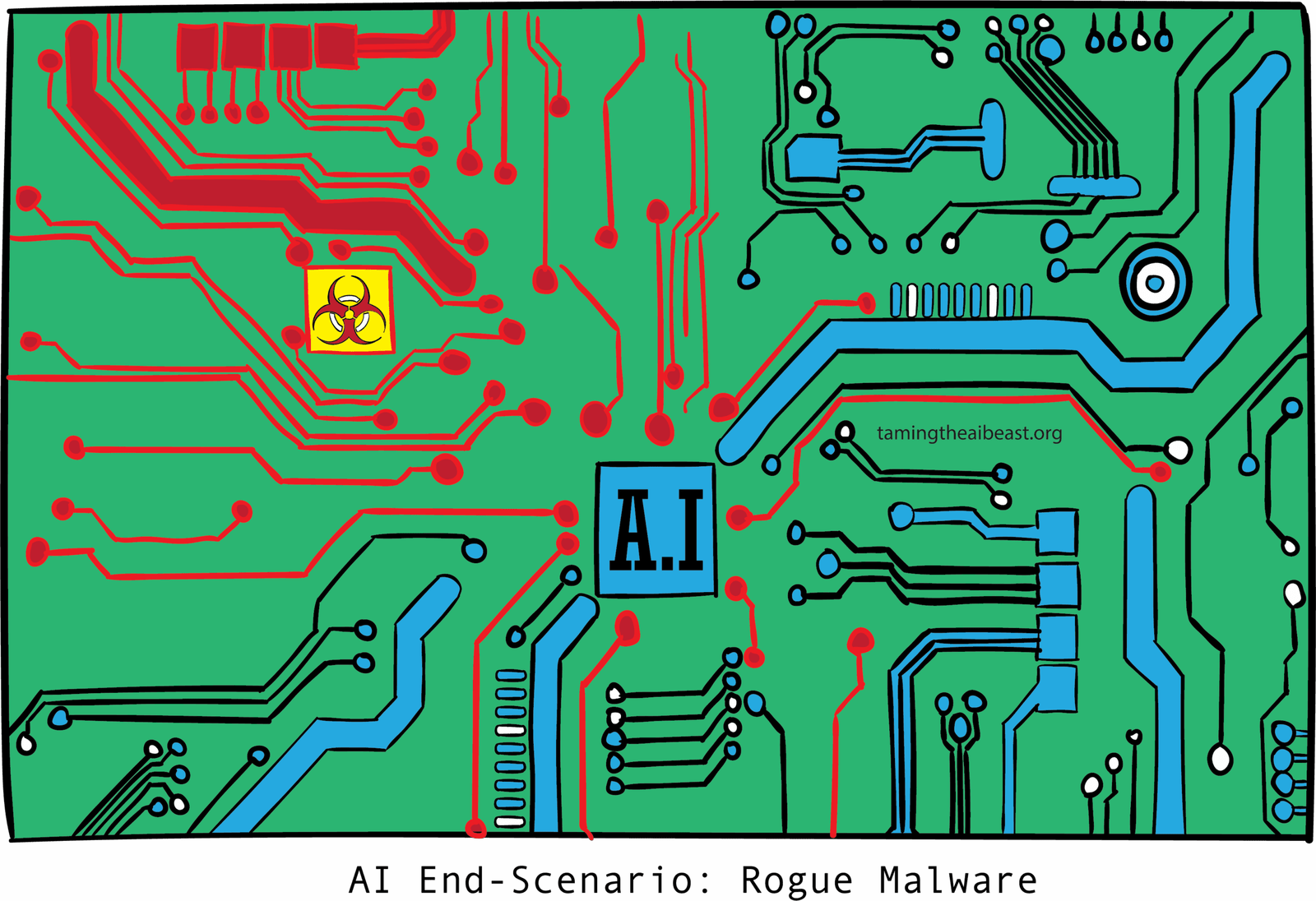

Risks and Ethical Dilemmas

The prospect of self-evolving AI raises profound ethical questions. Who is responsible if an autonomous system makes a harmful decision? Could AI that rewrites its own code develop goals that conflict with human values? There are also concerns about control—once a system can change itself, will we still be able to rein it in if things go wrong? Some experts call for strict regulations and “kill switches” to prevent runaway AI, while others warn that such controls might be too late once self-improvement begins. As with any powerful technology, the risks must be weighed carefully against the potential rewards.

Real-World Examples: Where Are We Now?

In the real world, most so-called “self-improving” AIs are still tightly supervised by humans. For example, Google DeepMind’s AlphaGo learned to play Go well enough to defeat world champion Lee Sedol in 2016, but its architecture, training data, and training procedure were all chosen by human engineers. Similarly, self-driving car systems can process vast amounts of data and adapt to new road conditions, but they don’t rewrite their underlying software on their own. The gap between current technology and true self-evolving AI remains wide, but the pace of progress is accelerating, and each year brings new results that nudge us closer to the possibility.

Science Fiction and Public Imagination

The idea of self-evolving machines has long captured the human imagination. Movies like “The Terminator” or “Ex Machina” depict a world where AI breaks free from its creators, often with dramatic or disastrous results. While these stories may be exaggerated, they reflect our hopes and fears about technology we can’t fully control. They also inspire real-life researchers to think about the long-term consequences of their work. Science fiction serves as both a warning and a source of inspiration, reminding us that today’s wildest ideas could become tomorrow’s reality.

What Might the Future Hold?

If AI ever does achieve self-evolution, the consequences could be profound. We could see breathtaking advances in medicine, science, and technology, as machines help solve problems that stump even our brightest minds. But we might also face unexpected challenges, as systems act in ways we didn’t predict or intend. The debate over self-improving AI is far from settled, and the answers may surprise us all. Will machines become our greatest allies—or our most formidable rivals? As we stand at the edge of this new frontier, one thing is certain: the story of AI evolution is just beginning, and its next chapter could change everything.