Imagine a world where your job application is rejected, not because of your skills, but because an invisible program silently judged you based on your name or ZIP code. This isn’t a plot from a dystopian novel—it’s happening right now, behind the screens of banks, hospitals, and even police departments. The idea that an algorithm—a sequence of logical steps written by humans but executed by machines—can be racist sounds shocking, even absurd. But as artificial intelligence grows more powerful and pervasive, the unsettling truth is that our digital helpers can inherit, amplify, and even create new forms of bias. It’s a problem that affects millions and stirs both outrage and urgent debate in today’s tech-driven society.

What Is Algorithmic Bias?

Algorithmic bias occurs when computer systems or AI models make decisions that systematically disadvantage certain groups of people. This bias can creep in at any stage of development, from the way data is collected to the way results are interpreted. While algorithms themselves are not sentient and don’t “choose” to discriminate, their outputs can reflect, reinforce, or even worsen existing inequalities. Imagine an AI sorting through loan applications: if it’s trained on historical data that favored certain demographics, it might learn to replicate those patterns—thus continuing a cycle of unfairness. This is not just a theoretical issue; it has real-world consequences for people’s lives, opportunities, and dignity.

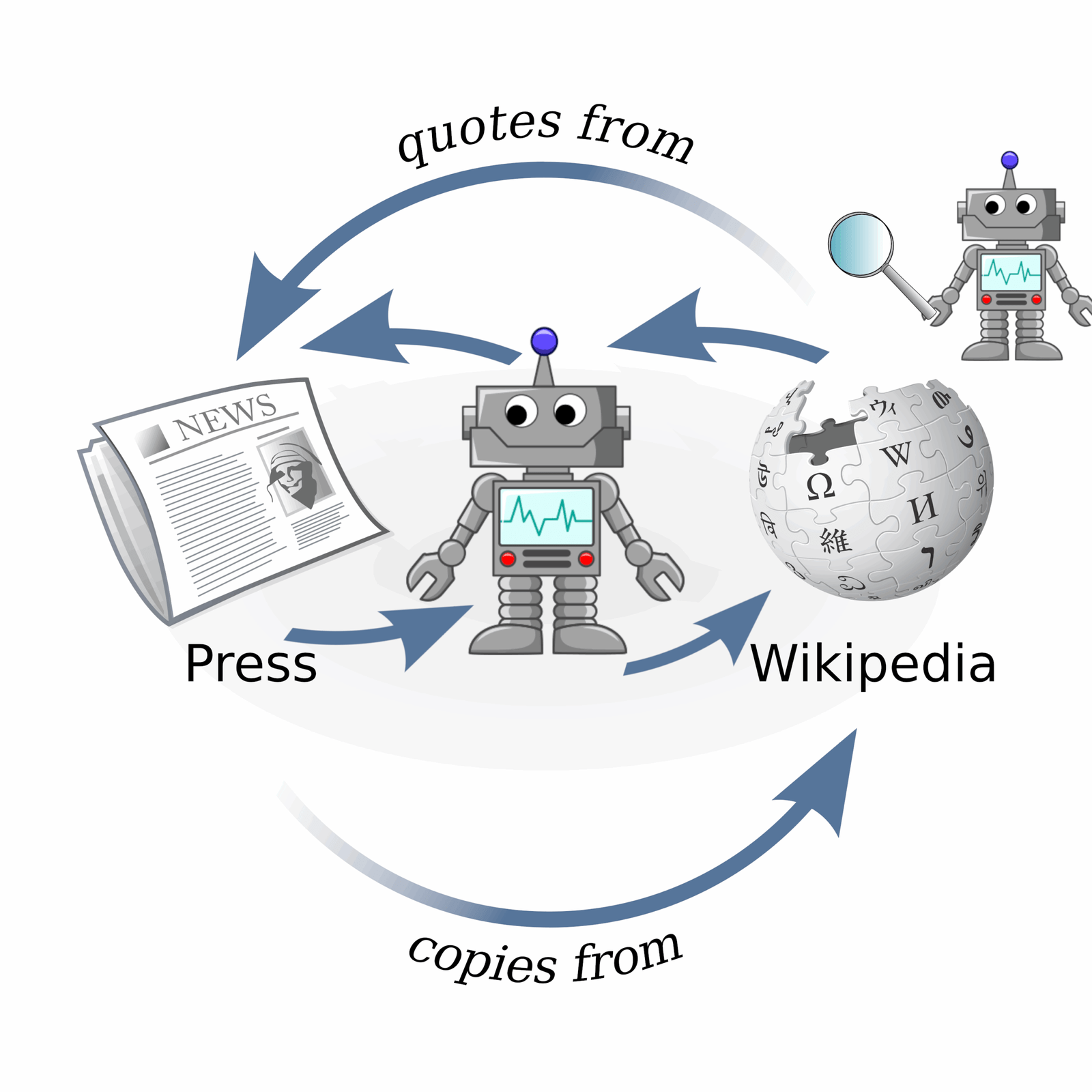

How Does Bias Enter AI Systems?

The roots of bias in AI often lie in the data used to train these systems. Data is a reflection of history, culture, and sometimes prejudice. For instance, if a facial recognition system is trained mostly on images of light-skinned faces, it is likely to make more errors on darker-skinned faces. The choices made by engineers (what data to include, which features to emphasize, and how to label information) can all embed bias, even unintentionally. A lack of diverse perspectives within tech teams can also create blind spots, making it easy to overlook potential sources of discrimination. This is why biased AI is often described as a “mirror” that reflects the imperfections of our society back at us.

Infamous Cases of Algorithmic Discrimination

There have been several widely reported examples where algorithms produced biased or even racist outcomes. In one high-profile case, reported by Reuters in 2018, an experimental hiring tool developed at Amazon favored male applicants because it was trained on resumes submitted over a decade, mostly by men. Another notorious example comes from the criminal justice system: a 2016 ProPublica analysis of the COMPAS risk assessment tool used by courts found that Black defendants who did not go on to reoffend were nearly twice as likely as white defendants to have been labeled higher risk. Even seemingly neutral systems, like online advertising algorithms, have been found to show high-paying job ads more frequently to men than to women. These stories highlight the urgent need for transparency and fairness in AI.

Why “Colorblind” Algorithms Still Discriminate

It might seem logical to simply remove sensitive information like race or gender from datasets, but this doesn’t erase bias. Machine learning models are built to find any pattern that predicts the outcome, so they readily pick up on proxies for race, such as ZIP codes, income levels, or even hobbies. This means that even if race isn’t explicitly included, the algorithm can still learn to treat people differently based on characteristics that correlate with it. The result is a system that appears neutral on the surface but reproduces the same patterns of discrimination it was supposed to eliminate. Achieving true fairness requires deeper scrutiny and more sophisticated solutions.
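
To make the proxy effect concrete, here is a minimal Python sketch. Everything in it is invented for illustration: the ZIP codes, the groups “A” and “B”, the historical records, and the toy “model,” which simply memorizes the past approval rate for each ZIP code. The model never reads the group label, yet its approvals still split sharply along group lines because group and ZIP code are correlated in the made-up data.

```python
from collections import defaultdict

# Hypothetical historical records: (zip_code, group, approved).
# The group label is kept only so the result can be audited afterward.
HISTORY = (
    [("10001", "A", True)] * 40 + [("10001", "A", False)] * 10
    + [("10001", "B", True)] * 5 + [("10001", "B", False)] * 5
    + [("20002", "B", True)] * 10 + [("20002", "B", False)] * 30
    + [("20002", "A", True)] * 5 + [("20002", "A", False)] * 5
)


def train_zip_model(records):
    """Learn the historical approval rate per ZIP code; group is never read."""
    counts = defaultdict(lambda: [0, 0])  # zip_code -> [approved, total]
    for zip_code, _group, approved in records:
        counts[zip_code][0] += int(approved)
        counts[zip_code][1] += 1
    return {z: a / t for z, (a, t) in counts.items()}


def predict(model, zip_code, threshold=0.5):
    """Approve when the ZIP code's historical approval rate meets the threshold."""
    return model[zip_code] >= threshold


def approval_rate_by_group(model, records):
    """Audit step: share of each group that the 'colorblind' model approves."""
    totals = defaultdict(lambda: [0, 0])  # group -> [approved, total]
    for zip_code, group, _approved in records:
        totals[group][0] += int(predict(model, zip_code))
        totals[group][1] += 1
    return {g: a / t for g, (a, t) in totals.items()}


model = train_zip_model(HISTORY)
rates = approval_rate_by_group(model, HISTORY)
print(rates)  # group A is approved far more often than group B
```

In this toy data, most of group A lives in the ZIP code with a high historical approval rate and most of group B lives in the other one, so dropping the group column changes very little.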

The Role of Human Decision-Makers

Behind every algorithm lies a team of human designers, engineers, and decision-makers. Their choices—what values to prioritize, which data to use, and how to measure success—shape the behavior of AI systems. Sometimes, these decisions are made with the best intentions but without a full understanding of the social implications. Other times, commercial pressures push companies to move fast and break things, leaving little room for ethical reflection. The responsibility for biased outcomes doesn’t rest with the algorithm alone; it’s shared by the people who build, deploy, and profit from these technologies.

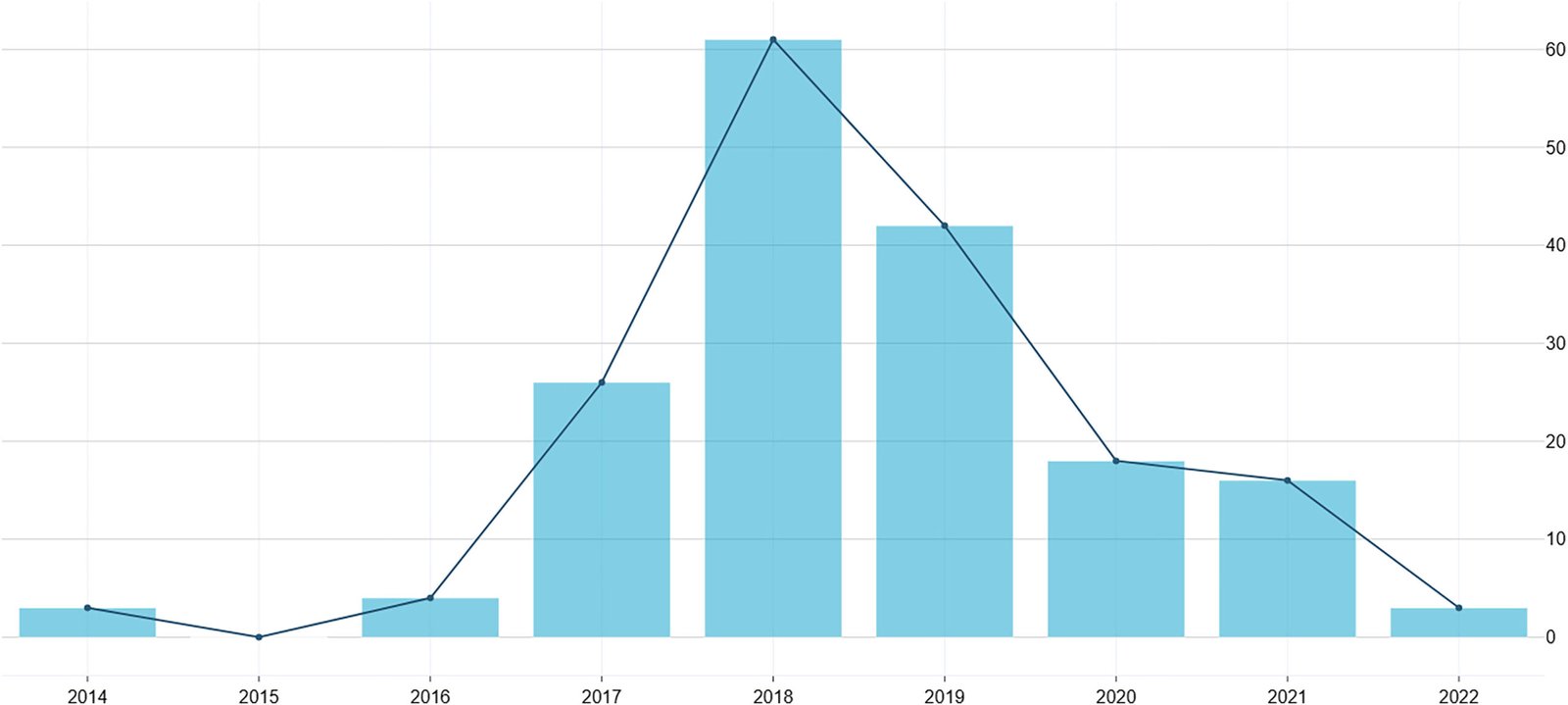

The Science Behind Detecting and Measuring Bias

Researchers have developed a variety of tools and techniques to uncover bias in algorithms. These range from statistical tests that compare outcomes across groups to more advanced methods like “fairness audits” and “counterfactual analysis.” Scientists might, for example, run an algorithm on a set of synthetic profiles that differ only by race or gender to see if decisions change. While these approaches are powerful, they are not foolproof. Measuring fairness is complicated by the fact that there are several competing definitions of fairness, such as equal approval rates across groups or equal error rates across groups, and when the underlying rates differ between groups, these generally cannot all be satisfied at once. A system that is fair by one definition may be unfair by another. This makes the scientific study of algorithmic bias a lively, evolving field.
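
The synthetic-profile test described above can be sketched in a few lines of Python. This is an illustration under stated assumptions, not a real audit tool: `toy_model` is a hypothetical stand-in for a black-box system and is deliberately biased so the check has something to find, and the profile fields (`years_experience`, `group`) are invented.

```python
from typing import Any, Callable, Dict, List

Profile = Dict[str, Any]


def toy_model(profile: Profile) -> bool:
    """Hypothetical black-box decision system, deliberately biased."""
    score = profile["years_experience"] * 10
    if profile["group"] == "B":
        score -= 15  # hidden penalty that the audit should expose
    return score >= 50


def counterfactual_flips(
    model: Callable[[Profile], bool],
    profiles: List[Profile],
    attribute: str,
    values: List[Any],
) -> List[Dict[str, Any]]:
    """Return the profiles whose decision changes when only `attribute` changes."""
    flipped = []
    for profile in profiles:
        # Re-run the model on copies that differ in exactly one attribute.
        decisions = {v: model({**profile, attribute: v}) for v in values}
        if len(set(decisions.values())) > 1:
            flipped.append({"profile": profile, "decisions": decisions})
    return flipped


# Synthetic applicants with 3 to 8 years of experience.
profiles = [{"years_experience": y, "group": "A"} for y in range(3, 9)]
flips = counterfactual_flips(toy_model, profiles, "group", ["A", "B"])
for item in flips:
    print(item["profile"]["years_experience"], item["decisions"])
```

A clean result from a test like this only shows that the chosen attribute has no direct effect. It says nothing about proxies such as ZIP code, which is one reason such tests are not foolproof.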

Consequences for Society and Individuals

The impacts of biased algorithms are not just academic; they touch real people in profound ways. A wrongly denied loan can push a family into financial hardship. An unfair criminal risk score can contribute to a decision that keeps someone in jail. Even a recommendation system that repeatedly overlooks minority-owned businesses can damage livelihoods and limit opportunities. On a larger scale, algorithmic bias can erode trust in technology and widen existing social divides. The sense of injustice felt by those affected can spark protests, lawsuits, and demands for reform, turning a technical issue into a societal crisis.

Can Algorithms Be “Fixed”?

Tech companies and researchers are working hard to build more equitable algorithms. Solutions include diversifying training data, developing fairness metrics, and involving ethicists in the design process. Some advocate for “explainable AI,” where the reasoning behind decisions can be traced and scrutinized. Others call for regular audits and independent oversight. While progress is being made, there is no magic bullet—fixing bias is an ongoing process that requires vigilance, humility, and collaboration across disciplines. It also demands that we listen to the voices of those most affected by algorithmic decisions.
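
As a rough illustration of what a fairness metric looks like in practice, the Python sketch below computes two common ones on invented data: the selection rate (the share of each group that gets a positive decision) and the true positive rate (the share of genuinely qualified people in each group who get a positive decision). The records, group names, and function names are all hypothetical. The data is built so that the two metrics disagree, which is one reason there is no magic bullet.

```python
# Hypothetical audit records: (group, actually_qualified, predicted_positive).
RECORDS = (
    [("A", 1, 1)] * 4 + [("A", 1, 0)] * 4 + [("A", 0, 0)] * 2
    + [("B", 1, 1)] * 4 + [("B", 0, 0)] * 6
)


def selection_rate(records, group):
    """Share of the group that receives a positive decision."""
    rows = [r for r in records if r[0] == group]
    return sum(pred for _, _, pred in rows) / len(rows)


def true_positive_rate(records, group):
    """Share of the group's qualified members who receive a positive decision."""
    qualified = [r for r in records if r[0] == group and r[1] == 1]
    return sum(pred for _, _, pred in qualified) / len(qualified)


for g in ("A", "B"):
    print(g, selection_rate(RECORDS, g), true_positive_rate(RECORDS, g))

# Gaps between groups under each definition of fairness.
parity_gap = abs(selection_rate(RECORDS, "A") - selection_rate(RECORDS, "B"))
opportunity_gap = abs(
    true_positive_rate(RECORDS, "A") - true_positive_rate(RECORDS, "B")
)
print(parity_gap, opportunity_gap)
```

Both groups are selected at the same rate here, so the system looks fair by the first measure, yet qualified members of group A are passed over half the time while qualified members of group B never are. Which gap matters more is a judgment call that the metrics cannot make on their own.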

The Debate: Nature of Algorithmic Racism

Some people argue that algorithms can’t truly be racist because they lack intent or consciousness. Others believe that intent is irrelevant if the effects are harmful. This debate stirs strong emotions. For those on the receiving end of algorithmic injustice, the distinction between intent and impact can feel academic—what matters is the outcome. The conversation about algorithmic bias challenges us to rethink what fairness means in an increasingly automated world, and to ask tough questions about who gets to define the rules.

The Path Forward: Building Trust and Accountability

Restoring faith in AI will require more than technical fixes; it will take transparency, accountability, and a willingness to confront uncomfortable truths. This means opening up algorithms to public scrutiny, holding companies accountable for the impacts of their technologies, and ensuring diverse voices are heard at every stage. Education is key—people need to understand how these systems work and how they can go wrong. The stakes are high: if we get this right, AI can be a force for good. If not, we risk automating injustice on a massive scale.

Reflection on the Future of AI and Social Justice

The question of whether an algorithm can be racist forces us to confront our own biases, both human and machine. As we hurtle toward a future shaped by artificial intelligence, the choices we make today will echo for generations. Will we build systems that challenge inequality, or ones that quietly entrench it? The answer depends on all of us—scientists, engineers, activists, and everyday citizens. In the end, technology is a mirror. What kind of reflection do we want to see?