Whispers of a closed-door demo in coastal California set the mood: a handful of elite mathematicians, a whiteboard full of Olympiad-grade problems, and an AI that refused to blink. Whether or not cameras were rolling, the verified signal came from elsewhere: artificial intelligence has crossed a line many thought would hold for years. Google DeepMind announced an officially graded gold-medal performance at the International Mathematical Olympiad, while OpenAI reported a comparable score on the same problems in its own evaluation, marking a decisive moment for machine reasoning. Pair that with last year’s silver-level breakthrough and new models that are trained to think before they speak, and a pattern emerges. Something fundamental about how we do mathematics is shifting, and fast.

The Hidden Clues

Here’s the hook: a model now solves Olympiad problems at gold-medal standard, the kind that have historically separated future Fields Medalists from the rest. Twelve months earlier, DeepMind’s paired systems reached silver level by solving four of six problems; this year an advanced Gemini variant solved five of six within the competition window, with official grading. OpenAI’s team, running a separate evaluation on the same set, reported a matching gold-level score. These aren’t cherry-picked puzzles but problems from the toughest high-school-level contest on the planet, designed to expose weak reasoning. If you were waiting for evidence that AI can plan, backtrack, and craft arguments, this is it.

Just as telling are the training signals beneath the headlines. OpenAI’s 2024 work showed that giving models time to “think” dramatically boosts performance on demanding benchmarks, including exams that feed into the USA Math Olympiad. DeepMind’s latest setup layers parallel exploration on top, encouraging multiple candidate lines of argument before committing to a final proof. Together, these tricks look less like autocomplete and more like methodical problem solving. The upshot: the ceiling on machine reasoning wasn’t a ceiling at all, just a low roof we hadn’t noticed.

What Actually Happened

Let’s ground the drama in the timeline. In July 2024, DeepMind’s AlphaProof and AlphaGeometry 2 achieved a silver-equivalent result at the IMO by solving four of six problems, a first-of-its-kind moment. In July 2025, an advanced Gemini with “Deep Think” scored 35 out of 42, a gold-level result, and, crucially, did it end-to-end in natural language under the same time limits as students, with official certification. OpenAI reported a gold-level score using a general-purpose model, graded by former medalists, though not as an official entrant. Even skeptics now concede the curve is pointing up, and climbing quickly.
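For readers who want the arithmetic behind those scores, here is a minimal sketch. Each of the six IMO problems is graded out of 7 points, which is where the maximum of 42 comes from. The helper name `score_if_fully_solved` is made up for illustration, and the sketch assumes every solved problem earns full marks with no partial credit elsewhere, which is a simplification.

```python
# Each IMO paper has 6 problems, each graded out of 7 points.
POINTS_PER_PROBLEM = 7
NUM_PROBLEMS = 6
MAX_SCORE = POINTS_PER_PROBLEM * NUM_PROBLEMS  # 42


def score_if_fully_solved(problems_solved: int) -> int:
    """Score under the simplifying assumption that each solved problem
    earns full marks and unsolved problems earn nothing."""
    if not 0 <= problems_solved <= NUM_PROBLEMS:
        raise ValueError("problems_solved must be between 0 and 6")
    return problems_solved * POINTS_PER_PROBLEM


if __name__ == "__main__":
    for year, solved in [(2024, 4), (2025, 5)]:
        print(year, f"{score_if_fully_solved(solved)}/{MAX_SCORE}")
```

Under that assumption, four solved problems correspond to 28 points and five to 35, which matches the 35 out of 42 quoted for the 2025 run.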

The supporting benchmarks deepen the picture. OpenAI’s o1 showed dramatic gains on the 2024 AIME exams, a feeder to the USA Mathematical Olympiad, vaulting from the low scores of earlier models to solving the vast majority of problems when given more time to deliberate. Meanwhile, news analysis and industry trackers have emphasized that these results rely on reinforcement learning that rewards clarity and correct reasoning, not just answers. That distinction matters because it nudges systems toward proof-like structure rather than guesswork. In short, the field is converging on training that values how you think, not just what you produce.

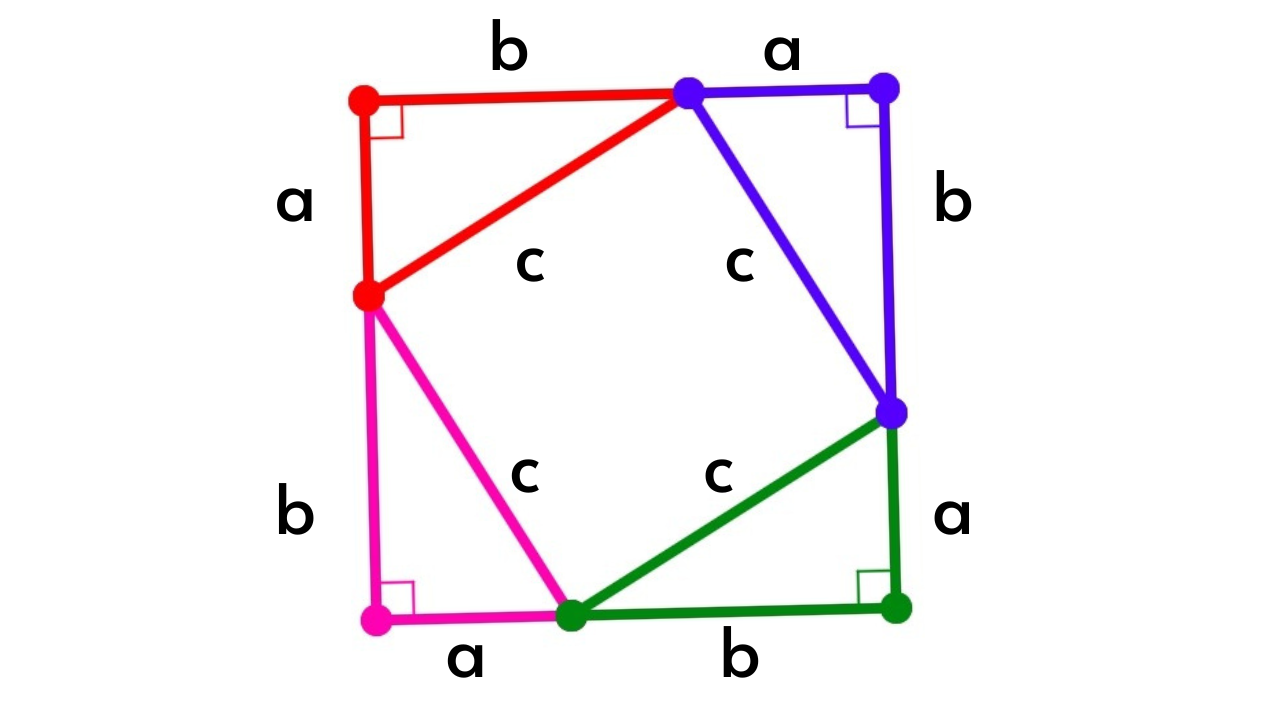

From Ancient Tools to Modern Science

Mathematics has always been a dialogue between intuition and rigor, from Euclid’s constructions to Hilbert’s formalism. Today’s twist is that proof assistants like Lean and a wave of theorem-proving research are teaching models to navigate formal logic step by step. Systems such as Lean-STaR and LeanAgent interleave natural-language “thinking” with verified tactics, turning messy brainstorming into machine-checkable progress. That hybrid style mirrors how humans sketch ideas before writing a clean proof, and it’s beginning to pay off across diverse math libraries. In other words, AI isn’t just parroting theorems – it’s learning how to move within mathematics as a living landscape.
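To make the idea of interleaving informal thoughts with verified tactics concrete, here is a tiny Lean 4 sketch. It is not output from Lean-STaR or LeanAgent; the theorem names are invented for illustration, and the comments simply stand in for the natural-language “thinking” that precedes each machine-checked step.

```lean
-- Thought: addition of natural numbers is commutative,
-- and the core library already proves it as `Nat.add_comm`.
theorem swap_sum (a b : Nat) : a + b = b + a := by
  exact Nat.add_comm a b

-- Thought: `a + b + c` parses as `(a + b) + c`, so treat `a + b`
-- as a single term and commute it with `c`.
theorem move_last_to_front (a b c : Nat) : a + b + c = c + (a + b) := by
  exact Nat.add_comm (a + b) c
```

The comments carry the strategy, while the tactic lines are what Lean actually checks. If a step is wrong, the proof simply fails to compile.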

This isn’t niche tinkering. Formal methods give us the audit trail mathematics demands, letting researchers verify each inference the way a lab replicates an experiment. As these tools spread, they promise to turn more of math into a reproducible, searchable medium, where insights travel faster and errors die quicker. That shift reframes proof not as a fragile art but as an industrial-strength pipeline for discovery. It’s a cultural change as much as a technical one.

Inside the Black Box: How the New Models Actually Think

Forget the caricature of a chatbot blurting answers; the new game is slow, deliberate cognition. OpenAI’s approach shows performance rising when models are allowed to spend more compute “thinking,” sampling many internal trajectories before deciding. DeepMind’s “Deep Think” adds parallel exploration so that several proof sketches grow in tandem and are reconciled into a final, coherent argument. Combined with consensus scoring and self-verification, this looks a lot like a research group arguing with itself on fast-forward. The engineering isn’t magic; it’s disciplined search guided by learned heuristics.
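The sample-then-vote idea can be sketched in a few lines of Python. This is a toy under loud assumptions, not either lab’s actual method: `sample_candidate` is a hypothetical stand-in for one reasoning trajectory (it returns the right answer 60% of the time), and `passes_self_check` is a hypothetical cheap verifier that only tests a necessary condition.

```python
import random
from collections import Counter

TRUE_ANSWER = 42  # toy ground truth, used only by the simulated sampler


def sample_candidate(rng: random.Random) -> int:
    """Hypothetical stand-in for one reasoning trajectory.
    Returns the right answer 60% of the time, else a nearby wrong one."""
    if rng.random() < 0.6:
        return TRUE_ANSWER
    return rng.choice([40, 41, 43, 44])


def passes_self_check(answer: int) -> bool:
    """Hypothetical cheap verifier: a necessary condition (evenness),
    so it filters some wrong answers but cannot confirm a right one."""
    return answer % 2 == 0


def consensus_answer(num_samples: int, seed: int = 0) -> tuple[int, float]:
    """Sample many candidates, drop those failing the self-check,
    then return the majority answer and its share of the surviving votes."""
    rng = random.Random(seed)
    candidates = [sample_candidate(rng) for _ in range(num_samples)]
    verified = [c for c in candidates if passes_self_check(c)]
    pool = verified or candidates  # fall back if the check rejects everything
    answer, count = Counter(pool).most_common(1)[0]
    return answer, count / len(pool)


if __name__ == "__main__":
    answer, share = consensus_answer(200, seed=1)
    print(f"consensus answer: {answer} (share of verified votes: {share:.2f})")
```

The point of the design is that a single trajectory is unreliable, but many independent ones, filtered and then tallied, tend to agree on the correct answer far more often than any one of them does.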

A small but telling detail: last year’s silver-standard result relied on translating problems into formal languages and took days of computation. This year’s gold-level run stayed in natural language and finished within the competition’s four-and-a-half-hour sessions. That leap suggests we’re moving from specialist solvers to general-purpose reasoners that can read a problem, strategize, and write a proof in the lingua franca mathematicians actually use. It’s not “understanding” in the human sense, but it rhymes with it in practice.

Why It Matters

Mathematics is the skeleton key for science and engineering, so a breakthrough in machine math is a breakthrough everywhere. Traditional computer algebra systems excel at manipulation; human mathematicians excel at inventing clever invariants and structures; the new AIs are starting to bridge the two by proposing multi-step strategies that hold up under scrutiny. That’s a big deal for fields like materials discovery, cryptography, and climate modeling where the right symbolic insight can unlock orders-of-magnitude gains. It also promises mundane but crucial dividends: fewer bugs in algorithms, better optimization in chips, more robust proofs in safety-critical code. The competitive edge will flow to labs and companies that pair human judgment with machine-scale exploration.

There’s also a cultural reckoning here. If models can ace Olympiad problems, education must emphasize conceptual depth over trick-based tactics, and research groups may shift toward curating conjectures and vetting AI-generated ideas. Some observers warn that the systems can still stumble on elementary tasks, a reminder that competence is uneven and context-sensitive. But on balance, the frontier is moving toward collaboration instead of replacement. The question isn’t whether AI belongs in the toolkit – it’s how we wield it responsibly.

Global Perspectives

The IMO is a youth contest, but it doubles as a signaling arena watched by universities and national academies worldwide. Roughly one tenth of human contestants traditionally reach gold, which frames the significance of an AI scoring in that tier. This year, organizers confirmed one company’s AI results via official grading while acknowledging that others ran private evaluations on the same problems. That mix of formal and informal tests mirrors how labs across continents are trialing these systems on local curricula and national contests. The global message is unmistakable: frontier reasoning has gone transnational.

Education ministries and competition committees are already debating the rules of engagement. Some will treat AI like calculators once were – prohibited in contests, permitted in classrooms under guardrails. Others will move faster, integrating proof assistants into advanced courses so students learn to both read and write verifiable arguments. Expect divergence before convergence, with policy chasing capability. That’s normal in paradigm shifts.

The Future Landscape

Technically, the horizon points to tighter loops between informal reasoning and formal verification, plus architectures that can self-correct without human nudges. We should expect models that explain choices in cleaner mathematical prose and produce Lean-ready proofs by default. On the safety side, standardized, third-party grading for complex tasks – math, biology, software proofs – will move from nice-to-have to nonnegotiable. That will help separate genuine reasoning from brittle prompt tricks. The chips, data, and energy appetite will keep rising, so efficiency research matters too.

Economically, this is a lever for scientific productivity rather than a pink slip machine. Labs that pair human creativity with AI-scale search will uncover results that neither could reach alone. But the benefits won’t be automatic; access, transparency, and verification will decide who gets lifted and who gets left behind. The smartest bet is on open evaluations and shared benchmarks that keep everyone honest. That’s how science compounds.

How You Can Engage

If you’re a student, practice reading official Olympiad solutions alongside AI-generated ones to spot what makes arguments robust; the habit will sharpen both intuition and rigor. Educators can pilot formal proof tools such as Lean in upper-level courses, pairing them with human-written expositions so students see both structure and story. Researchers and engineers can contribute to open math libraries, write minimal-counterexample tests, and submit hard problem sets to independent evaluators. Policy makers can support transparent, third-party grading for high-stakes AI claims and fund reproducible research infrastructure. Curious readers can follow lab blogs and release notes with a skeptic’s eye, prioritizing results that are externally certified.

Suhail Ahmed is a passionate digital professional and nature enthusiast with over 8 years of experience in content strategy, SEO, web development, and digital operations. Alongside his freelance journey, Suhail actively contributes to nature and wildlife platforms like Discover Wildlife, where he channels his curiosity for the planet into engaging, educational storytelling.
