In living rooms, fields, and lab arenas around the world, a quiet opera is unfolding just beyond our ears. Male mice court with rapid-fire arias too high-pitched for humans to hear, while females answer with subtle shifts in posture, attention, and approach. Scientists have spent decades trying to catch these songs in the act, teasing apart how they’re produced, what they mean, and why some melodies win more hearts than others. The story is part mystery, part engineering marvel: tiny throats crafting high-speed air jets that whistle like miniature flutes. It’s also a window into brain circuits for social communication, with insights that stretch from animal behavior to human health.

The Hidden Clues

You can sit in a silent room with mice and still be surrounded by love songs. Their courtship vocals race into ultrasonic territory, often soaring well above the upper edge of human hearing (about 20 kHz) and sketching spiky shapes on a spectrogram like constellations in a night sky. Each song is made of short “syllables” strung into phrases, and the patterns change with mood, arousal, and audience. I remember the first time I watched a live spectrogram during a courtship test: the screen lit up while the room stayed eerily quiet, and it felt like discovering invisible graffiti. Females notice these invisible signals, approaching the source more quickly or lingering longer when a performance hits the right notes. That responsiveness turns a private broadcast into a social dialogue, with timing and nuance that matter as much as pitch.

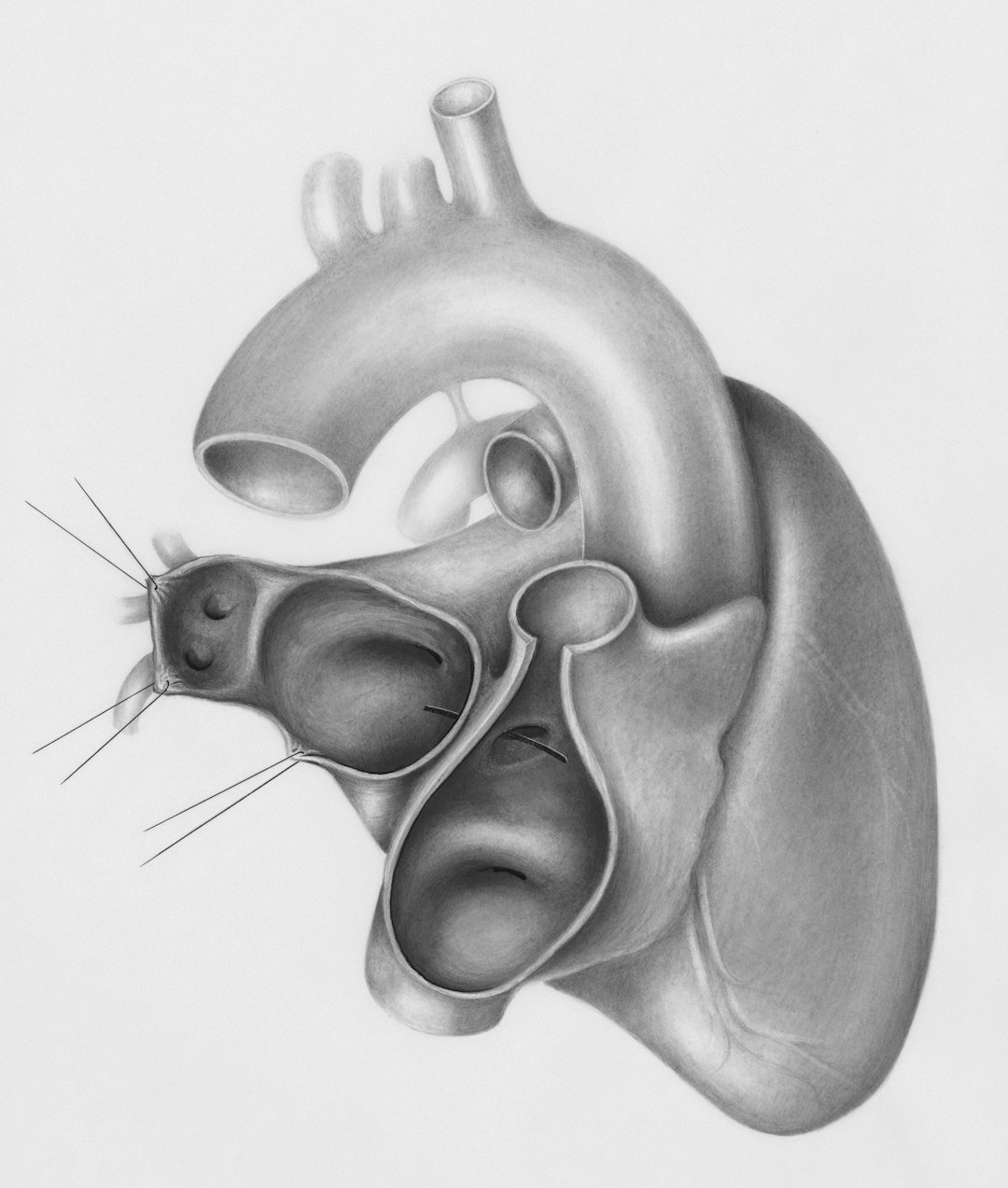

How the Songs Are Made

Unlike our own speech, which relies on vibrating vocal folds, mice push fast air through a tiny laryngeal constriction to create an aerodynamic whistle. Think of it as a microscopic flute or kettle: a jet of air strikes tissue and edges inside the larynx, and the resulting turbulence generates ultrasound. Small laryngeal muscles fine-tune the aperture and jet angle, nudging the frequency up or down and producing rapid leaps that would sound, if we could hear them, like athletic falsetto trills. Breathing muscles add power and phrasing, turning individual squeaks into phrases with attack and decay. When the animal is excited, the whole system tightens, and the pitch can vault upward in a fraction of a second. The result is speed and range that feel almost superhuman, delivered by a sound source far smaller than a pea.

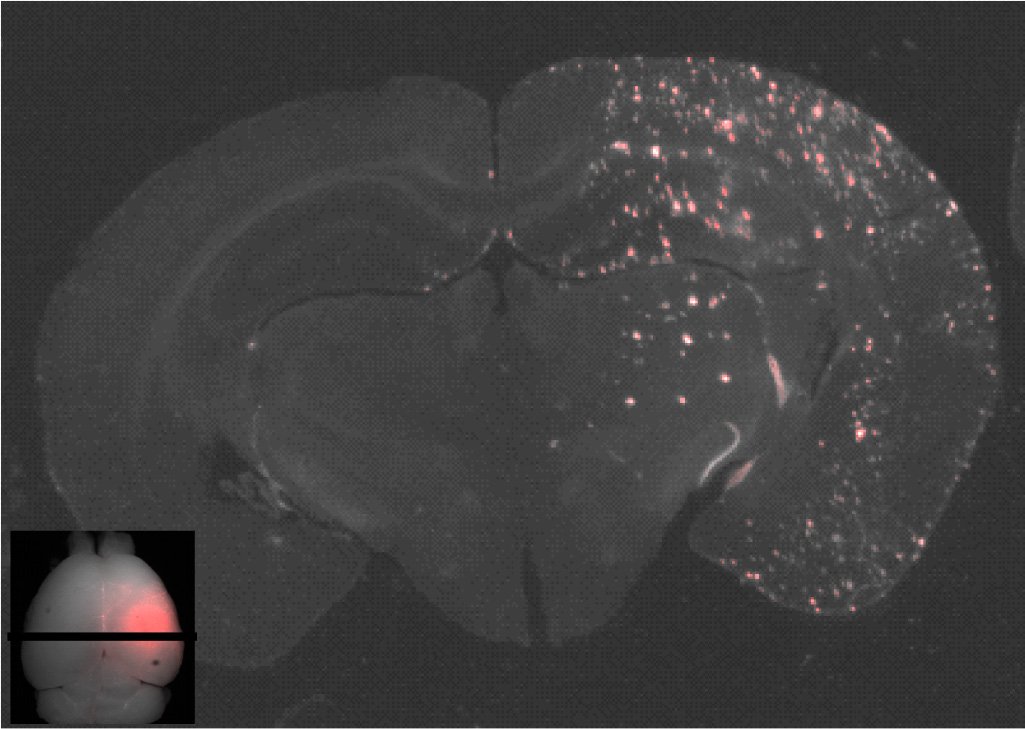

From Ancient Tools to Modern Science

Early researchers knew rodents were saying something, but their tape recorders and microphones just couldn’t catch what. The breakthrough came with ultrasonic microphones and software that paints sound as pictures, letting scientists measure pitch, rhythm, and “syntax” without hearing a thing. High-speed imaging, tiny cameras, and refined anatomical studies then pinpointed the laryngeal structures that shape the whistle, settling long-standing debates about how ultrasound is produced. Today, machine-learning models sift through tens of thousands of syllables, clustering them by shape and timing to reveal dialects across strains and labs. Optogenetic and neural-recording tools add a brain-level view, showing how motivation and social context flip the system on and off. Put together, the field has sprinted from ghostly hints to high-resolution maps of sound, tissue, and neural control.
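To make the idea of "painting sound as pictures" concrete, here is a minimal sketch of the core step behind a spectrogram: slicing a recording into short frames and finding the strongest frequency in each. It is an illustration under stated assumptions, not any lab's actual pipeline. The 250 kHz sample rate, the synthetic 60 to 80 kHz upward sweep, and the function names `dominant_frequency` and `frequency_track` are all invented for this example, and real tools use fast FFT libraries rather than the slow pure-Python transform shown here.

```python
import cmath
import math


def dominant_frequency(frame, sample_rate):
    """Return the frequency (Hz) of the strongest DFT bin in one frame."""
    n = len(frame)
    # A Hann window tapers the frame edges to reduce spectral leakage.
    windowed = [
        x * (0.5 - 0.5 * math.cos(2 * math.pi * i / (n - 1)))
        for i, x in enumerate(frame)
    ]
    best_bin, best_power = 0, 0.0
    for k in range(1, n // 2):  # skip DC, stop below the Nyquist bin
        acc = sum(
            x * cmath.exp(-2j * math.pi * k * i / n)
            for i, x in enumerate(windowed)
        )
        power = abs(acc) ** 2
        if power > best_power:
            best_bin, best_power = k, power
    return best_bin * sample_rate / n


def frequency_track(signal, sample_rate, frame_len=128, hop=128):
    """Peak frequency of each consecutive frame: one 'column' per frame."""
    return [
        dominant_frequency(signal[start:start + frame_len], sample_rate)
        for start in range(0, len(signal) - frame_len + 1, hop)
    ]


# Assumed recording parameters; ultrasonic work needs a high sample rate.
SAMPLE_RATE = 250_000
N_SAMPLES = 1000  # 4 ms of audio

# Synthetic stand-in for a rising syllable: a sweep from 60 kHz to 80 kHz.
signal, phase = [], 0.0
for i in range(N_SAMPLES):
    freq = 60_000 + 20_000 * i / N_SAMPLES
    signal.append(math.sin(phase))
    phase += 2 * math.pi * freq / SAMPLE_RATE

track = frequency_track(signal, SAMPLE_RATE)
print([round(f / 1000, 1) for f in track])  # peak frequency per frame, in kHz
```

Plotting the full power of every bin in every frame, rather than just the peak, gives the familiar spectrogram image; the per-frame peak is the kind of pitch contour that later analysis steps work from.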

What the Songs Mean

Context is everything: a male encountering female scent tends to produce longer, more intricate sequences than he does around rivals or alone. Individual syllables come in characteristic shapes – rises, falls, chevrons, and flat notes – and the order they occur in can change with arousal and proximity. Many experiments report that females approach faster or stay longer when songs are more dynamic, a sign that complexity may carry information about the singer’s condition or intent. Pup isolation calls live in a different corner of the ultrasonic spectrum and tug powerfully on maternal behavior, showing that these signals are more than noise. In crowded settings, males may vary tempo or insert pauses, as if waiting for an opening in a conversation. It’s not bird-style vocal learning, but it is sophisticated coding of state and social context.
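The shape categories mentioned above (rises, falls, chevrons, flat notes) can be sketched as a simple rule-based sorter working on a syllable's pitch contour. This is a toy illustration only: the function name `classify_syllable`, the 3 kHz tolerance, and the example contours are assumptions made up for this sketch, not published category definitions, and real studies use richer features or learned clusters.

```python
def classify_syllable(contour_hz, flat_tolerance_hz=3000.0):
    """Label a pitch contour (list of Hz values over time) by its overall shape.

    The tolerance is an arbitrary illustrative threshold, not a standard.
    """
    start, end = contour_hz[0], contour_hz[-1]
    peak = max(contour_hz)
    peak_index = contour_hz.index(peak)

    # Flat: the whole contour stays within a narrow band.
    if peak - min(contour_hz) <= flat_tolerance_hz:
        return "flat"

    # Chevron: pitch climbs to an interior peak, then drops again.
    interior_peak = 0 < peak_index < len(contour_hz) - 1
    if (interior_peak
            and peak - start > flat_tolerance_hz
            and peak - end > flat_tolerance_hz):
        return "chevron"

    if end - start > flat_tolerance_hz:
        return "rise"
    if start - end > flat_tolerance_hz:
        return "fall"
    return "other"


# Hypothetical contours, in Hz, sampled at a few points along each syllable.
examples = {
    "a": [60_000, 65_000, 70_000, 75_000],
    "b": [75_000, 70_000, 62_000],
    "c": [60_000, 72_000, 61_000],
    "d": [70_000, 70_500, 71_000, 70_200],
}
labels = {name: classify_syllable(contour) for name, contour in examples.items()}
print(labels)
```

Once every syllable has a label, a song becomes a sequence of symbols, which is what makes it possible to ask whether ordering shifts with arousal or proximity.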

Why It Matters

Mouse song is a noninvasive readout of the social brain, which makes it invaluable for basic biology and for modeling human communication challenges. When researchers examine strains with genetic differences relevant to neurodevelopment, they often find changes in call rate, timing, or pitch: subtle but consistent clues to underlying circuitry. Compared with traditional behavioral tests that rely on human scoring, ultrasound analysis can be faster, more objective, and sensitive to patterns our ears would miss. That means better early screening in lab studies, tighter links between genes and behavior, and more reliable comparisons across labs. It also helps separate myths from mechanisms, clarifying that mice mostly don’t learn songs the way birds do, even though they can reshape performance with state and context. In practical terms, the songs compress motivation, arousal, and intent into signals that can be measured and modeled.

Global Perspectives

House mice live almost everywhere we do, from farm sheds to high-rise apartments, and their communication has adapted to wildly different acoustic backdrops. In quiet lab rooms, syllables can ring out in long strings; in noisy environments, calls may shorten or cluster around windows of opportunity. Field recordings – still a logistical challenge – reveal that wild mice can be far less chatty than their lab cousins, highlighting the need to test findings beyond controlled arenas. Cross-lab collaborations are now building shared datasets and standardizing analysis pipelines so a “syllable” means the same thing in Boston and Berlin. Ethical advances also matter: better recording setups reduce stress, and husbandry that respects natural behavior yields richer, more representative data. The payoff is a truer picture of how a global commensal species keeps its conversations going.

The Future Landscape

Next-generation systems will likely combine miniature wearable microphones, beamforming arrays, and on-animal motion sensors to map who is singing to whom in real time. Algorithms already label syllables automatically; the next step is decoding them in context, predicting intent from patterns with the kind of confidence that invites causal tests. Standardized benchmarks and shared, annotated corpora will be crucial so models don’t overfit to a single strain or recording room. At the biomechanical level, improved imaging will push deeper into the larynx to watch jet formation as it happens, linking muscle twitches to instantaneous frequency changes. Beyond the lab, the technology could inform humane pest management by exploiting natural communication rather than poison or traps. The central challenge is to keep the data honest: transparent methods, open code, and careful biology must guide every shiny new tool.
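Full beamforming is more involved than fits here, but its basic ingredient, the difference in a sound's arrival time at two microphones, can be sketched in a few lines. The example below is a simplified stand-in, not a beamformer: it estimates the delay by brute-force cross-correlation on a made-up noise burst. The 250 kHz sample rate, the 12-sample delay, and the function name `best_lag` are assumptions for illustration; the speed of sound is taken as roughly 343 m/s in room-temperature air.

```python
import random


def best_lag(mic_a, mic_b, max_lag):
    """Return the lag (in samples) by which mic_b trails mic_a.

    Tries every lag in [-max_lag, max_lag] and keeps the one that
    maximises the cross-correlation between the two recordings.
    """
    best, best_score = 0, float("-inf")
    for lag in range(-max_lag, max_lag + 1):
        score = 0.0
        for i, x in enumerate(mic_a):
            j = i + lag
            if 0 <= j < len(mic_b):
                score += x * mic_b[j]
        if score > best_score:
            best, best_score = lag, score
    return best


SAMPLE_RATE = 250_000      # assumed ultrasonic recording rate, in Hz
SPEED_OF_SOUND = 343.0     # metres per second, approximate
TRUE_DELAY = 12            # samples; chosen arbitrarily for this demo

# A synthetic noise burst stands in for a call picked up by both microphones.
rng = random.Random(0)
burst = [rng.uniform(-1.0, 1.0) for _ in range(200)]
mic_a = burst + [0.0] * 40
mic_b = [0.0] * TRUE_DELAY + burst + [0.0] * (40 - TRUE_DELAY)

lag = best_lag(mic_a, mic_b, max_lag=30)
path_difference_m = lag / SAMPLE_RATE * SPEED_OF_SOUND
print(lag, round(path_difference_m * 100, 2), "cm closer to microphone A")
```

A positive lag means the sound reached microphone A first, so the singer was nearer to it. With more microphones, several such pairwise delays can be combined to narrow down a position, which is the step that lets a recording be attributed to one animal rather than another.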

What You Can Do

If the idea of an invisible love song fascinates you, there are accessible ways to tune in and support the science. Community labs and some nature centers offer ultrasonic microphones you can borrow, and with a laptop spectrogram app you can visualize calls at home in a quiet, humane observation setup. Consider backing open-data projects that share annotated recordings, because pooled datasets speed discovery and reduce waste across labs. If you keep pet mice, enrich their space with hiding spots and consistent handling; less stress often means more natural communication and better welfare. And when you read about mouse behavior tied to human health, look for studies that provide clear acoustic definitions and open analysis pipelines. Curiosity, plus a little skepticism and support for open science, helps keep the conversation honest and moving forward.

Suhail Ahmed is a passionate digital professional and nature enthusiast with over 8 years of experience in content strategy, SEO, web development, and digital operations. Alongside his freelance journey, Suhail actively contributes to nature and wildlife platforms like Discover Wildlife, where he channels his curiosity for the planet into engaging, educational storytelling.

With a strong background in managing digital ecosystems — from ecommerce stores and WordPress websites to social media and automation — Suhail merges technical precision with creative insight. His content reflects a rare balance: SEO-friendly yet deeply human, data-informed yet emotionally resonant.

Driven by a love for discovery and storytelling, Suhail believes in using digital platforms to amplify causes that matter — especially those protecting Earth’s biodiversity and inspiring sustainable living. Whether he’s managing online projects or crafting wildlife content, his goal remains the same: to inform, inspire, and leave a positive digital footprint.