Picture this: you’re talking to your phone, asking for directions, and it completely butchers your request because it can’t understand your Southern drawl or Boston accent. Frustrating, right? Artificial intelligence researchers have been working to close this gap, building programs that can decode American regional speech patterns. These AI systems are changing how machines understand speech across America’s diverse linguistic landscape.

The Science Behind Accent Recognition Technology

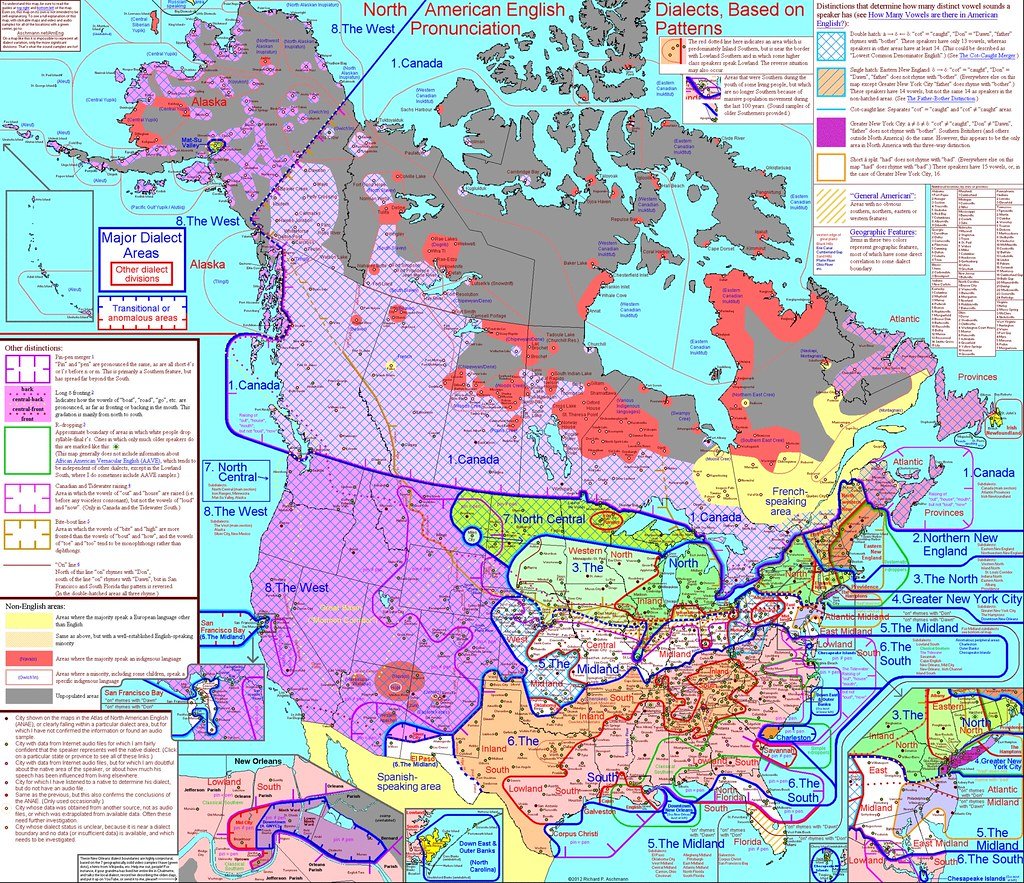

Training AI to understand regional accents isn’t just about teaching machines different ways to say “park the car.” It involves analyzing thousands of hours of speech data, identifying subtle phonetic variations, and mapping those differences into linguistic models. Scientists use deep learning algorithms that detect small changes in vowel pronunciation, consonant shifts, and rhythm patterns, the features that make a Boston accent sound so different from a Texas twang. The technology relies on neural networks that process acoustic signals in a way loosely inspired by how our brains interpret speech. These systems learn that when someone from Minnesota says “about,” it might sound more like “aboot” to unfamiliar ears, and the AI learns to connect these variations to the same underlying word.
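One simple way to picture how variations get connected to the same underlying word is a pronunciation lexicon that stores several phoneme sequences per word. The sketch below is only an illustration: the tiny `LEXICON`, its ARPAbet-style transcriptions, and the `closest_word` helper are assumptions made for this example, not part of any system described in this article, and real recognizers work on audio rather than hand-typed phonemes.

```python
from typing import Dict, List, Sequence, Tuple

# Hypothetical mini-lexicon: each word lists a rhotic and an r-dropping
# pronunciation, written with ARPAbet-style symbols for illustration.
LEXICON: Dict[str, List[Tuple[str, ...]]] = {
    "park": [("P", "AA", "R", "K"), ("P", "AA", "K")],
    "car": [("K", "AA", "R"), ("K", "AA")],
    "yard": [("Y", "AA", "R", "D"), ("Y", "AA", "D")],
}


def edit_distance(a: Sequence[str], b: Sequence[str]) -> int:
    """Levenshtein distance between two phoneme sequences."""
    prev = list(range(len(b) + 1))
    for i, pa in enumerate(a, start=1):
        curr = [i]
        for j, pb in enumerate(b, start=1):
            cost = 0 if pa == pb else 1
            curr.append(min(prev[j] + 1, curr[j - 1] + 1, prev[j - 1] + cost))
        prev = curr
    return prev[-1]


def closest_word(phones: Sequence[str]) -> str:
    """Return the lexicon word whose nearest variant best matches `phones`."""
    best_word, best_dist = "", None
    for word, variants in LEXICON.items():
        for variant in variants:
            dist = edit_distance(phones, variant)
            if best_dist is None or dist < best_dist:
                best_word, best_dist = word, dist
    return best_word
```

With this lexicon, both `closest_word(("P", "AA", "R", "K"))` and `closest_word(("P", "AA", "K"))` resolve to the same entry, which is the basic idea behind treating accent variants as one word.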

Program #1 – DeepSouth AI Dialect Processor

DeepSouth represents one of the most ambitious projects in accent recognition, specifically designed to understand the rich variety of Southern American dialects. Developed by researchers at Georgia Tech, this AI system was trained on over 50,000 hours of speech from across the American South, including everything from Louisiana Creole influences to Appalachian mountain dialects. The program can distinguish between a Charleston accent and a Nashville twang with remarkable accuracy, recognizing subtle differences like the way Southerners might pronounce “pen” and “pin” identically. What makes DeepSouth particularly impressive is its ability to understand context clues, so when someone says “I’m fixin’ to go,” it knows they mean they’re about to leave. The system has been integrated into customer service applications across the region, dramatically improving communication between automated systems and Southern speakers.
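To make the “fixin’ to” example concrete, here is a minimal sketch of a phrase table that rewrites regional expressions into plain paraphrases. It is not how DeepSouth works (the article doesn’t say), and the `DIALECT_PHRASES` table and `normalize` function are hypothetical names invented for this illustration. A real system would need far more context than a lookup table.

```python
import re

# Hypothetical phrase table: regional expression -> plain paraphrase.
# Longer patterns come first so "all y'all" is handled before "y'all".
DIALECT_PHRASES = {
    r"\bfixin['’]? to\b": "about to",
    r"\ball y['’]all\b": "all of you",
    r"\by['’]all\b": "you all",
}


def normalize(text: str) -> str:
    """Replace each known regional expression with its paraphrase."""
    result = text
    for pattern, replacement in DIALECT_PHRASES.items():
        result = re.sub(pattern, replacement, result, flags=re.IGNORECASE)
    return result
```

For example, `normalize("I'm fixin' to go")` returns `"I'm about to go"`.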

Program #2 – NorthEast Voice Recognition System

The NorthEast Voice Recognition System tackles some of the most challenging accents in America, from the dropped R’s of Boston to the distinctive vowel shifts of Philadelphia. MIT researchers spent years collecting speech samples from New York boroughs, Massachusetts towns, and Philadelphia neighborhoods to create this program. The AI learned to decode phrases like “I pahked the cah in Hahvahd Yahd” and map them to the intended words, “I parked the car in Harvard Yard.” Perhaps most impressively, the system can differentiate between subtle neighborhood variations within major cities: it knows the difference between a Brooklyn accent and a Bronx accent, or between South Philly and North Philly speech patterns. This program has found applications in emergency services, where understanding regional speech patterns can literally be a matter of life and death.

Program #3 – Midwest Dialect Detection Engine

Don’t let anyone tell you Midwesterners don’t have accents. The Midwest Dialect Detection Engine proves otherwise by recognizing the subtle but distinct speech patterns across America’s heartland. This AI system was trained to pick up on the famous Midwest “ope” (that little sound people make when they bump into something), the way the vowel in words like “bag” and “flag” gets raised so that “bag” can sound closer to “bayg,” and the distinctive rhythm of Upper Midwest speech influenced by Scandinavian and German heritage. The program excels at understanding the polite, indirect communication style common in the region, where “that’s interesting” might actually mean “I completely disagree.” Researchers from the University of Wisconsin and University of Minnesota collaborated on this project, feeding the AI thousands of conversations from county fairs, hockey games, and coffee shops across the region. The system has proven invaluable for improving voice assistants in rural areas and small towns where standard AI often struggles.

Program #4 – Western American Speech Intelligence

The Western American Speech Intelligence program covers the vast and varied linguistic landscape from California surfer speak to Rocky Mountain dialects. Stanford University researchers created this system to handle everything from Valley Girl uptalk to the distinctive speech patterns of rural Montana and Wyoming. The AI learned to recognize the laid-back California vocal fry, the way Pacific Northwest speakers might say “bag” with a slightly different vowel sound, and the unique expressions that pepper Western American English. What’s fascinating about this program is how it handles the rapid evolution of California slang and tech-influenced language patterns emerging from Silicon Valley. The system continuously updates its understanding of new expressions and speech trends, making it one of the most adaptive accent recognition programs available. It’s currently being used in entertainment applications and customer service platforms across the western United States.

Program #5 – Texas-Specific Language Processing System

Everything’s bigger in Texas, including the challenge of understanding the state’s distinctive dialect – which is why researchers at the University of Texas at Austin created a dedicated AI system just for Lone Star State speech patterns. This program was trained on the unique blend of Southern drawl, Mexican Spanish influences, and cowboy vernacular that makes Texas English so distinctive. The AI can understand expressions like “all y’all,” recognize when someone’s “fixin’ to” do something, and interpret the subtle differences between East Texas pine country speech and West Texas ranch talk. The system also handles the bilingual code-switching common in areas with large Hispanic populations, seamlessly processing conversations that blend English and Spanish. Oil companies and tech firms across Texas have adopted this technology to improve communication in diverse workplaces where understanding regional speech patterns is crucial for safety and efficiency.

Training Methodologies and Data Collection

Building these accent recognition systems requires massive amounts of diverse speech data, collected through innovative methods that respect privacy while capturing authentic regional speech patterns. Researchers partner with local radio stations, conduct voluntary recording sessions at community events, and analyze publicly available speech databases to gather their training materials. The process involves careful demographic balancing to ensure the AI learns from speakers of different ages, educational backgrounds, and socioeconomic levels within each region. Scientists use advanced audio processing techniques to clean and categorize this data, marking specific phonetic features and linguistic patterns that characterize each accent. The training process can take months or even years, with AI systems processing millions of speech samples to build accurate models of regional pronunciation patterns and vocabulary usage.
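The demographic balancing step can be sketched as simple downsampling: group the recordings by some attribute and keep the same number from each group. This is just one possible approach, and the `balance_by_group` function and the `age_band` field used below are assumptions for illustration, not details from any of the projects above.

```python
import random
from collections import defaultdict
from typing import Dict, List


def balance_by_group(samples: List[Dict[str, str]], key: str, seed: int = 0) -> List[Dict[str, str]]:
    """Downsample so every group has as many samples as the smallest group."""
    groups = defaultdict(list)
    for sample in samples:
        groups[sample[key]].append(sample)
    if not groups:
        return []
    target = min(len(members) for members in groups.values())
    rng = random.Random(seed)  # fixed seed keeps the selection reproducible
    balanced = []
    for members in groups.values():
        balanced.extend(rng.sample(members, target))
    return balanced


# Hypothetical metadata: three younger speakers, one older speaker.
recordings = [
    {"id": "a", "age_band": "18-29"},
    {"id": "b", "age_band": "18-29"},
    {"id": "c", "age_band": "18-29"},
    {"id": "d", "age_band": "60+"},
]
balanced = balance_by_group(recordings, key="age_band")
```

Downsampling throws data away, so in practice teams may prefer to collect more recordings from the underrepresented group or to weight samples during training instead.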

Challenges in Regional Speech Recognition

Understanding regional accents presents unique challenges that go far beyond simple pronunciation differences, requiring AI systems to grasp cultural context and social nuances embedded in speech patterns. One major hurdle is the way accents can shift within the same conversation – someone might speak more formally with their boss but slip into heavier dialect when talking to family members. AI systems must also contend with generational differences, as younger speakers might blend traditional regional accents with influences from social media and global communication. The challenge becomes even more complex in areas where multiple cultural influences converge, creating hybrid accents that combine elements from different linguistic traditions. Researchers constantly work to improve these systems’ ability to handle code-switching, slang evolution, and the subtle emotional undertones that regional speech patterns can convey.

Real-World Applications and Success Stories

These accent recognition programs are already making significant impacts across various industries, from healthcare to emergency services to customer support systems. In hospitals across the South, DeepSouth AI helps medical staff understand patients who might struggle to communicate clearly during stressful situations, potentially saving lives by ensuring critical information isn’t lost due to accent barriers. Emergency dispatch centers in major cities use these systems to better understand callers during crisis situations, where every second counts and clear communication is essential. Call centers have reported dramatic improvements in customer satisfaction when their AI systems can properly interpret regional speech patterns, leading to fewer frustrating misunderstandings and more efficient problem resolution. Even entertainment companies use these technologies to create more realistic voice-controlled gaming experiences and virtual assistants that feel more natural to users from different regions.

Cultural Preservation Through Technology

Beyond practical applications, these AI programs serve as digital archives, preserving the rich linguistic heritage of American regional dialects for future generations. Many traditional accent features are disappearing as younger generations adopt more standardized speech patterns influenced by mass media and digital communication. These AI systems capture subtle pronunciation differences, unique expressions, and cultural speech patterns that might otherwise be lost to time. Linguists are using the data collected for these programs to study how American English continues to evolve and change across different regions. The technology essentially creates a linguistic time capsule, documenting how people actually speak in different parts of America during this particular moment in history, providing invaluable resources for future researchers studying language evolution.

The Role of Machine Learning in Accent Processing

Machine learning algorithms form the backbone of these accent recognition systems, using sophisticated neural networks that can identify patterns humans might miss entirely. These AI systems employ multiple layers of analysis, starting with basic sound recognition and building up to complex contextual understanding of regional speech patterns. The algorithms continuously refine their understanding through exposure to new speech samples, becoming more accurate over time as they encounter diverse speakers from each region. Deep learning techniques allow these systems to recognize not just what words are being said, but how the speaker’s regional background influences their pronunciation, rhythm, and word choice. The most advanced systems can even detect emotional states and intentions behind regional speech patterns, understanding when someone’s accent becomes more pronounced due to stress, excitement, or other factors.

Ethical Considerations and Bias Prevention

Developing accent recognition technology raises important questions about linguistic bias and the potential for these systems to perpetuate harmful stereotypes about regional speech patterns. Researchers work carefully to ensure their AI systems don’t make assumptions about speakers’ intelligence, education level, or social status based on their accents. The training process includes deliberate efforts to include diverse voices from each region, representing different socioeconomic backgrounds, education levels, and cultural identities to prevent biased outcomes. Scientists also grapple with questions about accent “correction” versus accent “recognition” – these systems are designed to understand regional speech, not to suggest that any particular way of speaking is superior to others. Privacy concerns also play a major role, as researchers must balance the need for comprehensive speech data with individuals’ rights to control how their voice patterns are used and stored.
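A common way to check whether a speech system serves some groups worse than others is to compare its word error rate (WER) across speaker groups. The sketch below assumes you already have reference transcripts and system outputs tagged with a group label. The group names and sample sentences are made up for illustration, and `wer_by_group` is a hypothetical helper rather than anything the researchers are known to use.

```python
from collections import defaultdict
from typing import Dict, Iterable, List, Tuple


def word_edits(ref: List[str], hyp: List[str]) -> int:
    """Minimum number of word substitutions, insertions, and deletions."""
    prev = list(range(len(hyp) + 1))
    for i, r in enumerate(ref, start=1):
        curr = [i]
        for j, h in enumerate(hyp, start=1):
            cost = 0 if r == h else 1
            curr.append(min(prev[j] + 1, curr[j - 1] + 1, prev[j - 1] + cost))
        prev = curr
    return prev[-1]


def wer_by_group(records: Iterable[Tuple[str, str, str]]) -> Dict[str, float]:
    """records holds (group, reference, hypothesis); returns pooled WER per group."""
    errors: Dict[str, int] = defaultdict(int)
    words: Dict[str, int] = defaultdict(int)
    for group, reference, hypothesis in records:
        ref, hyp = reference.split(), hypothesis.split()
        errors[group] += word_edits(ref, hyp)
        words[group] += len(ref)
    # Skip groups with no reference words to avoid dividing by zero.
    return {g: errors[g] / words[g] for g in errors if words[g] > 0}


# Made-up example data.
results = wer_by_group([
    ("group_a", "park the car", "park the car"),
    ("group_b", "park the car", "pack the cab"),
])
```

A large gap between groups in a report like this is a signal to collect more data for, or otherwise investigate, the group with the higher error rate.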

Technical Limitations and Ongoing Research

Despite impressive advances, current accent recognition technology still faces significant limitations that researchers are actively working to overcome. One major challenge is handling speakers who have lived in multiple regions and developed hybrid accents that blend features from different areas. These speech patterns can confuse AI systems trained on more distinct regional categories. The technology also struggles with speakers who modify their accents in different social situations, a phenomenon linguists call style-shifting and one that is often loosely described as code-switching. Background noise, phone line quality, and emotional states can all interfere with accurate accent recognition, leading to misunderstandings when the technology is most needed. Researchers are developing more robust systems that can maintain accuracy even under challenging acoustic conditions, while also working to expand recognition capabilities to include smaller regional variations and emerging accent patterns.
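One standard way to test robustness to background noise is to mix clean speech with noise at a chosen signal-to-noise ratio (SNR) and see how recognition holds up. Here is a minimal sketch of that mixing step, using plain Python lists as stand-ins for audio samples. The `mix_at_snr` function is a hypothetical helper written for this article, not taken from any of the systems above.

```python
import math
from typing import List, Sequence


def mix_at_snr(speech: Sequence[float], noise: Sequence[float], snr_db: float) -> List[float]:
    """Scale `noise` so the speech-to-noise power ratio equals `snr_db`, then add it."""
    if not speech or len(speech) != len(noise):
        raise ValueError("speech and noise must be non-empty and the same length")
    speech_power = sum(x * x for x in speech) / len(speech)
    noise_power = sum(x * x for x in noise) / len(noise)
    if noise_power == 0:
        raise ValueError("noise signal has zero power")
    # SNR in dB is 10 * log10(speech_power / noise_power), solved here for noise power.
    target_noise_power = speech_power / (10 ** (snr_db / 10))
    scale = math.sqrt(target_noise_power / noise_power)
    return [s + scale * n for s, n in zip(speech, noise)]


# Toy signals: at 0 dB the scaled noise has the same power as the speech.
mixed = mix_at_snr([1.0, -1.0, 1.0, -1.0], [0.5, 0.5, -0.5, -0.5], snr_db=0.0)
```

Running the same test sentences through a recognizer at, say, 20 dB, 10 dB, and 0 dB gives a rough picture of how quickly accuracy drops as conditions get worse.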

Integration with Existing Voice Technologies

The integration of regional accent recognition into existing voice technologies represents a major leap forward in making AI more inclusive and accessible to diverse American communities. Major tech companies are incorporating these specialized programs into their virtual assistants, smart home devices, and voice-controlled applications to better serve users from different regions. The process involves careful calibration to ensure these accent recognition capabilities work seamlessly with existing voice processing systems without creating new bugs or reducing overall performance. Engineers face the challenge of balancing regional specificity with computational efficiency, as processing multiple accent models simultaneously requires significant computing power. These integrated systems are being tested in real-world environments across different regions, with continuous feedback helping developers refine the technology to work better for everyday users who rely on voice-controlled devices in their homes and workplaces.
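One possible way to avoid running every accent model at once is to run a lightweight accent classifier first and route the audio to a single regional model, falling back to a general model when the classifier isn’t confident. The sketch below shows only the routing decision. The model names, the `choose_model` function, and the 0.6 threshold are all assumptions for illustration, and the article doesn’t say how any vendor actually does this.

```python
from typing import Dict


def choose_model(accent_scores: Dict[str, float], threshold: float = 0.6,
                 fallback: str = "general") -> str:
    """Pick the top-scoring regional model, or the fallback if confidence is low."""
    if not accent_scores:
        return fallback
    region, score = max(accent_scores.items(), key=lambda item: item[1])
    return region if score >= threshold else fallback


# Hypothetical classifier outputs for two callers.
confident = choose_model({"southern": 0.8, "northeast": 0.1, "midwest": 0.1})
uncertain = choose_model({"southern": 0.4, "midwest": 0.35, "western": 0.25})
```

The trade-off is that a wrong routing decision sends the speaker to a poorly matched model, so the threshold has to be tuned against real traffic.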

Future Developments and Emerging Trends

The future of regional accent recognition technology promises even more sophisticated capabilities, including real-time accent adaptation and cross-cultural communication assistance that could revolutionize how we interact with AI systems. Researchers are developing next-generation programs that can learn new accent patterns on the fly, adapting to individual speakers’ unique speech characteristics while maintaining understanding of broader regional patterns. Emerging trends include the integration of visual cues with audio processing, allowing AI systems to better understand speech by observing lip movements and facial expressions alongside regional accent patterns. Scientists are also exploring the potential for these systems to facilitate better communication between speakers of different regional dialects, essentially serving as real-time interpreters for distinctly American speech variations. The technology may soon expand beyond English to handle multilingual accent patterns common in immigrant communities, creating more inclusive AI systems that reflect America’s linguistic diversity.

Economic Impact and Market Applications

The economic implications of regional accent recognition technology extend far beyond tech companies, creating new opportunities and improving efficiency across multiple industries that rely on clear communication. Businesses operating across different regions report significant cost savings when their automated systems can understand customers’ regional speech patterns, reducing the need for human intervention in routine interactions. The technology has created new job opportunities for linguists, voice coaches, and AI trainers who specialize in regional speech patterns, while also making existing customer service positions more effective. Market research firms use these systems to better analyze consumer feedback from different regions, gaining insights into regional preferences and cultural attitudes that inform business strategies. The healthcare industry has seen particularly strong returns on investment, as improved communication with patients from different regions leads to better care outcomes and reduced liability risks from miscommunication.

Global Perspectives on American Accent Recognition

International companies and organizations are increasingly interested in American regional accent recognition technology as they seek to better communicate with diverse American audiences and partners. Foreign businesses operating in the United States find these systems invaluable for training their staff to understand American customers and colleagues from different regions, improving business relationships and reducing cultural misunderstandings. The technology also helps international students and immigrants who are learning to navigate American English in its various regional forms, providing practical tools for understanding the speech patterns they encounter in different parts of the country. Academic institutions worldwide are studying these American accent recognition programs to develop similar technologies for their own linguistic landscapes, recognizing the broader applications for preserving and understanding regional speech variations. This global interest has sparked collaborative research projects that combine American accent recognition expertise with international linguistic research, creating more comprehensive understanding of how AI can bridge communication gaps across different cultures and regions.

These five AI programs represent just the beginning of a technological revolution that promises to make digital communication more inclusive and effective for speakers from all corners of America. As these systems continue to evolve and improve, they’re not just solving technical problems – they’re celebrating the rich diversity of American speech while ensuring that everyone’s voice can be heard and understood in our increasingly digital world. What accents do you think will be the next frontier for AI recognition technology?