Imagine asking your phone a question and hearing a familiar, gentle voice—one that almost always sounds feminine, polite, and eager to help. Now picture a robot assistant in a hospital, programmed with a distinctly masculine tone of authority. These choices aren’t just about aesthetics or branding. The way we design and assign gender to machines says something deep about us, our values, and the world we’re building. In a time when technology is everywhere, the ethics of gendered robots and digital assistants is more than a technical issue—it’s a mirror reflecting society’s hopes, biases, and the future we want to create. Let’s dive into this surprisingly personal and controversial topic.

How Gender Shapes Our Relationship with Technology

Most of us don’t think twice when Siri, Alexa, or Google Assistant responds with a female voice. But this isn’t a random choice. Some research suggests that people tend to trust, and feel more comfortable with, feminine voices in service roles. Companies have leaned into this, using gentle, caring tones that mirror long-standing gender stereotypes. Why does it matter? When we consistently experience machines as “female” helpers or “male” authorities, these subtle cues shape how we see both technology and each other. The impact goes beyond convenience: it can reinforce old ideas about who should serve and who should lead.

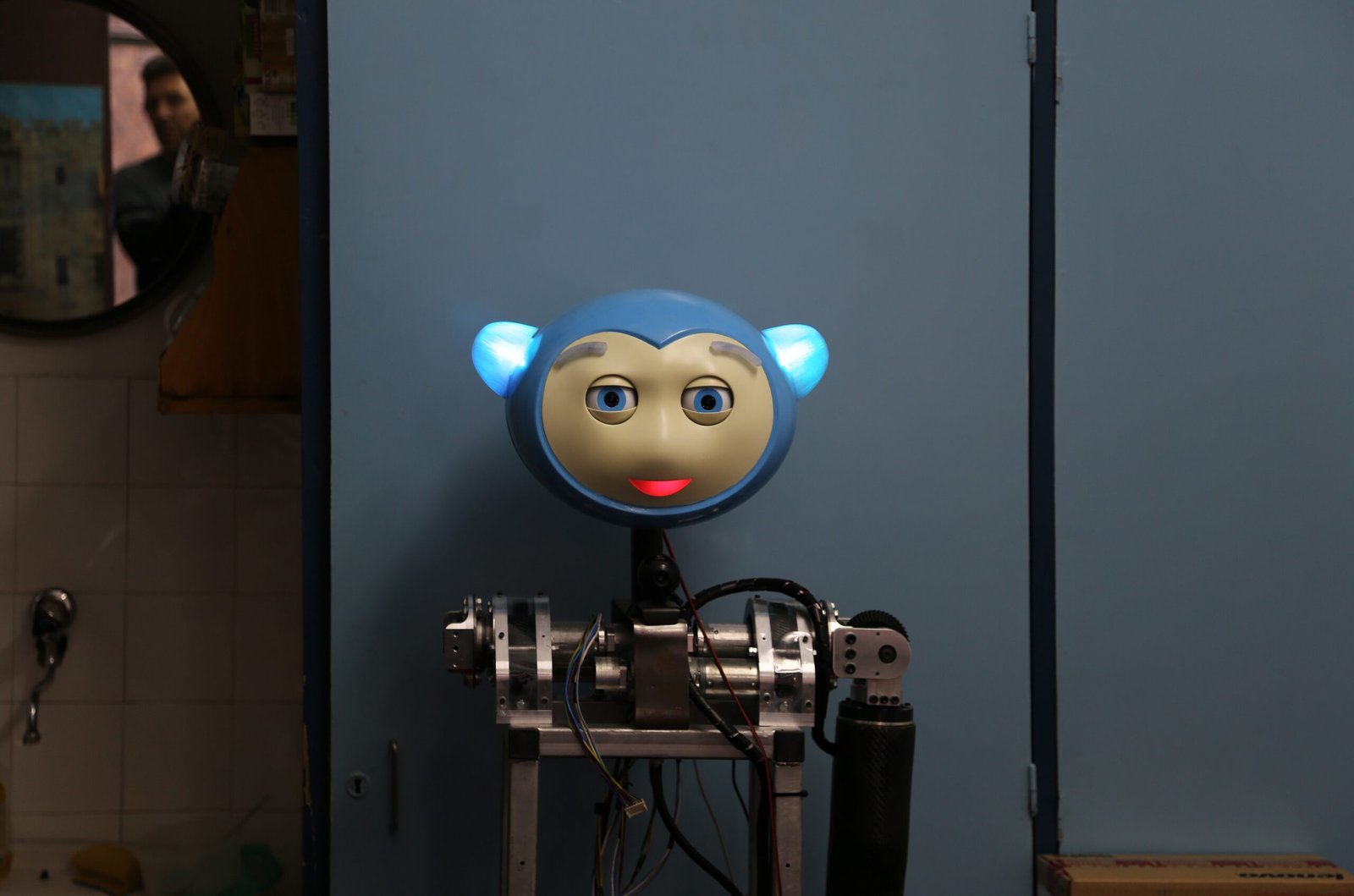

The History of Gendered Machines

Gendered machines aren’t new. Before smartphones, GPS navigation devices often defaulted to female voices, and long before that, it was real women working as secretaries and operators who formed the unseen backbone of communication. As robots entered factories and homes, designers often assigned them a gender, consciously or unconsciously. From Rosie the Robot in “The Jetsons” to HAL 9000’s emotionless male presence in “2001: A Space Odyssey,” the choices reflected society’s assumptions about work, care, and authority. Today, the legacy of these decisions lingers in both the look and sound of our most advanced AI.

The Science Behind Voice and Perception

Voices are powerful. Studies in neuroscience and psychology reveal that we process gender cues in speech almost instantly. A higher pitch, softer tone, and certain speech patterns are quickly associated with femininity, while deeper, more monotone voices often signal masculinity. These associations affect everything from trust to perceived competence. When tech companies select a voice for their assistant, they’re not just picking a sound—they’re triggering subconscious reactions that influence how we interact with machines, and even how we feel about ourselves.

Why Companies Choose Gendered Voices

It’s no accident that so many digital assistants launched with female names and voices. Market research suggests users find female voices warmer, more approachable, and less threatening. For tech giants, this translates to higher engagement and user satisfaction. But the decision is more than just commercial. By making these choices, companies are shaping cultural expectations around gender and technology. Some critics argue that this feeds into outdated stereotypes, while others defend the practice as simply giving people what they want.

Reinforcing Gender Stereotypes

When we ask a digital assistant to turn off the lights or schedule a meeting, we’re not just interacting with a machine—we’re also participating in a subtle dance of social roles. Assigning female voices to service-oriented tasks can reinforce the idea that women exist to help, support, and accommodate. Meanwhile, more authoritative or technical robots are often given male voices, suggesting men are natural leaders or problem-solvers. Over time, these patterns can shape how children and adults alike perceive gender roles, both in technology and in everyday life.

The Impact on Children and Learning

Children growing up with Alexa and Siri may absorb more than just facts and jokes. These assistants, with their soothing female voices, become quiet teachers of social norms. If every digital helper sounds like a woman, what message does that send about who helps and who leads? Some psychologists warn that repeated exposure to these patterns can reinforce narrow ideas about gender, potentially limiting how children see themselves and their possibilities. It’s a small detail with big implications for the next generation.

Representation and Diversity in AI

As our world becomes more diverse, there’s a growing push for technology to reflect that reality. Some companies have begun offering non-binary or customizable voices, allowing users to choose how their assistant sounds. This shift isn’t just about inclusion—it’s about recognizing that gender isn’t a binary, and technology shouldn’t force people into boxes. By broadening representation in AI, we can help create a world that celebrates difference rather than erasing it.

The Question of Consent and Agency

Robots and digital assistants are designed to serve, but their gendered personalities raise tough questions about consent and agency. If a device is programmed to always be agreeable, patient, and submissive—especially in a female-coded way—does that teach users to expect the same from real people? Some ethicists fear this could encourage disrespectful or even abusive behavior, blurring the line between fantasy and reality.

Ethical Design: Who Decides?

Behind every digital assistant is a team of designers, engineers, and marketers making choices about voice, personality, and gender. But who gets to decide what a robot “should” sound like? Should ethics committees have a say? Should there be guidelines or regulations? These are hard questions, and the answers will shape not just our gadgets, but the values we pass on to future generations.

Privacy Concerns and Gendered Data

The voices we hear from our devices are shaped by vast amounts of data—often collected from real people. But whose voices are being sampled? Are certain genders or accents underrepresented? As companies train AI on massive datasets, there’s a risk of reinforcing biases that already exist in society. Protecting privacy and ensuring diversity in training data are crucial steps to building fairer, more trustworthy technology.

Global Perspectives on Gendered AI

What feels normal in one culture may seem odd or even offensive in another. In some countries, a female-voiced assistant is seen as polite and friendly; elsewhere, it might be considered inappropriate or disrespectful. Global tech companies must navigate a patchwork of cultural expectations, sometimes offering different voices or personalities based on regional norms. This highlights how deeply gendered technology is tied to social context.

Non-Binary and Gender-Neutral Alternatives

A growing movement is challenging the idea that machines must have a gender at all. Some startups and researchers are developing gender-neutral voices—neither obviously male nor female. These options can make technology more inclusive for non-binary users, or simply those who prefer not to assign gender to their devices. It’s a small but powerful step towards breaking free from restrictive stereotypes.

The Role of Science Fiction and Pop Culture

From sci-fi movies to TV shows, our visions of the future are filled with gendered robots and AI. Think of C-3PO, with his fussy British-butler manner, or “Her,” in which the protagonist falls in love with a female-voiced operating system. These stories shape our expectations, sometimes pushing us to imagine new possibilities, and other times reinforcing old patterns. Pop culture isn’t just entertainment; it’s a laboratory for the ethics of tomorrow’s technology.

Economic and Social Implications

The gender of robots and digital assistants isn’t just about personal preference: it has real economic consequences. Some studies suggest that people are more likely to follow advice or make purchases when the message is delivered by a voice that matches their expectations for the role. This can influence everything from online shopping to healthcare decisions. At the same time, it raises questions about fairness, manipulation, and the responsibilities of companies shaping consumer behavior.

Legal and Policy Challenges

Governments and regulatory bodies are starting to take notice of the ethical dilemmas posed by gendered AI. Some have proposed guidelines for fair representation and the avoidance of harmful stereotypes. Others are exploring laws to protect users from manipulation or bias. As technology evolves, the legal system will have to grapple with questions that once seemed like science fiction.

The Path Forward: Designing Ethical AI

Creating ethical technology isn’t easy, but it’s possible. Companies can start by involving ethicists, psychologists, and a diverse range of voices in the design process. Transparency about how decisions are made—and why—can help build trust. And by offering more choices, from gender-neutral voices to customizable personalities, we can give users more control over how they interact with technology.

Personal Responsibility and Everyday Choices

At the end of the day, the future of gendered robots and digital assistants is in our hands. Every time we choose a voice, offer feedback, or talk about technology, we’re shaping the norms of tomorrow. It’s up to all of us—designers, users, parents, and dreamers—to ask tough questions and demand better answers. After all, the machines we create will reflect who we are, and who we hope to become.