From hospital wards to wildfire watchtowers, a quiet series of showdowns has been unfolding where algorithms square off against skilled people. These aren’t flashy demos; they’re careful tests with real stakes: lives, land, money, and trust. The twist is that victories cut both ways, revealing strengths, blind spots, and a messy middle where hybrids often win. Here’s what’s actually happening inside seven under-the-radar contests – and why the results matter far beyond any scoreboard.

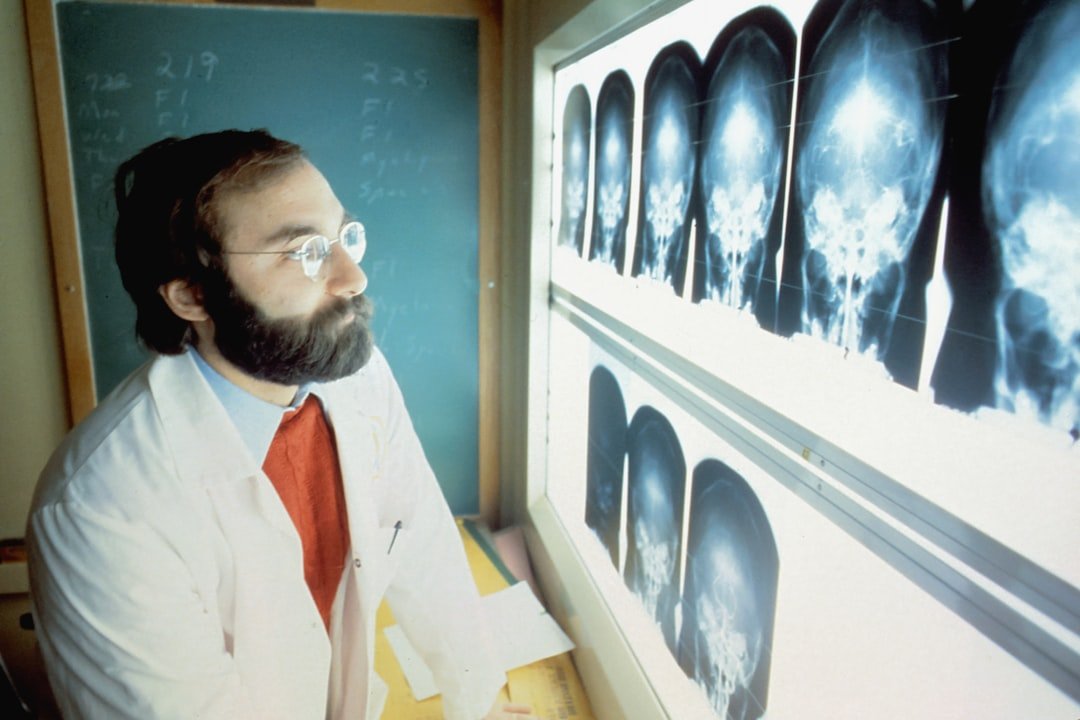

The Hidden Clues: AI Mammography vs Human Radiologists

What if a second pair of eyes never blinked and never got tired? In multiple European screening programs, AI has stepped in as an independent reader, matching human double-reading performance while cutting radiologists’ reading workload by roughly half. The technology excels at consistency across long shifts, flagging subtle distortions and faint calcifications that can be easy to overlook late in the day.

But radiologists aren’t out of the loop; they’re moving into higher-judgment roles – arbitrating tricky cases, talking with patients, and managing follow-ups. The early pattern is clear: AI reduces misses and repetitive strain, while humans handle nuance, uncertainty, and responsibility. When the two collaborate, cancer detection and time-to-diagnosis tend to improve without flooding clinics with unnecessary recalls.

Eyes on the Sky: AI Weather Models vs Veteran Forecasters

For decades, global forecasts were powered by physics-heavy numerical models that gulp supercomputing hours. Now, AI systems trained on decades of reanalysis data can produce global predictions in minutes and often rival the skill of traditional models at short to medium range. Meteorologists are learning to cross-check AI guidance the way a pilot reads multiple instruments.

Where storms get weird – rapid intensification, compound floods, cut-off lows – humans still interrogate patterns, ensembles, and local effects. The emerging workflow looks pragmatic: AI for speed and broad skill, people for sanity-checking patterns and communicating risk. Faster turnaround means earlier warnings, and in weather, an extra hour can save neighborhoods.

The Quiet Watchers: AI Wildfire Spotters vs Lookout Towers

On ridgelines across the American West, cameras now sweep canyons while algorithms sift thousands of frames for the first hint of smoke. In several incidents, automated alerts have pinged dispatchers minutes before 911 calls arrived, buying precious time for initial attack. The machines never nap; they just feed a steady stream of “possible smoke” snapshots to human eyes.

False alarms still happen – dust, fog, and low clouds can fool even good models – so fire crews keep a human in the loop to verify. The win is in the handoff: AI narrows the haystack; dispatchers and rangers decide when to roll engines. In a summer where wind can turn a spark into a disaster, those minutes can be the difference between a contained spot fire and a headline-making blaze.

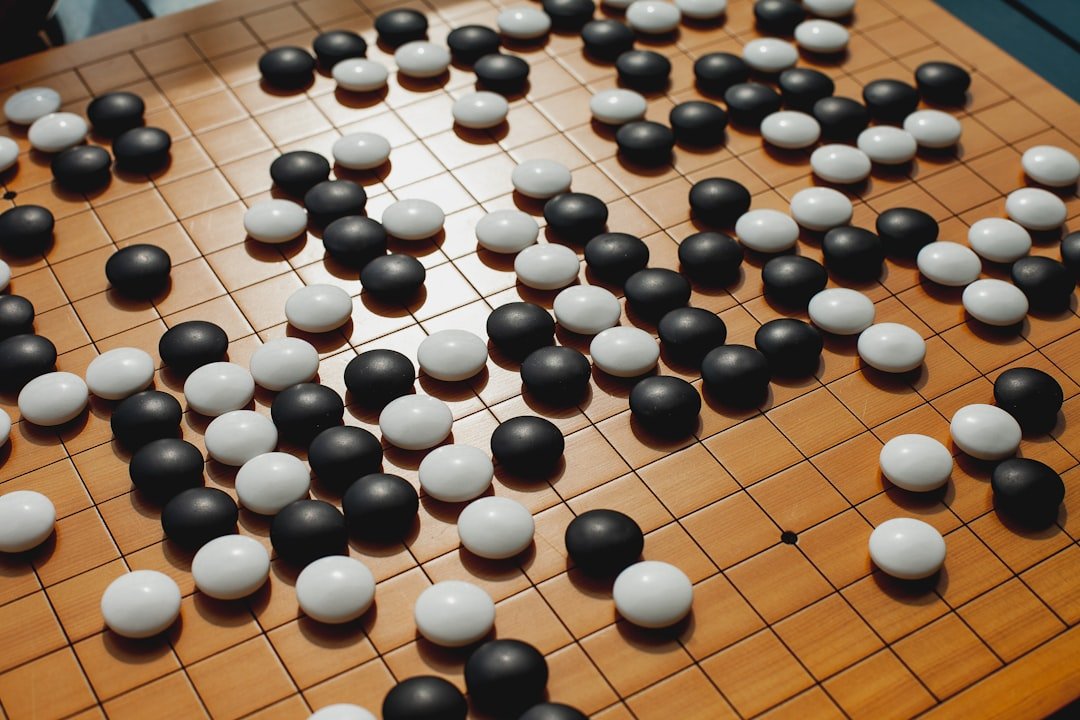

The Unseen Gambit: Humans vs Go AIs Through Adversarial Play

Go programs shattered human supremacy, then researchers did something mischievous: they found ways for amateurs to bait the machines. By exploiting blind spots in what the AI had learned during training, players could spring traps that a world champion would laugh off but the AI misread. It was a reminder that pattern-matching systems can be brilliant and brittle at the same time.

Professional players still lean on AI for study, yet this exploit showed that targeted creativity can puncture overwhelming strength. It’s not a return to human dominance – it’s proof that strategy includes knowing your opponent’s assumptions. In real-world domains, that translates into adversarial testing and constant red-teaming, not blind faith.

Steady Hands: Autonomous Suturing vs Surgical Masters

Soft-tissue surgery is a wobbly frontier, literally; organs move, stretch, and bleed. In carefully controlled trials on animal tissue, autonomous systems have stitched with striking uniformity, reducing leakage and spacing errors compared with manual runs. Robots thrive on steadiness, repeating precise motions without tremor or fatigue.

Surgeons, though, bring situational judgment – recognizing a subtle color change that hints at perfusion issues or deciding to abandon a plan mid-suture. The smart compromise is emerging in the OR: autonomy for narrow, repetitive tasks; humans for oversight and improvisation. That split frees skilled hands for problems that actually require a brain.

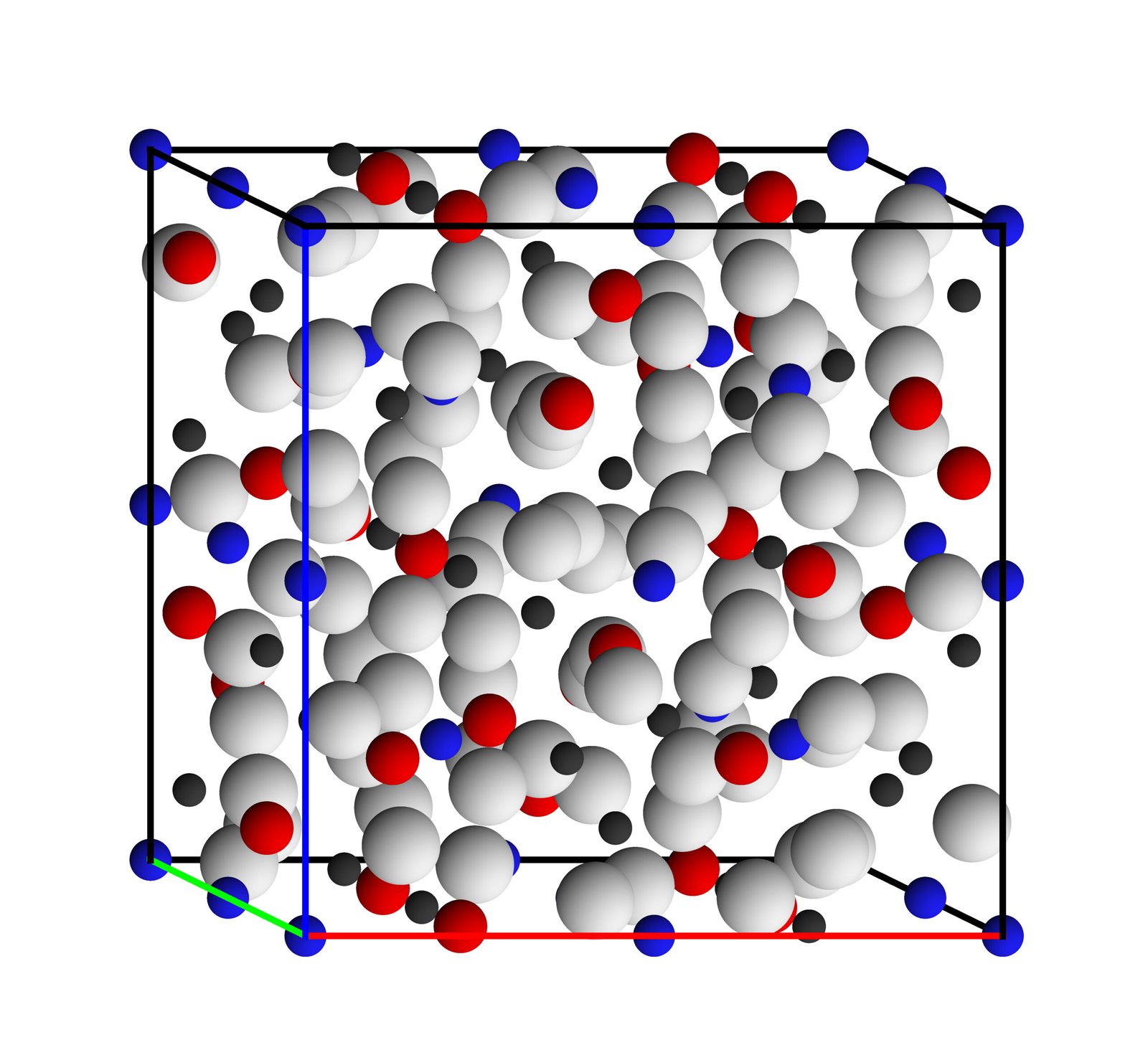

Alchemy Rewritten: Materials Discovery Engines vs Lab Intuition

For generations, new materials emerged from a mix of theory, serendipity, and a lot of furnace time. Now graph-based models predict which crystal structures should be stable before anyone melts a pellet, proposing hundreds of thousands of candidates in silico. Labs have already validated a growing slice, accelerating everything from battery cathodes to thermal barriers.

Is the machine winning? It’s more collaborative than that: algorithms map a landscape; chemists choose promising paths and handle the tricky syntheses. The effect is compounding – each experimental success refines the models, which then point to better targets. Discovery is speeding up without losing the craft.

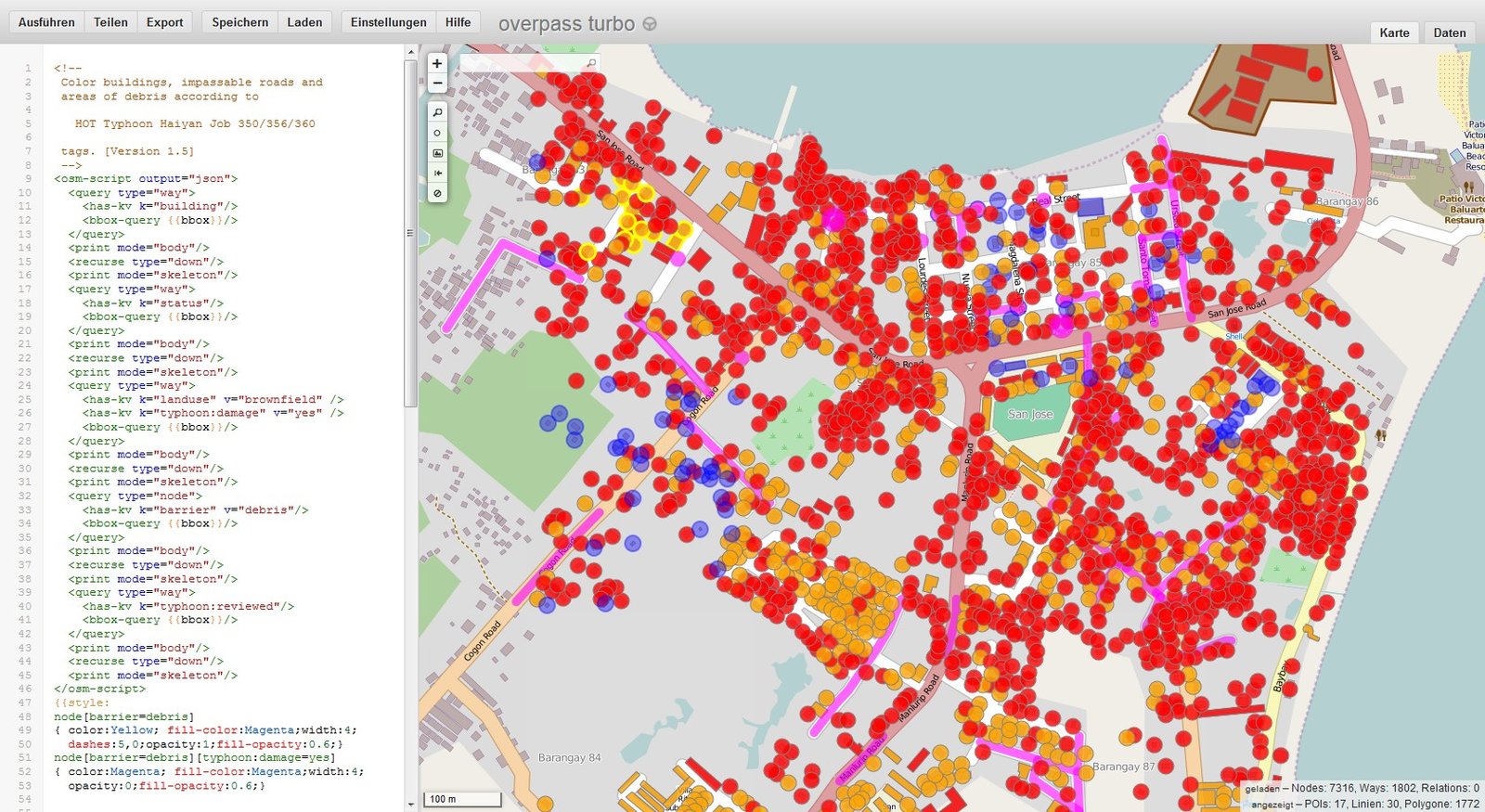

Maps in a Crisis: AI Damage Assessors vs Human Mappers

When rivers jump their banks or earthquakes hit at night, responders need maps overnight, not next week. AI systems trained on radar and optical satellite imagery can outline flooded roads, collapsed roofs, and blocked bridges while aircraft are still circling. Human volunteers and analysts then audit the machine output, adding context like evacuation routes and critical facilities.

The pairing keeps improving: machines chew through pixels; people prioritize, verify, and talk with crews on the ground. That division of labor means faster relief logistics and fewer blind spots in complex urban terrain. In disasters, speed without trust is dangerous – this workflow buys both.

Why It Matters: The Stakes of These Human–Machine Duels

The common thread across these battles is not replacement; it’s redistribution. Machines are absorbing repetitive perception tasks – spot the lesion, track the smoke, segment the flood – freeing humans to judge, explain, and decide. The biggest gains show up where error costs are high and time pressure is brutal.

Compared with old workflows, hybrid teams are delivering earlier alerts, steadier precision, and fewer backlogs. The trade-offs are real: bias in training data, brittleness when real-world conditions drift away from the training data, and the risk of over-trusting neat outputs. The lesson is practical and a little uncomfortable – progress comes from pairing relentless automation with relentless skepticism.

The Future Landscape: What Comes Next – and How You Can Engage

Expect more foundation models tuned for domains: healthcare, earth systems, critical infrastructure. Scrutiny will intensify too – regulators and professional bodies are moving toward clearer audit trails, incident reporting, and real-world performance dashboards. Global competition will push speed; public trust will demand verification, stress tests, and transparent failure modes.

Here’s how to plug in without getting swept away: support open datasets for safety testing, back community wildfire-camera networks, and champion hospitals that publish validation results before deployment. If you code, contribute to open red-teaming toolkits; if you don’t, ask blunt questions when a service touts “AI-powered” claims. The real contest isn’t humans versus machines – it’s vigilance versus complacency. Are you rooting for the right side?

Suhail Ahmed is a passionate digital professional and nature enthusiast with over 8 years of experience in content strategy, SEO, web development, and digital operations. Alongside his freelance journey, Suhail actively contributes to nature and wildlife platforms like Discover Wildlife, where he channels his curiosity for the planet into engaging, educational storytelling.
